Have you ever come across the “Excluded by ‘noindex’ tag” status in Google Search Console? If so, what did you do about it?

We checked with multiple SEO practitioners; many take this issue lightly, and few spend time fixing it.

But you should be clear about why this issue has occurred. Are all the affected web pages deliberately marked “noindex” in the robots meta tag, or is some other internal problem causing it?

In this article, we will explain what lies behind this status and share some ways to fix it.

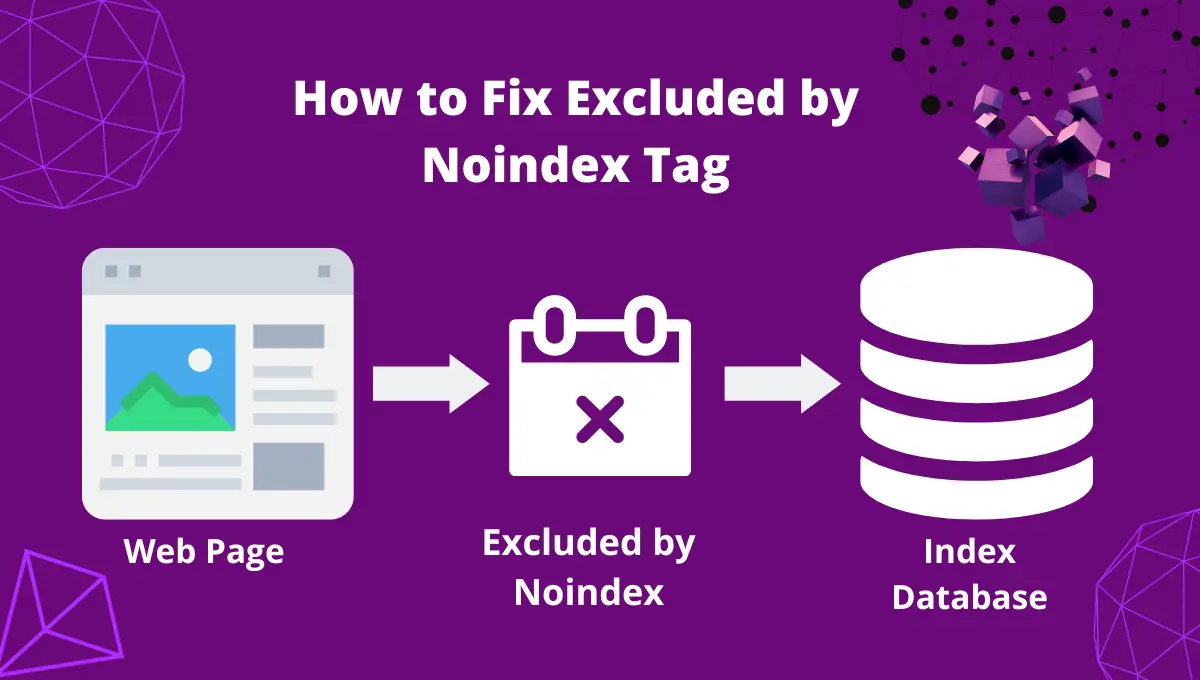

What is Excluded by Noindex Tag?

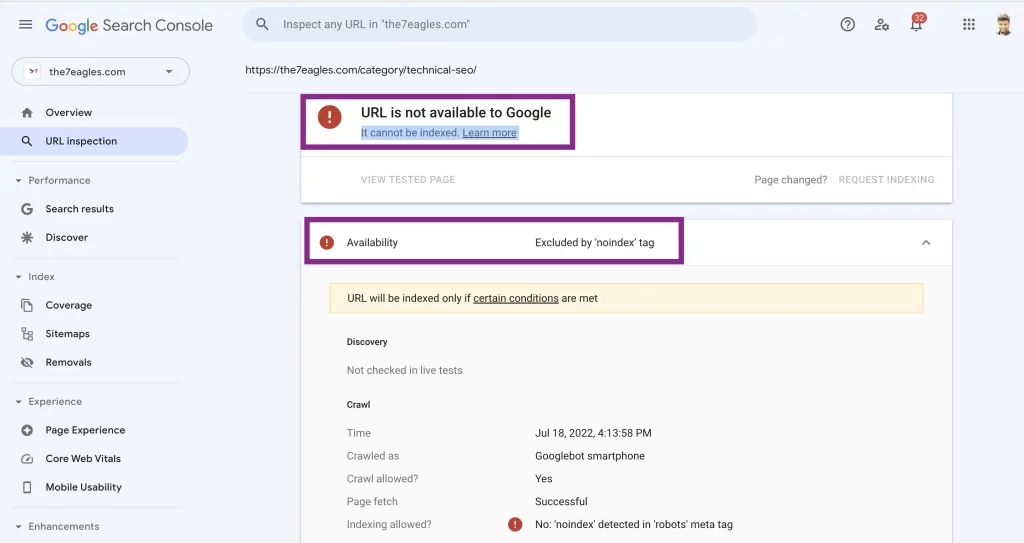

This common Google Index coverage issue occurs when the “noindex” robots tag excludes a web page from indexing.

Usually, web admins exclude a few pages, like category, pagination, etc., from indexing to save crawl budget.

If these are the pages reported under ‘Excluded by noindex tag’, that’s fine. If pages you want to rank show up there instead, the problem is serious and might hurt their rankings.

How to Find Excluded by Noindex Tag Web Pages?

You can find this report in the index coverage section of Google Search Console (GSC), as shown in the images above.

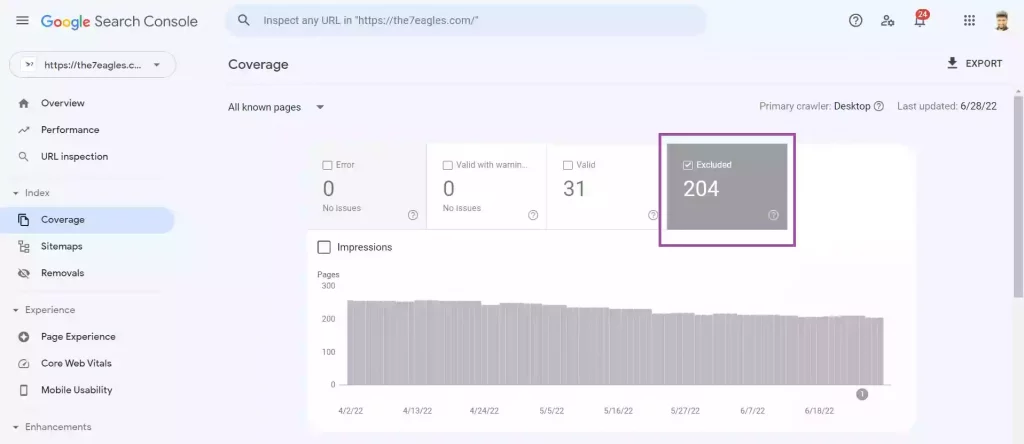

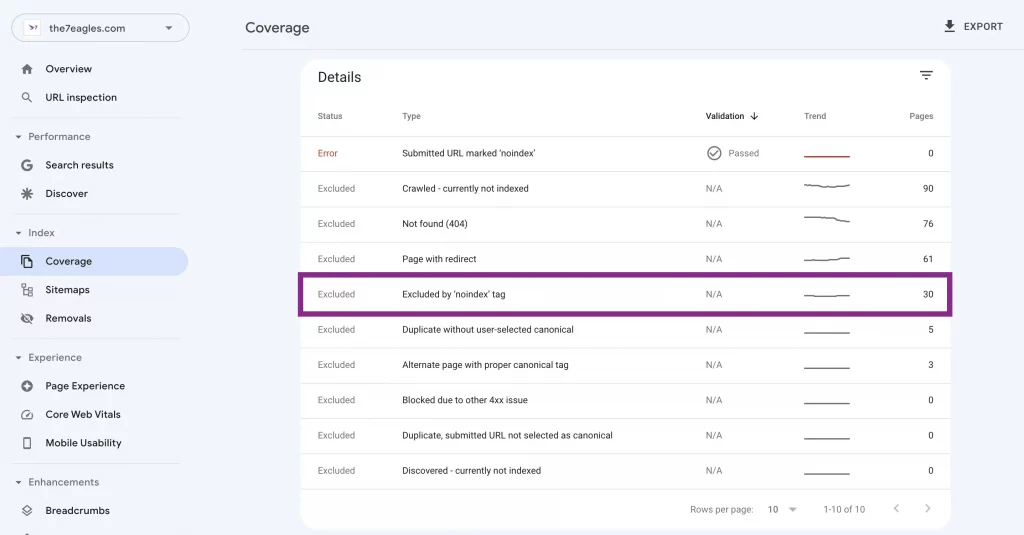

In GSC, select Coverage. You will see four options on the right side: Error, Valid with warning, Valid, and Excluded.

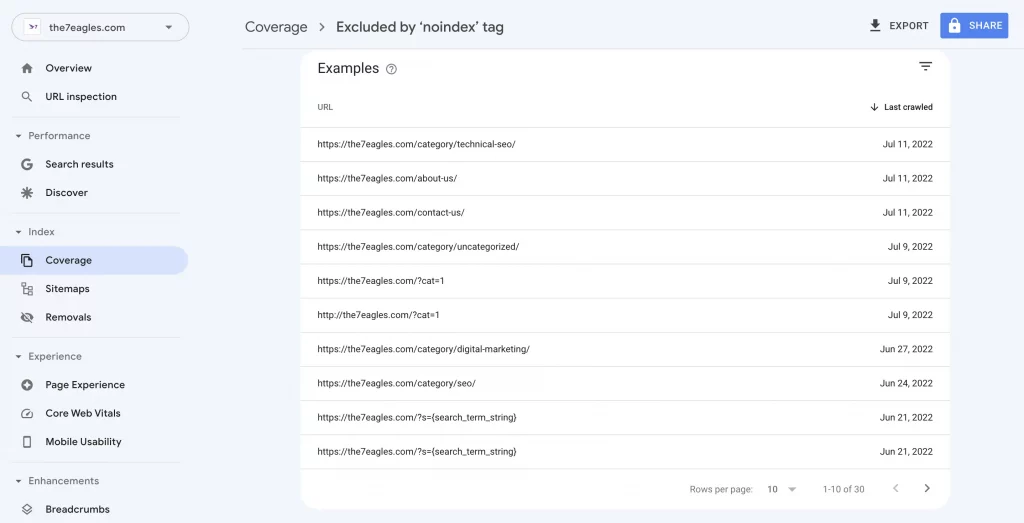

To see the details behind the Excluded by noindex tag issue, select the Excluded option. You will get the list of URLs, as seen in the third image.

Reasons That Cause Excluded by Noindex Tag:

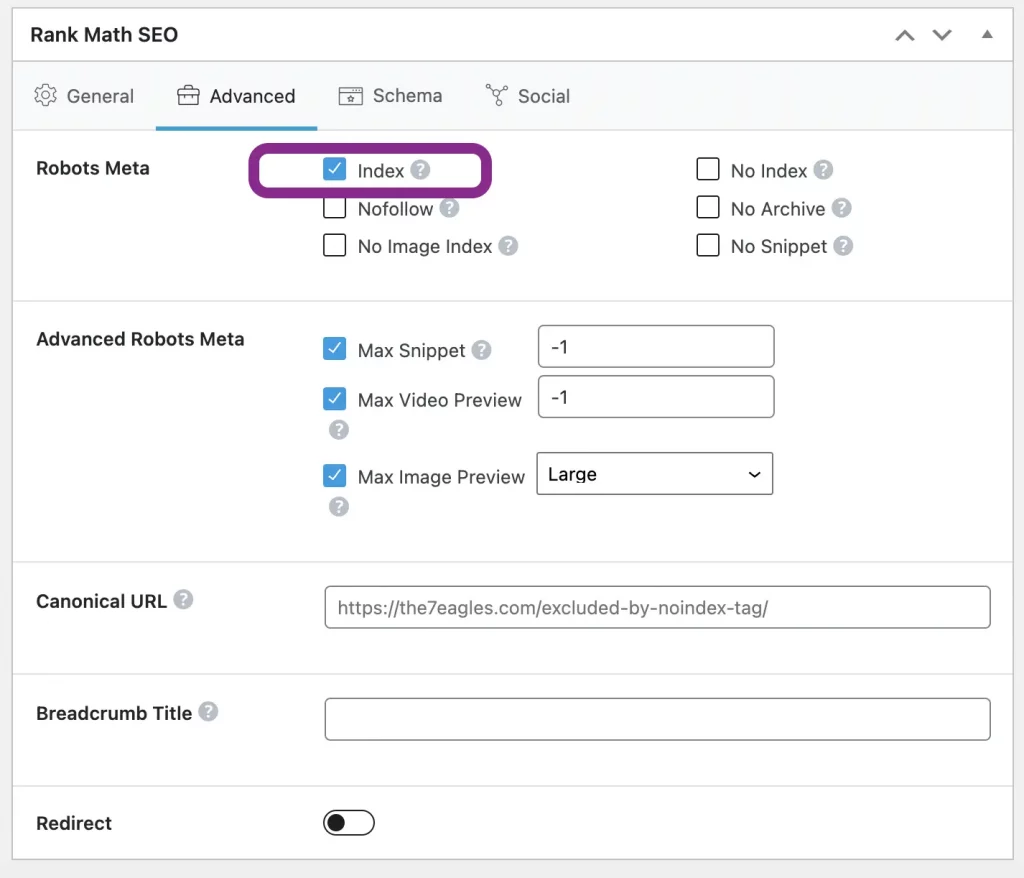

Web Pages marked Noindex in Meta Robots Tag:

The most common reason for this coverage issue is a web page being marked ‘noindex’ in the meta robots tag.

This is common, and as long as no page with ranking potential is marked ‘noindex,’ you can relax. It won’t hurt your SEO or ranking.

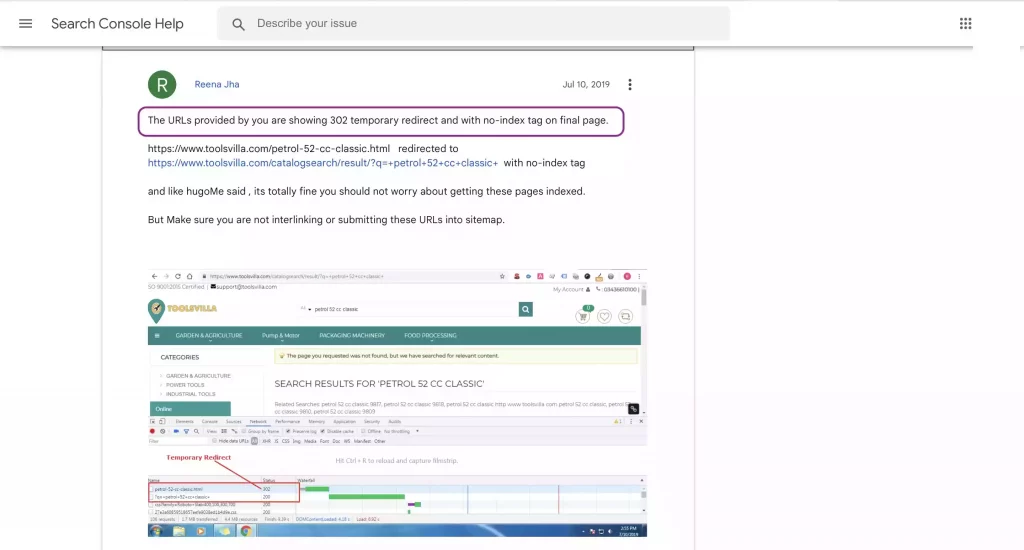

Redirected Page Might Have Noindex Robots Tag:

When a web page redirects (301/302) to a destination page that is marked ‘noindex’ in the robots tag, the redirecting page can also be reported as excluded by the noindex tag.

This issue was discussed in the Search Console Help forum, where a web page was excluded from indexing because of a 302 redirect.

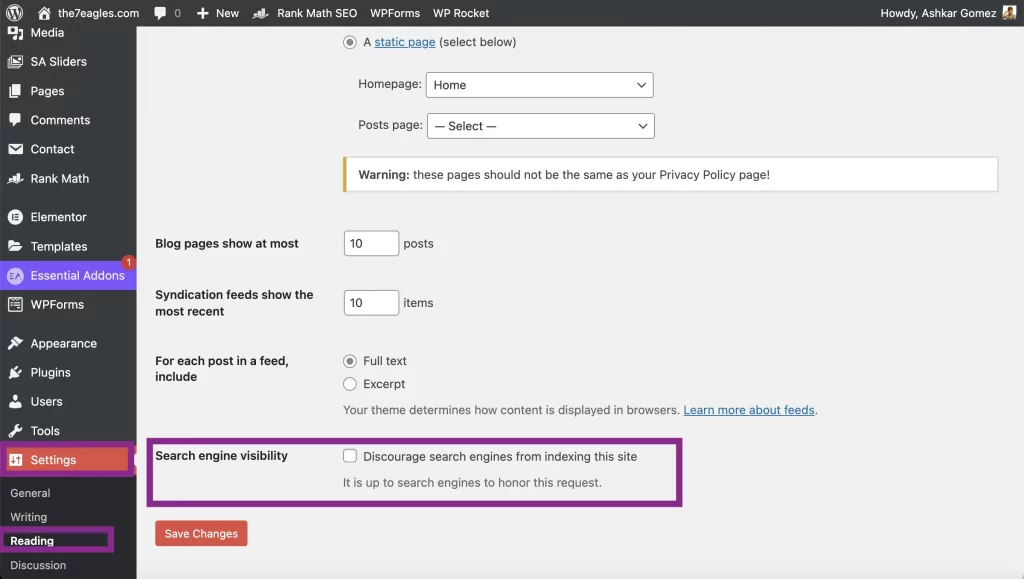

CMS Settings Discouraging Search Engine Visibility:

Most CMSs (content management systems) offer a setting that discourages search engines from indexing the website.

Above, we have attached the settings of WordPress.

This option is usually switched on while a website is being developed, to keep it out of the index until launch.

Unfortunately, the setting is sometimes never switched off afterward, which leaves the live website excluded from indexing.

Malfunction Caused by Plugins:

A few plugins can change the site’s underlying code in a way that sets the robots tag to noindex, even after you edit it through SEO plugins like Rank Math or Yoast.

This can happen with security plugins like iThemes Security. It can also happen when you use a cracked version of any plugin.

Coming back to the security plugin, why do these plugins cause an issue? These plugins run as firewalls.

Third-party servers host these firewalls, and they can add an X-Robots-Tag HTTP header to your pages. If this header carries a ‘noindex’ directive, changing the base code or the plugin settings alone won’t fix the problem.

How to Fix Excluded by Noindex Tag Issues?

Before learning how to fix this issue, ask yourself a couple of questions.

- Did you mark the web pages under this exclusion as ‘noindex’ yourself?

- Does the affected page have ranking potential, or is it one of your target pages?

When pages with ranking potential are affected, you should fix them immediately. If not, focus on other issues that need immediate attention.

Here are a few ways to fix this issue.

Check & Fix the Robots Tag of the Web Page:

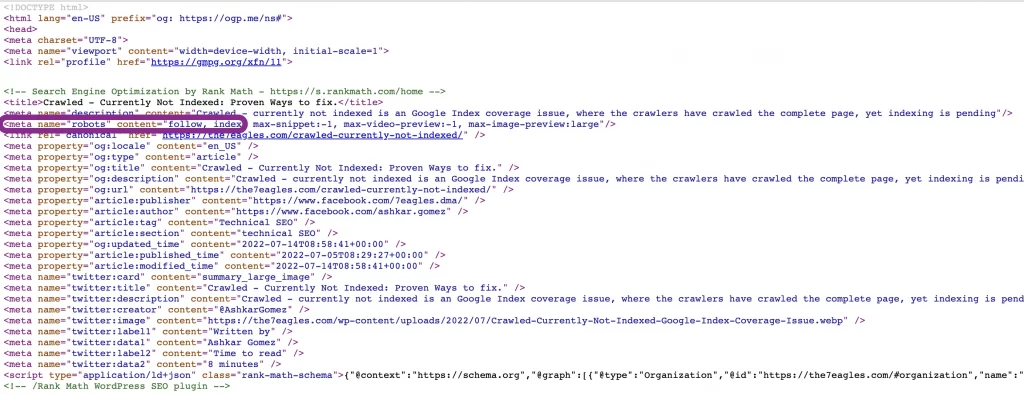

You can check the source code of any web page by right-clicking on it and selecting View Page Source.

A page like the one in the image above will open.

Here, you can check the robots tag of the web page. It appears as follows:

<meta name="robots" content="index"/>

If the robots tag is ‘index’, use the URL Inspection tool in Google Search Console to request indexing.

If the robots tag appears as below,

<meta name="robots" content="noindex"/>

your robots tag is noindex, and you can use the steps below to fix it.
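If you have many pages to check, reading the source by hand gets slow. The following is a minimal sketch of the same check in Python, using only the standard library. The names `RobotsMetaParser` and `is_noindex` are our own for illustration, and the sketch assumes you already have the page's HTML as a string. It also treats `none` as noindex, since that value is shorthand for "noindex, nofollow".

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags (hypothetical helper)."""

    # Assumption: we look at the generic "robots" tag and the Google-specific one.
    META_NAMES = ("robots", "googlebot")

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so fall back to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() in self.META_NAMES:
            content = attr_map.get("content", "")
            for directive in content.split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.append(directive)


def is_noindex(html):
    """Return True if the page's robots meta tag asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex"/></head></html>'
    print(is_noindex(page))
```

This only inspects the HTML you pass in. A noindex that is injected by JavaScript or sent as an HTTP header would not be detected by this sketch.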

Remove Redirects to Noindex Pages:

If you find that a web page responds with a 301 or 302 status code and the destination page is noindex, you must remove the redirection.

If the destination page has a ‘noindex’ robots tag, it might also affect the redirecting web pages.
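To illustrate the redirect check, here is a rough sketch that walks a redirect chain and reports whether it ends on a noindex page. Everything in it is an assumption made for the example: `fetch` is a hypothetical function you would supply (it returns a status code and the `Location` value for a URL), and `noindex_urls` is a set of pages you have already found to be noindex. Besides 301 and 302, the sketch also follows the other standard redirect codes (303, 307, 308).

```python
from urllib.parse import urljoin

# 301/302 are the codes discussed in this article; the rest are other HTTP redirects.
REDIRECT_CODES = {301, 302, 303, 307, 308}


def follow_redirects(start_url, fetch, max_hops=10):
    """Return the list of URLs visited, ending at the first non-redirect response.

    `fetch` is a hypothetical callable: fetch(url) -> (status_code, location_or_None).
    """
    chain = [start_url]
    url = start_url
    for _ in range(max_hops):
        status, location = fetch(url)
        if status not in REDIRECT_CODES or not location:
            return chain
        # Location may be relative, so resolve it against the current URL.
        url = urljoin(url, location)
        if url in chain:
            raise ValueError(f"redirect loop detected at {url}")
        chain.append(url)
    raise ValueError(f"more than {max_hops} redirects from {start_url}")


def redirects_into_noindex(start_url, fetch, noindex_urls):
    """Return True if start_url redirects and the final destination is noindex."""
    chain = follow_redirects(start_url, fetch)
    return len(chain) > 1 and chain[-1] in noindex_urls


if __name__ == "__main__":
    # Simulated responses standing in for real HTTP requests.
    responses = {
        "https://example.com/old": (301, "/new"),
        "https://example.com/new": (200, None),
    }
    print(redirects_into_noindex(
        "https://example.com/old",
        lambda url: responses[url],
        {"https://example.com/new"},
    ))
```

Passing `fetch` in as a parameter keeps the logic independent of any particular HTTP library, so you can try it with simulated responses before pointing it at a live site.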

Fix X-Robots Tag:

When your website sits behind a third-party service such as a Cloudflare firewall or CDN, make sure the X-Robots-Tag header it sends does not contain ‘noindex’ before you change anything in the base files.
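Once you have the response headers for a page, the X-Robots-Tag check can be sketched as below. This is a simplified reading of the header, not a full parser: `x_robots_blocks_indexing` and `VALUED_DIRECTIVES` are names we made up for the example, and the function expects a list of raw header values because a response can carry the header more than once. It handles the optional crawler prefix (for example `googlebot: noindex`) and treats `none` as noindex.

```python
# Directives that carry their own "name: value" pair, so a colon after them
# is not a crawler prefix.
VALUED_DIRECTIVES = {
    "unavailable_after",
    "max-snippet",
    "max-image-preview",
    "max-video-preview",
}


def x_robots_blocks_indexing(header_values, user_agent="googlebot"):
    """Return True if any X-Robots-Tag value tells `user_agent` not to index the page."""
    for raw in header_values:
        value = raw.strip().lower()
        prefix, sep, rest = value.partition(":")
        prefix = prefix.strip()
        if sep and "," not in prefix and prefix not in VALUED_DIRECTIVES:
            # "botname: directives" form: the rule targets one named crawler only.
            if prefix != user_agent.lower():
                continue
            value = rest
        directives = {part.strip() for part in value.split(",")}
        if "noindex" in directives or "none" in directives:
            return True
    return False


if __name__ == "__main__":
    print(x_robots_blocks_indexing(["noindex, nofollow"]))
    print(x_robots_blocks_indexing(["bingbot: noindex"]))
```

If the function returns True for a page whose HTML robots tag says ‘index’, the noindex is most likely being added at the server, firewall, or CDN level, which is where the fix needs to happen.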

Our advice would be not to use cracked plugins that could cause severe damage to indexing.

Make Sure Your CMS Encourages Search Engine Visibility:

Make sure the CMS setting that discourages search engines from indexing the website is switched off.

If it is left on, it could be the reason your web pages are reported under Excluded by noindex tag.

Conclusion:

- Excluded by noindex tag is one of the most common index coverage statuses that appear in the Excluded section of Google Search Console.

- This issue usually happens when your web pages are marked ‘noindex’ in the robots tag.

- If you marked them ‘noindex’ yourself, this is not an issue, just a notification.

- When you find a page with ranking potential marked ‘noindex,’ you must fix it immediately.

- We hope the reasons and fixing methods we discussed were useful for you.

- If you need any technical SEO assistance, you can make an appointment with our team.