Updated on March 12, 2024

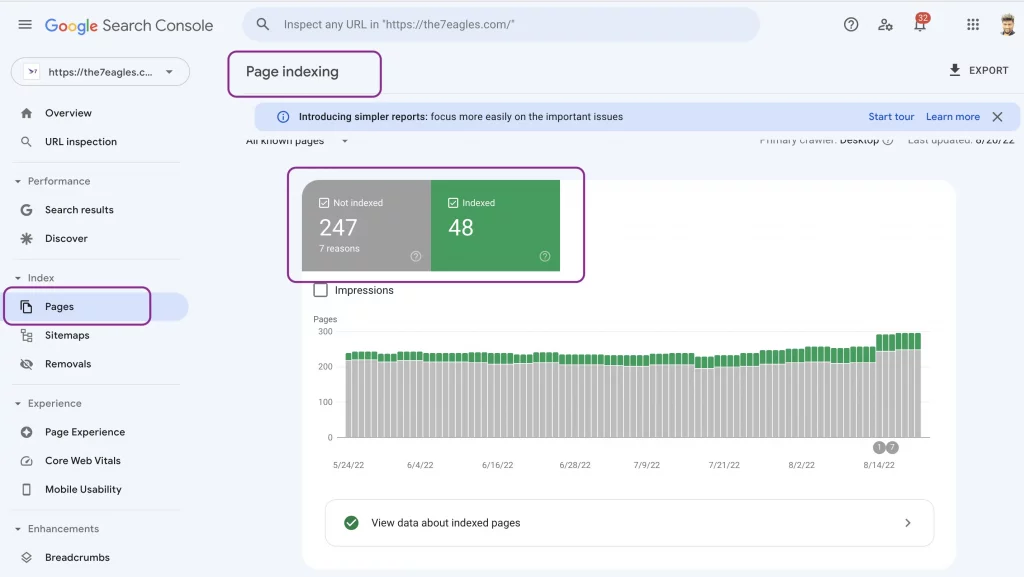

Worried about technical errors and how to fix them? The Index Coverage report in Google Search Console shows the crawling and indexing issues Google finds on your website, along with guidance on how to fix them.

Google also notifies site owners when it detects a serious new problem. However, these notifications are often delayed, so you shouldn’t rely on them alone to catch serious SEO issues.

What Are the Common Google Index Coverage Issues?

The most common index coverage issues that appear in Google Search Console are as follows:

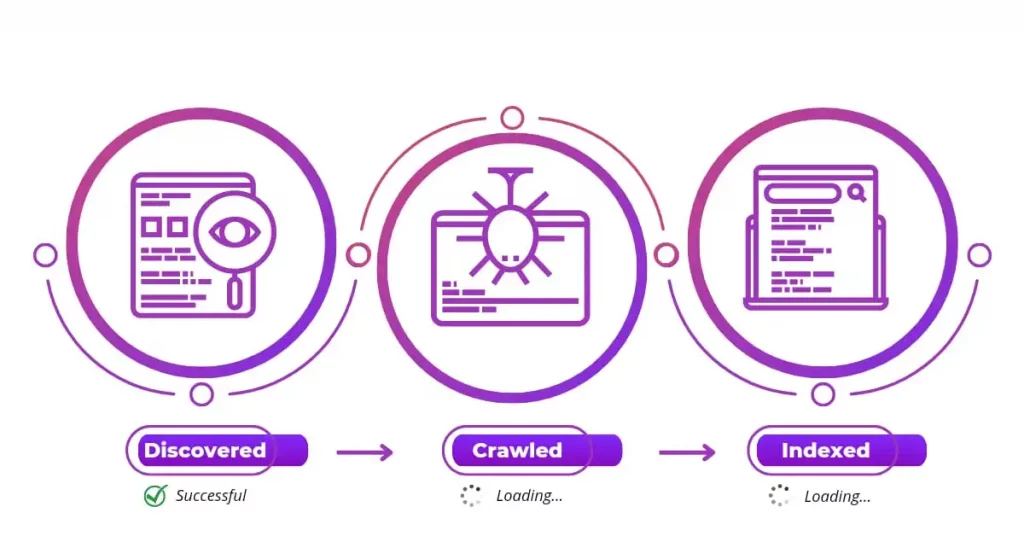

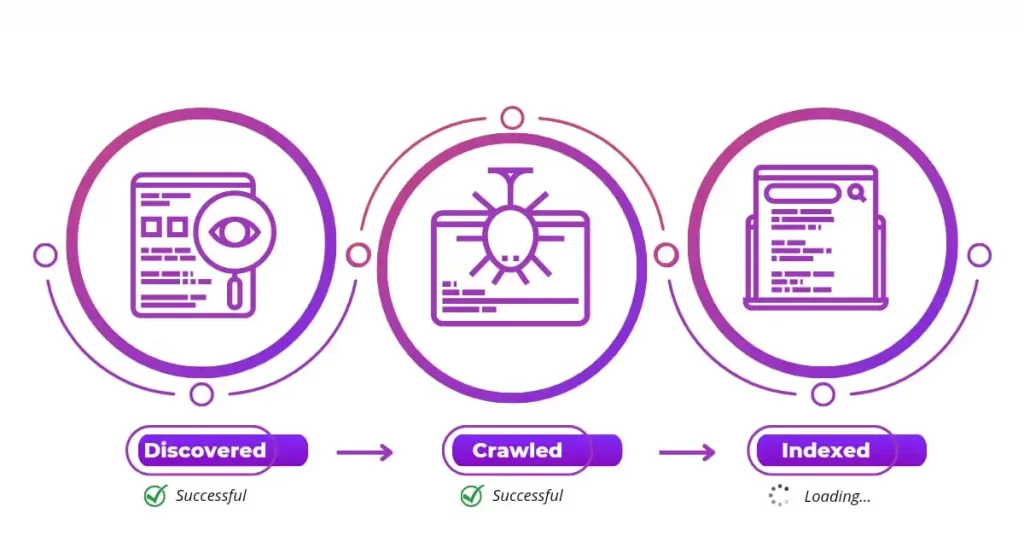

Discovered - currently not indexed

When Google has discovered a page but has not yet crawled or indexed it, the report lists the page as “Discovered - currently not indexed.”

Usually, Google wanted to crawl the URL but postponed the crawl because it expected that crawling might overload the site.

This is why the last crawl date on the report is blank.

Each page in the report has one of the following status values:

- Valid: The page is indexed.

- Error: The page could not be indexed. See the description of the specific error type to learn more about the error and how to fix it. Focus on these issues first.

- Valid with warning: The page is indexed, but there is an issue you should be aware of.

- Excluded: The page is not indexed, but Google believes that was your intention.

What causes this status?

- The site has more content than Google can crawl at once

- The server appears to be overloaded

- The internal link structure is weak

- The content quality appears to be low

Crawled - currently not indexed

Google crawled the page but did not index it. The page may or may not be indexed in the future, and there is no need to resubmit this URL for crawling.

- The primary crawler value on the summary page shows the default user agent type Google uses to crawl your website.

- The options are smartphone or desktop; these crawlers simulate visitors using a mobile device or a desktop computer.

- Google crawls all pages on your website using this primary crawler type.

- Google may also crawl a subset of your pages with the other user agent type, called the secondary (or alternate) crawler.

- For instance, if your site’s primary crawler is smartphone, its secondary crawler is desktop, and vice versa.

- The secondary crawl aims to gather more information about how your site behaves for users on the other device type.

Not Found (404):

- A 404 error means you explicitly asked Google to index a page, for example by listing it in your sitemap, but Google could not find it. The report lists these pages as errors.

- In general, fix only 404 errors, not 404 pages listed as excluded.

- Excluded 404 pages are ones that Google discovered through another method, such as a link from another website, rather than through an explicit request.

- If the page has moved, return a 3xx redirect (such as a 301) to the new URL. If the page has been removed permanently, return a 410 (Gone) status code so Google drops it from the index.
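To make the redirect and 410 options concrete, here is a minimal sketch of a WSGI app that uses only Python’s standard library. The `MOVED` and `GONE` tables and the example paths are hypothetical placeholders; on a real site you would normally configure these rules in your web server, CMS, or redirect plugin instead.

```python
from wsgiref.simple_server import make_server

# Hypothetical path tables; replace them with your own moved and deleted URLs.
MOVED = {"/old-guide": "/new-guide"}   # old path -> new path (301 redirect)
GONE = {"/discontinued-product"}       # permanently removed paths (410)


def app(environ, start_response):
    """Answer each request with the status code that matches the page's state."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        # Page moved: send users and Googlebot to the new URL.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in GONE:
        # Page deleted for good: 410 signals that it is gone permanently.
        start_response("410 Gone", [("Content-Type", "text/plain; charset=utf-8")])
        return [b"This page has been permanently removed."]
    if path == "/":
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [b"<h1>Home</h1>"]
    # Anything else really does not exist, so answer with a real 404.
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Page not found."]


def serve(port=8000):
    """Run a local test server on http://localhost:<port> (stop with Ctrl+C)."""
    with make_server("localhost", port, app) as server:
        server.serve_forever()
```

Calling `serve()` lets you request `/old-guide` or `/discontinued-product` in a browser and confirm the status codes before making the same change on your live server.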

Soft 404:

A soft 404 occurs when a URL returns a page telling the user that the requested page does not exist, but with a 200 (success) status code.

In other cases, it could be an empty page or a page with no main content.

Your website’s web server, content management system, or the user’s browser can produce such pages for several reasons. For instance:

- A server-side included file is missing.

- A database connection that isn’t working.

- A page with no results from an internal search.

- A JavaScript file that is missing or not loaded.

Returning a 200 status code while displaying or implying an error message is a poor user experience.

Users may believe the page is live and working until they see the error. Google Search excludes these pages from the index.

The Index Coverage report shows a soft 404 error when Google’s algorithms determine, based on its content, that the page is actually an error page.

To fix a soft 404, return a real 404 or 410 status code for pages that no longer exist, add helpful content to thin or empty pages, and fix bugs in your server-side code, themes, and plugins.
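Google does not publish exactly how it detects soft 404s, but you can roughly pre-screen your own pages for the patterns described above. The sketch below is a hypothetical heuristic, not Google’s method: it flags a 200 response whose visible text contains common error phrases or is shorter than an assumed minimum length. `ERROR_PHRASES` and `MIN_TEXT_CHARS` are illustrative values you would tune for your site.

```python
import re
from html.parser import HTMLParser

# Illustrative heuristics (assumptions, not Google's rules); tune them for your site.
ERROR_PHRASES = ("page not found", "no longer available", "no results found", "does not exist")
MIN_TEXT_CHARS = 200  # pages with less visible text are treated as "no main content"


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def visible_text(html):
    """Return the page's visible text with whitespace collapsed."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


def looks_like_soft_404(status, html):
    """Flag a 200 response that reads like an error page or has almost no content."""
    if status != 200:
        return False  # a real 404/410 is not a soft 404
    text = visible_text(html).lower()
    if any(phrase in text for phrase in ERROR_PHRASES):
        return True
    return len(text) < MIN_TEXT_CHARS
```

A flagged page is only a candidate: if it really is missing, return a 404 or 410; if it should exist, add the main content it is lacking.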

Submitted URL marked "noindex":

This index coverage error means you submitted a URL for indexing, typically through your XML sitemap or an indexing request, but the page contains a “noindex” directive, either in a robots meta tag or in an X-Robots-Tag HTTP header.

When Googlebot crawls such a page, the report lists it as “Submitted URL marked ‘noindex’.”

Here are a few ways to fix this error:

- If the page has ranking potential, change the robots directive to allow indexing by removing “noindex.”

- Then inspect the URL with the URL Inspection tool and request indexing.

- If the page should stay “noindex,” remove it from sitemap.xml. If the sitemap being served does not reflect the current state of the website, clear the cached copy of sitemap.xml.

- Then resubmit sitemap.xml in Google Search Console.

- Finally, click Validate Fix so Google can recrawl the affected URLs and confirm the errors are resolved.
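If you decide a page should stay “noindex,” the sitemap cleanup step can be scripted. This sketch uses Python’s `xml.etree.ElementTree` to drop `<url>` entries whose `<loc>` appears in a set of noindex URLs. How you build that set (for example, from a site crawl) is assumed and not shown, and the example URLs are placeholders.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"  # standard sitemap namespace
ET.register_namespace("", NS)  # write it back as the default namespace


def remove_noindex_urls(sitemap_xml, noindex_urls):
    """Return the sitemap XML without <url> entries whose <loc> is in noindex_urls."""
    root = ET.fromstring(sitemap_xml)
    # Iterate over a copy of the list because we remove elements while looping.
    for url in list(root.findall(f"{{{NS}}}url")):
        loc = (url.findtext(f"{{{NS}}}loc") or "").strip()
        if loc in noindex_urls:
            root.remove(url)
    return ET.tostring(root, encoding="unicode")


sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/keep</loc></url>"
    "<url><loc>https://example.com/private</loc></url>"
    "</urlset>"
)
cleaned = remove_noindex_urls(sample, {"https://example.com/private"})
```

After writing the cleaned file, resubmit it in Search Console as described in the steps above.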

Page with redirect:

- Redirects send users and search engines to a different URL from the one they originally requested.

- If you see “Page with redirect” in your Coverage report, Google found a URL on your website that redirects elsewhere and did not index it.

- Google keeps redirecting URLs like these out of search results to avoid errors and duplicate results.

- A related status, “Excluded by ‘noindex’ tag,” means Google tried to index the page but did not because it found a “noindex” directive.

- If you want this page to be indexed, remove the “noindex” directive.

- To verify that the tag or directive is present, request the page in a browser and look for “noindex” in the response body and response headers.
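The same check can be automated. This sketch looks for “noindex” in the `X-Robots-Tag` response header and in `<meta name="robots">` or `<meta name="googlebot">` tags, using only the standard library. It is a simplified check: it treats any header value containing “noindex” as a match, even one scoped to another crawler, and `check_url` needs network access to the placeholder URL you pass it.

```python
import urllib.request
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", "").lower())


def has_noindex(headers, html):
    """Return True if the response headers or the HTML carry a noindex directive."""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            return True
    parser = _RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return any("noindex" in directive for directive in parser.directives)


def check_url(url):
    """Fetch url and report whether it is marked noindex."""
    with urllib.request.urlopen(url) as response:
        html = response.read().decode("utf-8", errors="replace")
        return has_noindex(response.headers, html)
```

For example, `check_url("https://example.com/some-page")` returns `True` when either the header or a robots meta tag says “noindex.”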

Alternate page with proper canonical tag:

- This page is a duplicate of another page that Google recognizes as canonical. Because this page correctly points to the canonical page, there is nothing you need to do. GSC lists these pages as “Alternate page with proper canonical tag.”

- In other words, this status means Google has found duplicate pages that are correctly canonicalized.

- Many SEOs dismiss pages with this status immediately and move on.

- However, it is worth a closer look, because some of these pages may be ones you actually want indexed.

- Additionally, if you notice many pages with this status, your internal link structure may be weak, and there may be hidden crawl budget problems.

- A related status, “Duplicate, submitted URL not selected as canonical,” means the URL is one of a set of duplicate URLs without an explicitly marked canonical page.

- Although you explicitly requested that this URL be indexed, Google did not index it because it is a duplicate and Google considers another URL a better canonical candidate.

- Instead, Google indexes the canonical it selected.

- Within a set of duplicates, Google indexes only the canonical.

- This status differs from “Google chose different canonical than user” in that you explicitly requested indexing for this URL.

- Inspecting this URL should show the Google-selected canonical URL.

- Another status, “Duplicate without user-selected canonical,” means the page has duplicates, none of which is marked as canonical.

- Google does not consider this page to be the canonical one.

- You should explicitly mark the canonical for this page.

- Here, too, the URL Inspection tool should show which URL Google selected as canonical.
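To see which canonical a page declares, you can parse its `<link rel="canonical">` tag. The sketch below returns the declared canonical as an absolute URL, or `None` when the page declares none, which is the situation behind “Duplicate without user-selected canonical.” It only reads the HTML tag (not a canonical sent in an HTTP `Link` header) and cannot tell you which URL Google actually selected; the URL Inspection tool remains the source for that. The example URLs are placeholders.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.href is not None:
            return
        values = {name: (value or "") for name, value in attrs}
        rels = values.get("rel", "").lower().split()
        if "canonical" in rels and values.get("href"):
            self.href = values["href"].strip()


def canonical_url(html, page_url):
    """Return the absolute canonical URL declared in html, or None if missing."""
    parser = _CanonicalParser()
    parser.feed(html)
    parser.close()
    # Resolve relative hrefs such as "/shoes/" against the page's own URL.
    return urljoin(page_url, parser.href) if parser.href else None
```

Running this over a set of duplicate URLs quickly shows which pages still need an explicit canonical.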

- A separate error, “Submitted URL blocked due to other 4xx issue,” means Googlebot could not access a submitted URL because the server returned a 4xx response code other than 401, 403, or 404. Registered 4xx codes range from 400 to 451.

- The 4xx error the server returned is not covered by any other issue type in the report.

- Use the URL Inspection tool to identify the exact issue. The most common ways to fix 4xx errors are:

- Clearing your browser’s cookies and cache.

- Checking the URL for spelling mistakes.

- Reloading the page.

- Not submitting the page, or excluding it from indexing.

- A related status, “Blocked by robots.txt,” means a robots.txt rule prevents Googlebot from crawling the page. You can confirm this with the URL Inspection tool or the robots.txt report in Search Console.

- Be aware that blocking crawling does not prevent the page from being indexed by other means.

- Google may still index the URL if it finds information about it elsewhere, such as links from other pages, without loading the page itself, though this is less common.

- To keep a page out of Google’s index, remove the robots.txt block and use a “noindex” directive instead; Googlebot has to be able to crawl the page to see that directive.

- Check out our article on fixing the “Blocked by robots.txt” issue.
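You can also check a robots.txt rule locally with Python’s `urllib.robotparser`. Treat this as an approximation: the standard-library parser applies the first matching rule and does not support the `*` and `$` path wildcards the way Googlebot does, so confirm any surprising result in Search Console. The rules and URLs below are made-up examples.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from a string, no network
    return parser.can_fetch(user_agent, url)


# Example rules: every crawler is blocked from /private/.
rules = "User-agent: *\nDisallow: /private/\n"
```

Here `googlebot_allowed(rules, "https://example.com/private/page")` returns `False`, which is the situation that produces a “Blocked by robots.txt” status.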

Wrap Up:

These are some of the most common statuses in Google’s Index Coverage report. Keep these errors and their fixes in mind when working on your own website or a client’s website.

If you need help fixing technical errors, explore our Technical SEO services and request a quote; we are ready to help.

Frequently Asked Questions

How do I fix Google Index coverage issues?

To fix index coverage issues, check the page indexing errors in Google Search Console, open the affected pages, and resolve each issue with the appropriate fix.

For example, if the error is Not found (404), you can delete the page or return a 410 (Gone) status code to remove it from the index.

What is the Index Coverage report?

The Index Coverage report (now called the Page indexing report) is a Google Search Console report that shows the crawling and indexing status of the pages on your website.

Why does Google remove indexed pages?

Google may remove indexed pages because of low-quality content, technical issues such as broken links, security risks such as malware, guideline violations, noindex directives, or removal requests.

What is the Google index removal tool?

The Google index removal tool (the Removals tool in Search Console) lets website owners request the removal of specific URLs from Google’s search results. It’s useful for quickly removing outdated or sensitive content. However, the removal is temporary and doesn’t guarantee permanent removal.