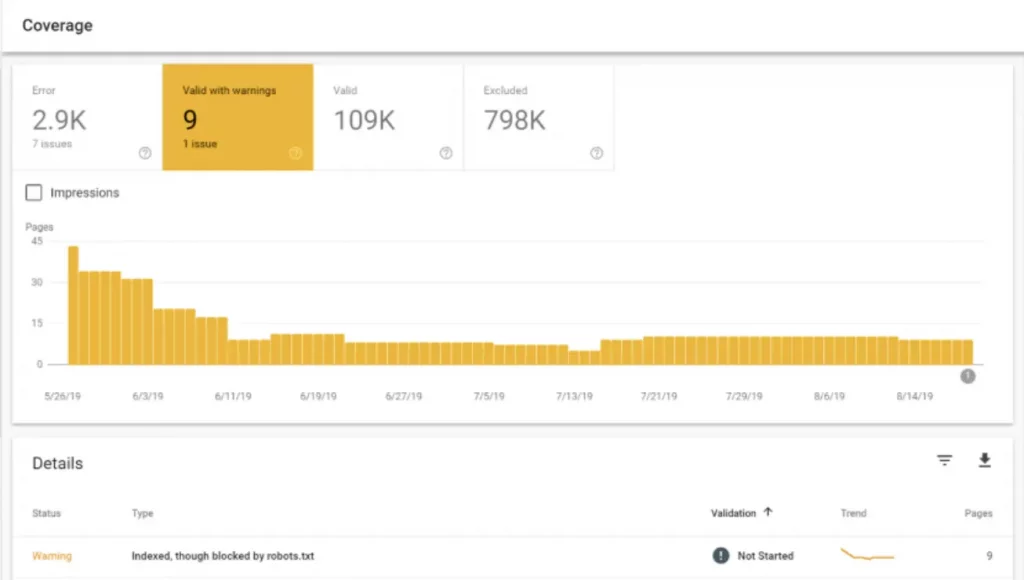

Multiple Index coverage issues could exclude your website and web pages from indexing. Have you come across content that is indexed though blocked by robots.txt under valid with warning in Google Search Console?

Technical errors in the base file or robots.txt or user-agent could block the indexed web pages.

In such a case, the indexed web page appears in a search snippet without a description. The SERP denotes the description as:

A description for this result is not available because of this site’s robots.txt.

We will share complete detail on what is this coverage issue, what are the reasons, and ways to fix the issue.

What Does Indexed Though Blocked by Robots.txt Mean:

Google has confirmed that web pages can be indexed even if they are blocked by robots.txt, if internal or external web pages refer to them.

When a web page blocked by robots.txt has one or more referring web pages, it comes under the Google coverage issue indexed though blocked by robots.txt.

This Index coverage issue appeared in the Google Search Console when commended a web page for being blocked by robots.txt, yet it is indexed.

It confuses Google whether the web page has to be indexed or excluded from indexing. So, the web page is indexed with a warning (valid with warning).

Here are the ways to check the web pages with this index coverage issues:

Step 1 – Login Google Search Console, and select your property.

Step 2 – Select coverage to check all the index coverage reports.

Step 3 – Check valid with warning, to get the details of web pages under this issue.

What Is Robots.txt?

Robots.txt is a text file with an extension of .txt that must be included in a website’s base file. This file commands instructions to crawlers on what pages to exclude from crawling and what crawlers should exclude from crawling the website.

Robots.txt has 4 important components. User-agent, allow, disallow, sitemap.xml.

Robots.txt appears as follows:

user-agent: *

allow: /

disallow: /employees/

sitemap: https://yourdomain.com/sitemap.xml

Reasons That Could Cause This Coverage Issue?

Before moving down to the reasons for this issue that warns indexing, you should validate the web page under two conditions:

- Is the web page should be blocked from crawling?

- Should this web page be indexed?

For this, you should export the details of web pages from Google Search Console. Then, start the validation process.

Potential web page blocked by Robots.txt

The page to be indexed will be referred by internal and external web pages (backlinks). This coverage issue happens if your robots.txt blocks this web page from crawling.

Web pages blocked by Robots.txt with referring pages:

When the web page is blocked by robots.txt, it commands crawlers to skip crawling for that page.

If internal or external pages refer to the web page, Google will index the page but still be disallowed by the crawler.

This is another reason for this coverage issue and vice versa of the reasons above.

Wrong URL format

These issues could generally arise CMS (content management system), as they create a URL that doesn’t have any page. It could be a search attribute attached to the URL. Like,

https://yourdomain.com/search=?seo

Crawlers will skip these URLs from crawling due to improper structure of the URL. If you have useful content that could serve the purpose of the users, then the URL should be changed.

Meta Robots Tag directives blocking a page from indexing

This might also be a reason for this coverage issue. Apart from Robot.txt, noindex robots tag directives also can cause this issue.

Similarly, this coverage issue arises when you discourage your website from being visible to search engines.

How to fix indexed though blocked by Robots.txt?

Here are a few steps that help in fixing this coverage issue.

Step 1 – Export all the URLs from the Google Search Console.

Step 2 – Validate the web page to be indexed and web pages not to be crawled.

Step 3 – If the web page is to be excluded from crawling, then check whether any internal or external web pages refer to this web page.

Step 4 – If you find any web pages referring, please remove the links that point to the page that has to be excluded from crawling.

Step 5 – If you need a page to index, and it has indexed with this error, then you should validate the robots.txt.

Step 6 – Use Robots.txt tester tool to check whether the page is crawlable or blocked by robots.txt.

Step 7 – If you find that the web page is been blocked to crawl by search engine bots, then it’s time to fix the robots.txt.

user-agent: *

allow: /

disallow: /employees/

sitemap: https://yourdomain.com/sitemap.xml

Step 8 – In robots.txt, you have to validate user-agent, whether it blocks any crawler to crawl the complete website.

Step 9 – If it allows all crawlers to read the website, then you should check the disallow command, whether it blocks crawling. If so, remove the disallow command for that web page.

Step 10 – Also, validate the robots tag directive to index the web pages.

Step 11 – Once you have fixed this issue by all possible steps, do validate fix in Google Search Console.