Search engine crawlers read two kinds of instructions before they crawl and index a site: robots.txt and the robots meta tag.

Robots.txt tells each crawler which web pages or sections of a website it is allowed or disallowed to crawl.

In contrast, the meta robots tag tells crawlers whether to index a page or exclude it from the index, and whether to follow its links and pass link equity.

Let’s walk through the complete concept of the robots tag and the X-Robots-Tag HTTP header.

What Is Robots Meta Tag?

The robots tag is a meta directive placed in the head (<head>…</head>) section of a web page’s HTML. It tells crawlers whether to index or noindex the web page.

Meta directives give crawlers instructions on how and what to crawl and index. Pages without these directives are treated as index, follow by default, which can lead to unwanted pages being indexed.

Both robots.txt and the meta robots tag direct the work of crawlers, but they act at different stages and can conflict with each other.

Robots.txt is a file placed in the root directory of the website; it contains crawl directives and can point to the XML sitemap. In contrast, a meta robots tag is just a piece of HTML code.

It appears in the HTML file as follows:

<head>

<meta name="robots" content="index,follow">

</head>
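To see what a crawler would read from this tag, the directives can be pulled out of a page with a few lines of Python. This is only a minimal sketch using the standard library; the class and function names (RobotsMetaParser, robots_directives) are made up for illustration and are not part of any SEO tool.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {key.lower(): (value or "") for key, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # Split "index,follow" into individual, normalized directives.
            parts = attr_map.get("content", "").split(",")
            self.directives.extend(p.strip().lower() for p in parts if p.strip())


def robots_directives(html):
    """Return the list of robots directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<head><meta name="robots" content="index,follow"></head>'
print(robots_directives(page))  # ['index', 'follow']
```

An empty list means the page carries no robots meta tag.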

There are two types of Robots meta directives. One is the meta robots tag, and the other is the X-Robots Tag in the web server’s HTTP header.

Why Is the Robots Meta Tag Used in SEO?

The HTML robots tags are used to include or exclude web pages from indexing in search engines.

Robots meta tags are commonly used to keep the following kinds of web pages out of the index:

- Webpages at the staging environment.

- Thin pages or duplicate pages

- Admin and login pages.

- Add to cart, initiate checkout, and thank you page.

- Landing pages that are used for PPC campaigns.

- Search pages of the website.

- Promotional, contest, or product launch pages.

- Internal and sensitive data web pages.

- Category, tag web pages.

- Cached web pages.

To instruct crawlers in line with webmaster guidelines, you need to understand attributes, directives, and snippets.

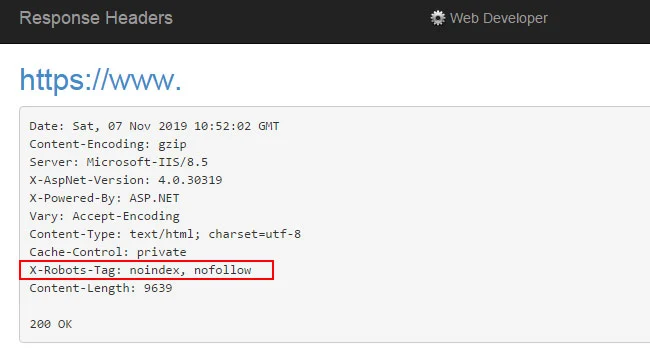

What Is X-Robots Tag?

The meta robots tag controls index/noindex for a single web page, but the X-Robots-Tag can keep a whole website or selected files from being indexed, because the server sends it in the HTTP response header.

X-Robots-Tag headers are usually set in server configuration files, header.php, or .htaccess files.

Both the robots tag and the X-Robots-Tag use the same directives, such as index, noindex, follow, nofollow, nosnippet, and noimageindex. Compared to meta tags, the X-Robots-Tag is more specific and flexible.

The X-Robots-Tag can apply directives to non-HTML files, because the server can attach the header to any response and select files with regular expressions.

Consider using the X-Robots-Tag in the following conditions:

- You want to prevent the indexing of images, videos, or PDFs on a page, not the web page itself.

- You want to index or exclude from indexing any file type other than HTML.

- You don’t have access to edit the <head> of the HTML file, but you can set the X-Robots-Tag on the server to include the directives.

# Assumed video extensions; adjust to your files. Requires mod_headers.

<Files ~ "\.(mp4|webm|mov)$">

Header set X-Robots-Tag "noindex, follow"

</Files>
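The Apache rule above picks files by a filename pattern and adds the header to their responses. The same idea can be sketched in Python for any server that builds its own response headers. The rule table, patterns, and function names here are assumptions for illustration only, not a real server API.

```python
import re

# Hypothetical rule table: (filename pattern, X-Robots-Tag value).
RULES = [
    (re.compile(r"\.pdf$", re.IGNORECASE), "noindex, nofollow"),
    (re.compile(r"\.(png|jpe?g|gif)$", re.IGNORECASE), "noindex"),
]


def x_robots_tag_for(path):
    """Return the X-Robots-Tag value for a path, or None if no rule matches."""
    for pattern, value in RULES:
        if pattern.search(path):
            return value
    return None


def response_headers(path):
    """Build the extra response headers for a requested path."""
    headers = {}
    value = x_robots_tag_for(path)
    if value is not None:
        headers["X-Robots-Tag"] = value
    return headers


print(response_headers("/files/report.pdf"))
print(response_headers("/index.html"))
```

HTML pages fall through without a header here, so they keep whatever their own robots meta tag says.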

Robots Meta Tag Directives:

To move forward in understanding the directives, let’s look at the structure of a meta robots tag once again.

<meta name="robots" content="index,follow">

In this, you have two attributes, and all the directives used are to be specified under these two attributes.

- Name

- Content

Name Attributes:

The name attribute specifies which crawler the robots tag applies to. It works the same way as the user-agent in robots.txt.

name="robots" - This command is for all the crawlers accessing the web page.

name="googlebot" - This command is only for Googlebot.

Most SEO experts use “robots” in the name attribute so that the meta tag applies to and controls all the crawlers.

When you target specific crawlers or user agents, the code needs one meta tag line per crawler.
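A small Python sketch can show how the name attribute selects which tags a given crawler reads. It assumes the tags have already been extracted as (name, content) pairs, and it simply merges the generic “robots” tag with the crawler-specific one. How a real search engine resolves conflicting directives is up to that engine, so treat the merge rule and the function name directives_for as illustrative assumptions.

```python
def directives_for(crawler, meta_tags):
    """Return the set of directives addressed to `crawler`.

    meta_tags: list of (name, content) pairs taken from a page's <head>.
    Tags named "robots" apply to every crawler; other tags apply only
    to the crawler whose name matches (case-insensitive).
    """
    selected = set()
    for name, content in meta_tags:
        if name.lower() in ("robots", crawler.lower()):
            selected.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )
    return selected


tags = [("robots", "nofollow"), ("googlebot", "noindex")]
print(sorted(directives_for("Googlebot", tags)))  # ['nofollow', 'noindex']
print(sorted(directives_for("Bingbot", tags)))    # ['nofollow']
```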

Here are the most common name attributes or user agents used worldwide.

robots -> All crawlers

Googlebot -> Google crawlers for desktop

Googlebot-News -> Google News crawlers

Googlebot-Image -> Google Image crawlers

Googlebot-Mobile -> Googlebot for mobile

AdsBot-Google -> Google Ads bot for desktop

AdsBot-Google-Mobile -> Google Ads bot for mobile

Mediapartners-Google -> AdSense bot

Googlebot-Video -> Googlebot for videos

Bingbot -> Crawlers by Bing for both desktop and mobile

MSNBot-Media -> Bing bot for crawling images and videos

Baiduspider -> Baidu crawlers for desktop and mobile

Slurp -> Yahoo crawlers

DuckDuckBot -> DuckDuckGo crawlers

Content Attributes:

The content attribute holds the command that instructs the crawler named in the name attribute.

You need a solid understanding of the various robots tag directives to follow a sound SEO strategy. The default value for the meta robots tag is “index, follow.”

Here are the commonly used directives in content attributes.

all -> It is the same as the default setting commanding to index, follow (can be used as a shortcut)

none -> Shortcut for noindex, nofollow

index -> Commands to index the web page

noindex -> Instructs the named crawler (user agent) to exclude the web page from indexing

follow -> Helps crawlers discover new web pages that are linked and pass link equity

nofollow -> Stops crawlers from following the links on the page to find new web pages and from passing link equity

nosnippet -> This command excludes meta description and other rich results visible in SERP

noimageindex -> Instructs the crawlers not to index images on the web page

noarchive -> Avoid showing a cached version of the page in SERPs

nocache -> Same as noarchive, but used only by MSN

notranslate -> Instructs search engines not to offer a translated version of the web page in SERP (Search Engine Results Page)

nositelinkssearchbox -> Prevents the website’s search box from being shown in SERP

nopagereadaloud -> Prevents voice services from reading the web page aloud during a voice search

unavailable_after -> Instructs crawlers to de-index the web page after a specified date and time

noodp/noydir -> Prevents search engines from using the DMOZ (noodp) or Yahoo Directory (noydir) description as the SERP snippet

You can check the complete list of all the directives supported by Google and Bing
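The shortcuts and defaults listed above can be made concrete with a short Python sketch that reduces a content value to two yes/no answers: may the page be indexed, and may its links be followed. The function name effective_policy is hypothetical, and the rule that a restrictive directive wins when both forms appear is a simplifying assumption.

```python
def effective_policy(content):
    """Resolve a content attribute into (index, follow) booleans."""
    index, follow = True, True  # default when nothing is specified
    for raw in content.split(","):
        directive = raw.strip().lower()
        if directive == "none":
            # Shortcut for noindex, nofollow.
            index, follow = False, False
        elif directive == "noindex":
            index = False
        elif directive == "nofollow":
            follow = False
        # "all", "index", and "follow" restate the default, so nothing changes.
    return index, follow


for value in ("all", "none", "noindex, follow", "index, nofollow"):
    print(value, "->", effective_policy(value))
```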

Meta Robots Tag Examples:

Here are examples of the robots meta tag that can enhance SEO practices.

1. Index and follow the link to other pages:

<meta name="robots" content="index, follow" />

or

<meta name="robots" content="all" />

2. Index but don’t follow the links:

<meta name="robots" content="index, nofollow" />

3. Don’t index and don’t follow the links:

<meta name="robots" content="noindex, nofollow" />

or

<meta name="robots" content="none" />

4. Don’t index but follow the links to other pages:

<meta name="robots" content="noindex, follow" />
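If these tags are produced by a template or a CMS plugin, the four combinations above come down to two switches. The following Python sketch builds the tag from them; robots_meta_tag is a hypothetical helper, not a function from any framework.

```python
from html import escape


def robots_meta_tag(index=True, follow=True, name="robots"):
    """Build a robots meta tag string from two boolean switches."""
    content = ", ".join([
        "index" if index else "noindex",
        "follow" if follow else "nofollow",
    ])
    # Escape the name in case it comes from user-supplied configuration.
    return f'<meta name="{escape(name, quote=True)}" content="{content}" />'


print(robots_meta_tag())                           # example 1
print(robots_meta_tag(follow=False))               # example 2
print(robots_meta_tag(index=False, follow=False))  # example 3
print(robots_meta_tag(index=False))                # example 4
```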

Role of Snippets and Directives in Robots Meta Tag:

The robots tag not only controls indexing and link following but also helps manage how snippets appear in SERP.

Here are the most common snippet directives used in meta robots tag.

nosnippet -> Prevents snippets or meta descriptions from being displayed in SERP.

max-snippet:[number] -> Sets the maximum number of characters a text snippet can contain.

max-video-preview:[number] -> Sets the maximum duration, in seconds, of a video preview shown in SERP.

max-image-preview:[setting] -> Sets the maximum size of an image preview to “none,” “standard,” or “large.”

How to use the snippet directives in the robots meta tag?

1. Don’t show the snippets of the web page in SERP:

<meta name="robots" content="nosnippet" />

2. Set the maximum number of characters in the description of the web page:

<meta name="robots" content="max-snippet:156" />

3. Set the size of the image preview in SERP:

<meta name="robots" content="max-image-preview:large" />

The image preview size can be none, standard, or large.

4. Set the maximum duration, in seconds, of the video preview in SERP:

<meta name="robots" content="max-video-preview:20" />

5. Using all the snippets in a single code:

<meta name="robots" content="max-snippet:156, max-video-preview:20, max-image-preview:large" />

When combining several snippet directives in a single tag, separate them with commas.
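To check a combined tag like the one in example 5, the content value can be split on commas and each directive validated. The sketch below is an illustration in Python; parse_snippet_directives is a hypothetical name, and unknown or malformed directives are silently skipped, which is a design choice of this example only.

```python
def parse_snippet_directives(content):
    """Parse snippet directives from a content attribute into a dict."""
    limits = {}
    for raw in content.split(","):
        directive = raw.strip().lower()
        if directive == "nosnippet":
            limits["nosnippet"] = True
            continue
        key, sep, value = directive.partition(":")
        if not sep:
            continue  # not a key:value directive; ignored in this sketch
        value = value.strip()
        if key in ("max-snippet", "max-video-preview"):
            try:
                limits[key] = int(value)  # characters or seconds
            except ValueError:
                continue  # malformed number; skip it
        elif key == "max-image-preview" and value in ("none", "standard", "large"):
            limits[key] = value
    return limits


combined = "max-snippet:156, max-video-preview:20, max-image-preview:large"
print(parse_snippet_directives(combined))
```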

Best Practices to Optimize Robots Meta Tag:

Crawling and indexing play a huge role in making your content visible in SERP, which is the goal of every SEO expert. On a large website, the crawl budget affects which of the important web pages get indexed.

The robots tag has a major role in crawl management. Here are a few tips for optimizing the meta robots tag without harming crawling and indexing.

Never add Robots Tag Directives to the web pages blocked by Robots.txt

If a page is disallowed in robots.txt, search engines usually do not crawl it. Because the page is never fetched, the crawlers cannot read the directives in its robots meta tag or X-Robots-Tag.

So a noindex directive on a blocked page has no effect, and the page can still end up indexed if other pages link to it.

We therefore recommend never adding meta robots tag directives to web pages that are blocked by robots.txt. If a page must be deindexed, let crawlers reach it so they can read the directive.
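This kind of conflict can be spotted automatically. The Python sketch below uses the standard library’s urllib.robotparser to find pages that carry robots directives but are disallowed in robots.txt. The example.com URLs, the sample robots.txt, and the helper names are assumptions made up for this illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_by_robots_txt(robots_txt, url, user_agent="*"):
    """Return True if robots.txt disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


def conflicting_pages(robots_txt, pages):
    """Return URLs that carry a robots directive but cannot be crawled.

    pages: dict mapping URL -> content attribute of its robots meta tag
    (empty string if the page has no tag).
    """
    return [
        url for url, content in pages.items()
        if content and blocked_by_robots_txt(robots_txt, url)
    ]


robots_txt = "User-agent: *\nDisallow: /private/\n"
pages = {
    "https://example.com/private/report.html": "noindex",
    "https://example.com/blog/": "index, follow",
}
print(conflicting_pages(robots_txt, pages))
```

Any URL the sketch reports needs either its Disallow rule removed or its robots tag dropped.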

Never use Meta Robots Tag Directives in Robots.txt:

In 2019, Google officially announced that it does not support robots tag directives like index, noindex, follow, and nofollow in robots.txt.

So, don’t include these directives in the robots.txt file.

Never Block the Whole Website from Indexing:

When a site moves to a live server, robots directives that were used in the staging environment can accidentally be left in place.

Make sure any robots directives that are in place are correct before moving a site from a staging to a live environment.

Similarly, a noindex directive in the X-Robots-Tag or robots meta tag can accidentally exclude the whole website from indexing.
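A pre-launch check for leftover noindex directives can be sketched in Python as follows. It assumes you already have a page’s response headers and HTML in hand. The regular expression is deliberately simple (it expects the name attribute before content and does not handle crawler-prefixed header values such as "googlebot: noindex"), and has_noindex is a hypothetical helper name.

```python
import re

# Simplified pattern: assumes name="robots" appears before content="...".
META_ROBOTS = re.compile(
    r'<meta[^>]*name=["\']robots["\'][^>]*content=["\']([^"\']*)["\']',
    re.IGNORECASE,
)


def has_noindex(headers, html):
    """Return True if the header or a robots meta tag blocks indexing."""
    header_value = ""
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            header_value = value
    meta_values = META_ROBOTS.findall(html)
    combined = ",".join([header_value] + meta_values).lower()
    directives = {d.strip() for d in combined.split(",")}
    # "none" is the shortcut for noindex, nofollow.
    return "noindex" in directives or "none" in directives


print(has_noindex({"X-Robots-Tag": "noindex, nofollow"}, ""))
print(has_noindex({}, '<meta name="robots" content="index, follow">'))
```

Running such a check against the home page and a few key templates before launch catches the most common staging leftovers.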

Never Remove Pages With a Noindex Directive From Sitemaps:

@nishanthstephen generally anything you put in a sitemap will be picked up sooner

— Gary 鯨理/경리 Illyes (@methode) October 13, 2015

When a web page is tagged with a noindex directive, never remove it from the sitemap immediately.

Web pages in the XML sitemap are given higher priority in crawling, so the crawler sees and obeys the robots tag instruction sooner.

Removing the web page from the XML sitemap before it gets deindexed can therefore prolong the process.
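A quick way to verify this is to compare the sitemap against the pages that are noindexed but not yet deindexed. The Python sketch below parses a sitemap with the standard library. The list of pending URLs is assumed to come from your own tracking, and the function names and example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(sitemap_xml, pending_deindex):
    """Return noindexed URLs awaiting de-indexing that the sitemap no longer lists."""
    return sorted(set(pending_deindex) - sitemap_urls(sitemap_xml))


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/old-offer</loc></url>"
    "</urlset>"
)
pending = ["https://example.com/old-offer", "https://example.com/old-contest"]
print(missing_from_sitemap(sitemap, pending))
```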

Conclusion:

The robots meta tag has a huge role in instructing crawlers whether to index or noindex a web page and whether to follow or nofollow its links, which controls the link equity passed between web pages.

As an SEO expert or SEO service company, you should be clear about the pros and cons of the meta robots tag.