Updated in April 2024

Every SEO professional and website owner knows that technical SEO is one of the main pillars for increasing organic visibility.

So why do so many websites fail to lead in the SERPs? A common reason is that they don’t follow a proven technical SEO checklist.

A checklist contains the list of items to check while performing the SEO process. You can either build a template like ours or hire us for the best technical SEO services.

A checklist gives you assurance that you are following the webmaster guidelines and completing the process with quality.

Our technical SEO checklist is based on what we do daily for ourselves and our clients. Let us get started!

What Is a Technical SEO Checklist?

A technical SEO checklist is a predefined list of the elements to review when optimizing the technical SEO of a website. These checklists are also used for technical SEO audits and for fixing errors.

Websites that meet certain technical requirements, such as being easy to crawl, fast, and secure, tend to perform better in search results.

Technical SEO ensures your website has better crawl accessibility, a clear site architecture, quick loading times, responsive pages, and more.

Below is a list of crucial actions you can take to improve your technical SEO performance. It is always a good idea to start with a technical SEO audit of your website.

Why Do You Need a Technical SEO Checklist?

Although on-page SEO, off-page SEO, and content are important, website owners often disregard technical SEO because it sounds “complex.”

A checklist is an integral part of technical SEO practice.

When the destination is too far, you should split the journey into milestones.

The same applies to optimizing the technical side of any web page. Technical SEO focuses on two pillars: crawl accessibility and user experience.

Within these two pillars, there are 30+ checklist items (milestones).

When a website owner or SEO professional clearly understands these milestones, the journey becomes simple and the destination achievable.

Whether you work on one part of SEO or the full 360 degrees, you should have a checklist that makes your process smarter and delivers results that meet your client’s requirements.


Proven Technical SEO Checklist for 2024:

Our technical SEO checklist for better crawl accessibility and user experience includes:

- Google Search Console & Bing Webmaster Tools setup

- Crawl Errors

- Index Coverage Report

- Plan a Site Architecture with Mind Mapping

- Make sure you use an optimized robots.txt

- Make sure your website has a sitemap.xml

- SEO Web Hosting

- Website Loading Speed

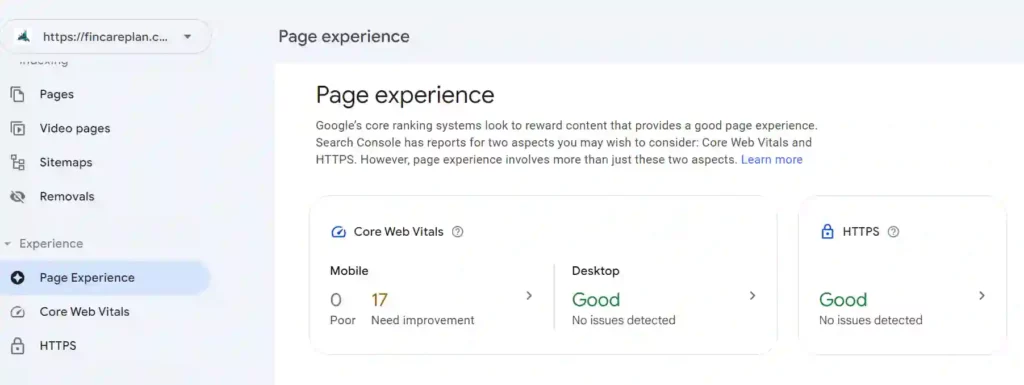

- Make sure 100% of your web pages pass Page Experience (mobile & desktop)

- Core Web Vitals

- Mobile Friendliness

- HTTPS

- Safe browsing

- Intrusive Interstitials

- Broken Links

- Server Errors

- Canonical Tag or URL

- Structured Data (Schema Markup)

- Breadcrumbs

- Hreflang Tag

Set Up Google Search Console and Bing Webmaster Tools

Crawl Errors

The first step in technical SEO work or a technical audit is to check for crawl errors.

You probably know how search engines work: crawling, rendering, indexing, and ranking. Everything relies on crawling; without it, indexing and ranking never happen.

When a search engine fails to crawl a web page, the result is a crawl error. Common causes include:

- Blocked by Robots.txt

- A website without a sitemap or proper internal linking structure

- Redirect loops or long redirect chains

- Client errors (4xx), such as 404 Not Found

- Server errors (5xx)

During crawling, search engines use a bot to visit each page of your website.

When a search engine bot follows a link to your website, it begins to look for all of your public pages.

While crawling, the bot fetches the content of each page so Google can process it for indexing, and it adds every link it finds to the list of pages it still needs to crawl.

As a website owner, your major objective is to ensure the search engine bot can access every page you want indexed. A crawl error indicates that Google had trouble accessing the pages of your website.

This can harm your SEO by preventing the affected pages from being indexed and ranked.
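Here is a minimal breadth-first crawler sketch that makes the crawl loop above concrete. It is a simplified model and not how Googlebot is implemented. The hypothetical `SITE` dictionary stands in for real HTTP requests and maps each path to a status code and the links found on that page.

```python
from collections import deque

# Hypothetical site: path -> (HTTP status code, links found on that page).
SITE = {
    "/": (200, ["/blog/", "/services/"]),
    "/blog/": (200, ["/blog/post-1/", "/blog/old-post/"]),
    "/services/": (200, ["/"]),
    "/blog/post-1/": (200, ["/blog/"]),
    "/blog/old-post/": (404, []),
}

def crawl(start="/"):
    """Fetch pages breadth-first, queue newly found links, and record crawl errors."""
    frontier = deque([start])  # pages the bot still needs to fetch
    seen = {start}             # pages already queued, so each is fetched once
    crawled, errors = [], {}
    while frontier:
        path = frontier.popleft()
        status, links = SITE.get(path, (404, []))  # unknown paths behave like 404s
        if 200 <= status < 300:
            crawled.append(path)
            for link in links:
                if link not in seen:
                    seen.add(link)
                    frontier.append(link)
        else:
            errors[path] = status  # the bot could not fetch this page: a crawl error
    return crawled, errors

crawled, errors = crawl()
print(crawled)  # ['/', '/blog/', '/services/', '/blog/post-1/']
print(errors)   # {'/blog/old-post/': 404}
```

Any page that returns a non-2xx status lands in `errors`. That is the kind of problem Search Console reports as a crawl error.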

These problems are easy to locate in the Page indexing report (formerly the Coverage report) of Google Search Console.

The primary step in the technical SEO checklist is to open:

- Google Search Console

- Bing Webmaster Tools

These two free technical SEO tools help diagnose and report technical issues on every web page.

These tools provide the following technical reports:

- Coverage Issues

- Page Experience and Core Web Vital reports

- Mobile Usability Reports

- Crawl Stats

- Removal Tool

- Sitemap

- Enhancements

Once you have the details of the errors or issues, you can act fast to fix them yourself or hire a technical SEO company to fix them.

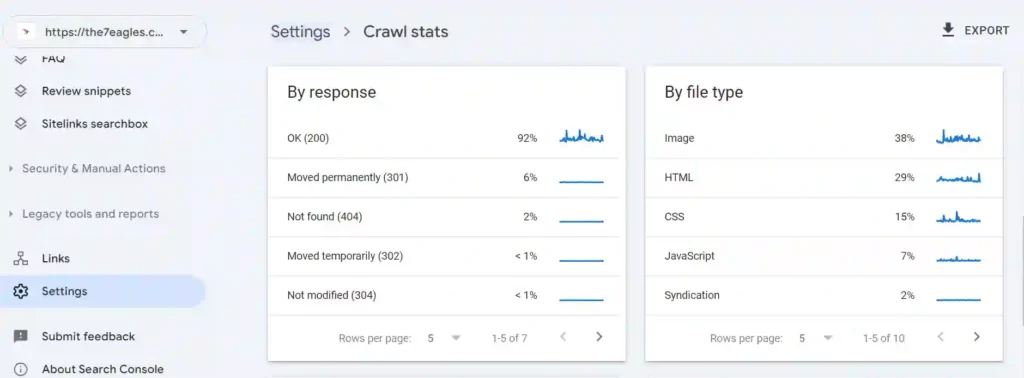

The Google Search Console screenshot above shows crawl status broken down by HTTP response code:

- OK (200) & other 2xx – The web page can be indexed

- Not Found (404) – Page Not Found – Crawl Error

- 301 & 302 – Redirects – Redirect loops, and chains longer than Googlebot will follow (up to 10 hops), are crawl errors

- Other 4xx – Crawl Error

- Server Errors 5xx – Crawl Errors
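You can spot redirect problems like those listed above by following each chain hop by hop. This sketch uses a hypothetical `RESPONSES` map in place of live requests. It reports a loop, or a chain that exceeds the 10-hop limit Google documents for Googlebot.

```python
# Hypothetical responses: URL -> (HTTP status, Location header or None).
RESPONSES = {
    "http://yourdomain.com/page": (301, "https://yourdomain.com/page"),
    "https://yourdomain.com/page": (301, "https://yourdomain.com/page/"),
    "https://yourdomain.com/page/": (200, None),
    "https://yourdomain.com/a": (302, "https://yourdomain.com/b"),
    "https://yourdomain.com/b": (302, "https://yourdomain.com/a"),
}
REDIRECT_CODES = {301, 302, 307, 308}
MAX_HOPS = 10  # Googlebot follows up to 10 redirect hops

def follow_redirects(url, max_hops=MAX_HOPS):
    """Return (last URL, its status, hops taken, problem); problem is None if the chain is healthy."""
    chain = [url]
    while True:
        status, location = RESPONSES.get(url, (404, None))
        if status not in REDIRECT_CODES:
            return url, status, len(chain) - 1, None
        if location in chain:
            return url, status, len(chain) - 1, "redirect loop"
        if len(chain) - 1 >= max_hops:
            return url, status, len(chain) - 1, "too many hops"
        chain.append(location)
        url = location

print(follow_redirects("http://yourdomain.com/page"))
# ('https://yourdomain.com/page/', 200, 2, None)
print(follow_redirects("https://yourdomain.com/a"))
# ('https://yourdomain.com/b', 302, 1, 'redirect loop')
```

A healthy two-hop chain still costs an extra crawl request, so pointing internal links straight at the final URL is the cleaner fix.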

If you find crawl errors on your website, act fast and fix them at the earliest possible time.

The ship cannot sail until crawl errors are fixed.

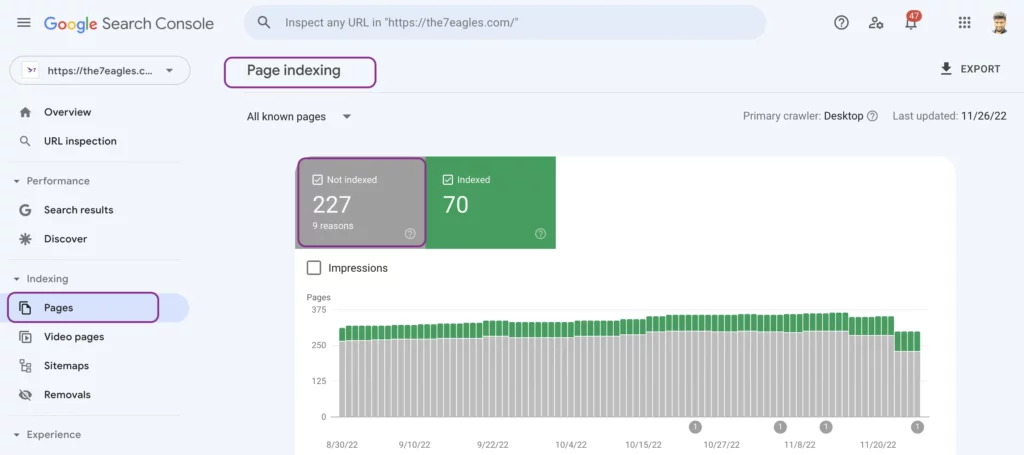

Google Index Coverage Report & Issues:

The Google index coverage report is the main source for finding crawl accessibility errors. It lists the issues that stop a web page from being crawled and indexed.

When Googlebot fails to crawl or index a web page, the page is reported in the index coverage report in Google Search Console.

Index coverage issues can push a page to the bottom of the crawl priority list and waste the website’s overall crawl budget.

So, all the errors listed under Not indexed are part of the technical SEO checklist and should be addressed promptly.

When you see pages with errors, warnings, or an excluded status, it’s time to look at the issue and the affected page. The following are the Google index coverage issues.

Discovered - Currently not Indexed:

“Discovered – currently not indexed” means Googlebot has discovered a web page but has not crawled it yet. Google discovers new URLs through sitemap.xml or referring links.

This is one of the more harmful coverage issues and should be fixed immediately. The following conditions could cause it:

- Blocked by Robots.txt

- Server Overload

- An issue with Sitemap.xml

- Orphan Page

- Duplicate Page

- Low-Quality Page

Find which of the reasons listed above applies and fix it so the page can be crawled and indexed.

Crawled - Currently not Indexed:

“Crawled – currently not indexed” means Googlebot discovered and crawled a web page but did not index it. The crawler reached the page through a proper sitemap.xml or a referring link.

Website owners create every web page to rank in the SERPs and attract organic visitors. To rank, a page must be indexed, so technical SEO experts should address this coverage issue immediately.

The following conditions could cause it:

- Excluded by the robots meta tag

- An issue with Sitemap.xml

- Duplicate Page

- Low-Quality Content

Find which of the reasons listed above applies and fix it so the web page is stored in the search engine’s index.

Not found (404):

When a web page returns the 404 (page not found) response code, it appears under the Not found (404) coverage issue.

If an important page appears under the 404 HTTP response code, resolve it right away.

If a page no longer exists but Googlebot still crawls it, check whether the URL has any referring pages.

If other pages on your website link to the 404 URL, remove those internal links. This saves crawl budget.

If the URL is listed in sitemap.xml, remove it. Finally, serve HTTP 410 (Gone) for that URL so Google understands the page has been removed permanently.

This moves the URL down the crawl priority list.
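Returning 410 is usually configured in the web server or CMS rather than in application code. As an illustration only, here is a minimal Python WSGI app. It answers `410 Gone` for a hypothetical set of removed paths and `200 OK` for everything else.

```python
# Hypothetical paths that were removed permanently.
GONE = {"/old-offer/", "/2019-event/"}

def app(environ, start_response):
    """Answer 410 Gone for permanently removed paths and 200 OK otherwise."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/plain; charset=utf-8")]
    if path in GONE:
        start_response("410 Gone", headers)
        return [b"This page has been permanently removed."]
    start_response("200 OK", headers)
    return [b"Welcome!"]
```

To try it locally, you could pass `app` to `wsgiref.simple_server.make_server` from the standard library.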

Soft 404:

A soft 404 occurs when a page returns a 200 (OK) HTTP response code even though its content says the page does not exist, or the page is essentially empty.

This mismatch between the status code and the content confuses search engines.

Such pages waste crawl budget and can harm your site’s crawling and indexing.

To fix a soft 404 for a page that no longer exists, return a 404 or 410 (Gone) status code instead.

If a page that does exist is reported as a soft 404, submit its URL in the sitemap, then increase the number of internal links and backlinks pointing to it.

Soft 404s can also occur when content is thin. If that is the case, improve the content.
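Google does not publish how it detects soft 404s, so the check below is only a heuristic sketch for your own audits. The phrases in `ERROR_PHRASES` and the `MIN_WORDS` threshold are assumptions that you should tune for your own page templates.

```python
import re

# Hypothetical signals; tune them to your own templates.
ERROR_PHRASES = ("page not found", "no longer available", "0 results found")
MIN_WORDS = 150  # assumption: pages shorter than this are treated as thin

def looks_like_soft_404(status, text):
    """Flag pages that answer 200 OK but read like an error page or have thin content."""
    if status != 200:
        return False  # a real 404/410 is a hard error, not a soft 404
    lowered = text.lower()
    if any(phrase in lowered for phrase in ERROR_PHRASES):
        return True
    return len(re.findall(r"\w+", text)) < MIN_WORDS
```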

Submitted URL marked ‘noindex’:

This coverage issue occurs when a web page listed in sitemap.xml has a noindex robots directive.

If a URL is meant to stay noindex, remove it from sitemap.xml.

If a page you want to rank appears under this coverage issue, inspect the page’s robots meta tag and its X-Robots-Tag HTTP header to find whether it is set to noindex.

If it is marked noindex, change it to index.

Then run URL Inspection in Google Search Console, followed by Test Live URL.

If the live test shows the page is available to Google, click Request Indexing. The issue is resolved once Googlebot crawls the submitted URL.
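A noindex directive can come from either the robots meta tag or the `X-Robots-Tag` HTTP header, so it helps to check both. Here is a minimal sketch using Python's standard `html.parser`. The class and function names are hypothetical. You would pass in the HTML and response headers you fetched yourself.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""
    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += [d.strip().lower() for d in attrs.get("content", "").split(",")]

def is_noindex(html, headers):
    """True if the robots meta tag or the X-Robots-Tag header blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    # Header values may carry a user-agent prefix, e.g. "googlebot: noindex".
    header_directives = [d.split(":")[-1].strip().lower() for d in header.split(",")]
    blocking = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow
    return bool(blocking & set(parser.directives + header_directives))
```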

Check out the complete guide on fixing the submitted URL marked ‘noindex’

Page with Redirect:

Servers commonly correct small URL variations with redirects, such as:

https://yourdomain.com to https://yourdomain.com/

http to https, http://www to https, http://www to https://www

https://yourdomain.com/blog/page1 to https://yourdomain.com/blog/

If these kinds of URLs appear under Page with redirect, you don’t need to worry; this won’t hurt your SEO.

But if a page you want to rank redirects to another destination, it’s time to check and fix the canonical URL of the page.

Excluded by ‘noindex’ tag:

This Google index coverage issue means the web page was excluded from indexing by a noindex robots directive.

Most website owners want to keep some web pages out of search engine indexes.

They can exclude those pages with a noindex robots meta tag.

For such pages, it is normal to see the Excluded by ‘noindex’ tag coverage issue.

If a page that should be indexed appears under this coverage issue, it’s time to tie your shoes.

To resolve this, change the robots tag from noindex to index.

Then request indexing through URL Inspection in Google Search Console.

At the same time, remove any pages you have intentionally tagged noindex from the sitemap.

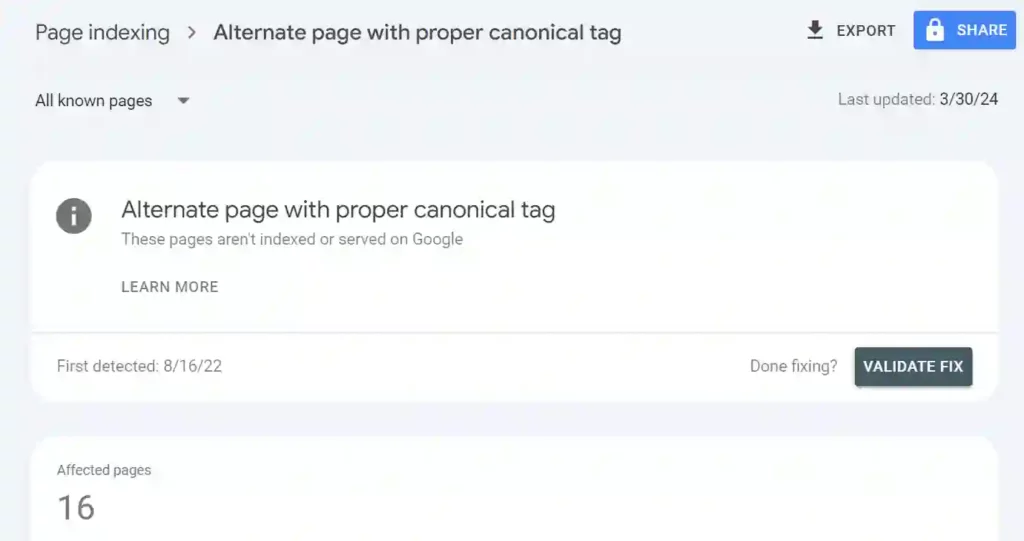

Alternate page with proper canonical tag:

This coverage issue happens when two pages have similar content and Google treats only one of them as canonical.

The other page is treated as a duplicate and excluded from indexing, which the report labels Alternate page with proper canonical tag.

In this case, check whether the excluded page is one you want to rank. If so, use URL Inspection to check which canonical Google has selected.

You can change the canonical tag (`<link rel="canonical" href="https://yourdomain.com/page/">`) in the `<head>` of the HTML code.

If a duplicate page has been selected as the canonical, remove the duplicate URL from the sitemap and submit the original URL.

Also, point the duplicate page’s canonical URL to the original, and redirect the duplicate page to the original one.

Duplicate, submitted URL not selected as canonical:

When you submit a web page to Google without a canonical tag and Google considers it a duplicate, the page is excluded from indexing under this coverage issue.

In this situation, the best fix is to provide a proper canonical tag for the web page.

Duplicate, Google chose different canonical than user:

This coverage issue is similar to the one above, Duplicate, submitted URL not selected as canonical.

The difference here is that you did provide a canonical tag, but Google did not accept it as the “canonical.”

To fix this, give the proper canonical URL to the web page you need to index and ensure you add the web page to the sitemap.xml.

If the canonical URL Google selected is not the original, assign the correct canonical to the page.

Then redirect the page to the original page and remove it from the sitemap.

Blocked due to other 4xx issue:

We have already covered one 4xx issue: Not found (404).

Any web page that returns a 4xx response code other than 404 is excluded under Blocked due to other 4xx issue.

Common examples of other 4xx errors:

- 400 – Bad Request

- 401 – Unauthorized

- 403 – Forbidden

- 410 – Gone (content permanently removed)

- 429 – Too Many Requests

If a web page returns any of these response codes, it will not be indexed.

So, if the page should be indexed, fix it so it returns HTTP 200.

Blocked by robots.txt:

Web pages blocked by a rule in robots.txt cannot be crawled, so they are excluded from coverage.

If a page you need indexed is excluded under this coverage issue, check the robots.txt report in Google Search Console (or a robots.txt testing tool) to find whether a rule blocks the page.

If robots.txt blocks the page from crawling, update the rule in robots.txt.

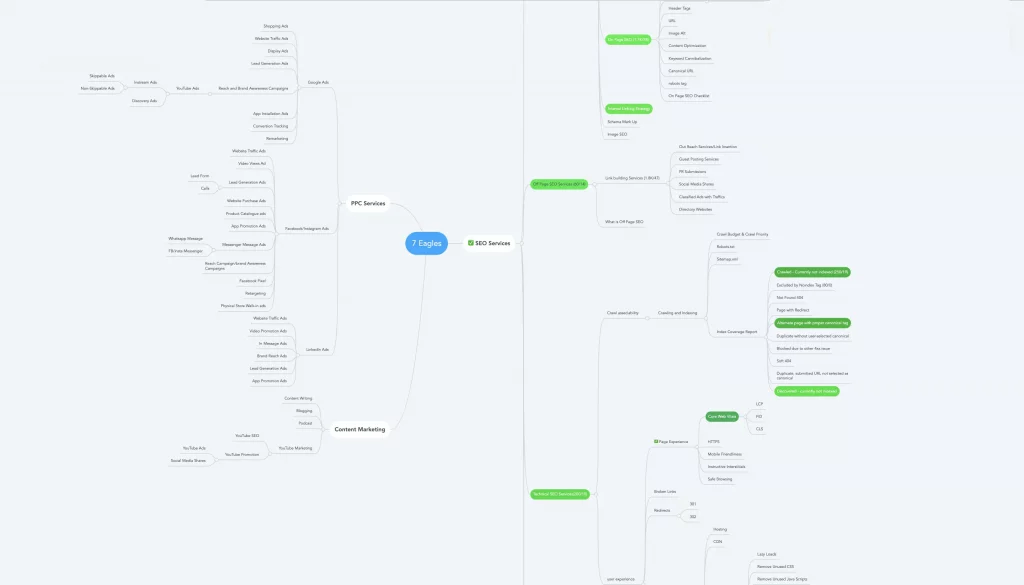

Plan a Site Architecture with Mind Mapping

Site architecture is a key area of technical SEO. You can plan your site architecture with a mind-mapping tool, as in the image above.

A planned site architecture helps you build strong internal links between web pages.

Site architecture helps with:

- New URL discovery by crawlers

- More thorough crawling and better crawl depth

- Building topical authority

- Creating topic cluster pages

- Easier navigation

- Longer dwell time and lower bounce rate

- Avoiding keyword cannibalization
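URL discovery and crawl depth from the list above can be measured with a breadth-first search over your internal links. This sketch uses a hypothetical `LINKS` graph and `SITEMAP` set. Sitemap URLs that are never reached are orphan pages. The 3-click limit is a common rule of thumb, not a Google requirement.

```python
from collections import deque

# Hypothetical internal links (page -> pages it links to) and sitemap entries.
LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/technical-seo/"],
    "/blog/": ["/blog/technical-seo-checklist/"],
    "/services/technical-seo/": ["/blog/technical-seo-checklist/"],
    "/blog/technical-seo-checklist/": [],
}
SITEMAP = set(LINKS) | {"/landing/spring-offer/"}  # listed in the sitemap, linked from nowhere

def click_depth(home="/"):
    """Breadth-first search: the fewest clicks needed to reach each page from the homepage."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in LINKS.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

depth = click_depth()
orphans = sorted(SITEMAP - set(depth))                   # never reached by internal links
too_deep = sorted(p for p, d in depth.items() if d > 3)  # rule-of-thumb threshold
print(orphans)   # ['/landing/spring-offer/']
print(too_deep)  # []
```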

Robots.txt

Robots.txt is one of the prime technical SEO checklist items. An optimized robots.txt helps you manage crawl budget better.

Crawl budget matters most when you have a large website, for example one with 10,000+ pages.

Robots.txt is a text file placed at the root of your domain (for example, https://yourdomain.com/robots.txt). It tells crawlers which pages to crawl and which to avoid.

Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it.

Optimize it carefully, as it plays a huge role in crawling and crawl budget management.

Here are a few things you should always check:

- Reference your sitemap.xml in robots.txt with a Sitemap: line.

- Never leave a bare Disallow: / rule unless you intend to block the whole site; it prevents crawlers from crawling the complete website.

- Don’t use special characters in path rules apart from the * and $ wildcards.

- Don’t leave the User-agent line empty.

- Remember that paths are case-sensitive: Disallow: /Blog/ does not block /blog/.
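You can sanity-check rules like these with Python's standard `urllib.robotparser`. The domain and rules below are examples. This parser matches paths by simple prefix and applies rules in file order. It does not implement Google's `*` and `$` wildcards or longest-match precedence, so treat it as a quick check rather than an exact emulation of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt for a hypothetical site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/

Sitemap: https://yourdomain.com/sitemap.xml
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

for url in ("https://yourdomain.com/blog/technical-seo-checklist/",
            "https://yourdomain.com/cart/checkout"):
    verdict = "allowed" if rules.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)

print(rules.site_maps())  # ['https://yourdomain.com/sitemap.xml']

# The mistake warned about above: a bare "Disallow: /" blocks the whole site.
block_all = RobotFileParser()
block_all.parse(["User-agent: *", "Disallow: /"])
print(block_all.can_fetch("Googlebot", "https://yourdomain.com/"))  # False
```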

There are many more guidelines to follow while optimizing robots.txt. Check out our complete guide on robots.txt.

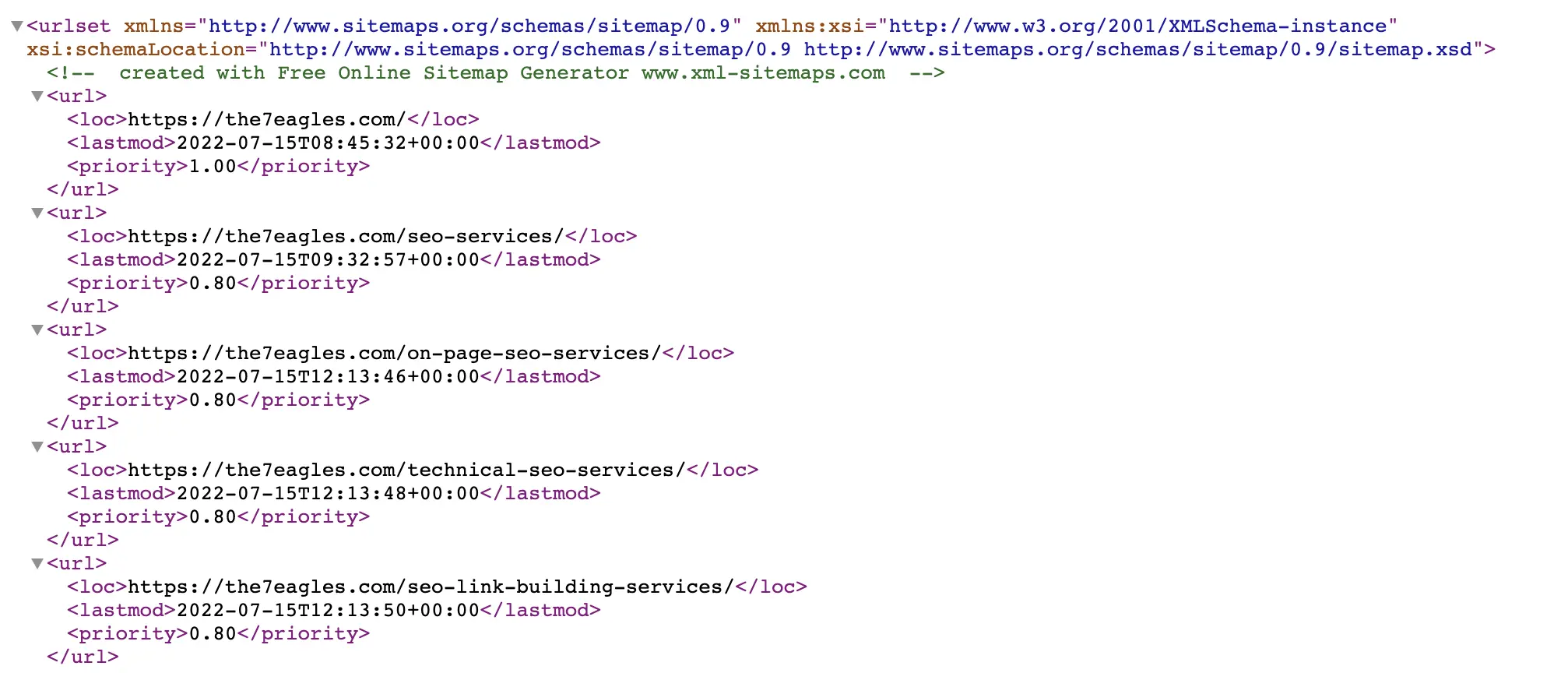

Sitemap.xml

Sitemap.xml is another important technical SEO checklist item that helps crawlers discover every web page.

There are two types of sitemaps: one with the “.html” extension and the other with “.xml.”

The sitemap.xml file is meant for search engines, while sitemap.html is for human visitors.

The sitemap.xml file is a collection of all the website URLs you intend to have indexed and ranked. Listing URLs in the sitemap helps search engines discover them and prioritize them for crawling, although it does not guarantee crawling or indexing.

If your website doesn’t have a sitemap.xml, you can generate one with a sitemap generator.

The main tags to check in sitemap.xml are the following:

<loc> – Location of the Web page

<lastmod> – Date the page’s content was last significantly modified

<changefreq> – How often the page is likely to change (Google ignores this value)

<priority> – Relative importance of the page within the website (Google ignores this value)
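Here is a sketch that generates a minimal sitemap with Python's standard `xml.etree.ElementTree`. The URLs and dates are placeholders. It writes only `<loc>` and `<lastmod>`, because Google ignores `<changefreq>` and `<priority>`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap.xml text from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # absolute, canonical URL
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date of the last content change
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

sitemap_xml = build_sitemap([
    ("https://yourdomain.com/", "2024-04-01"),
    ("https://yourdomain.com/blog/technical-seo-checklist/", "2024-04-15"),
])
print(sitemap_xml)
```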

For sitemap.xml best practices, check out our complete guide on sitemap.xml.

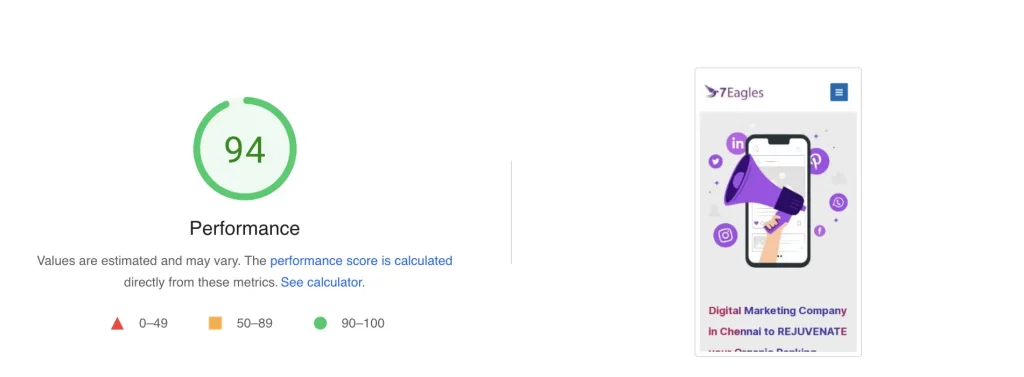

Website Loading Speed:

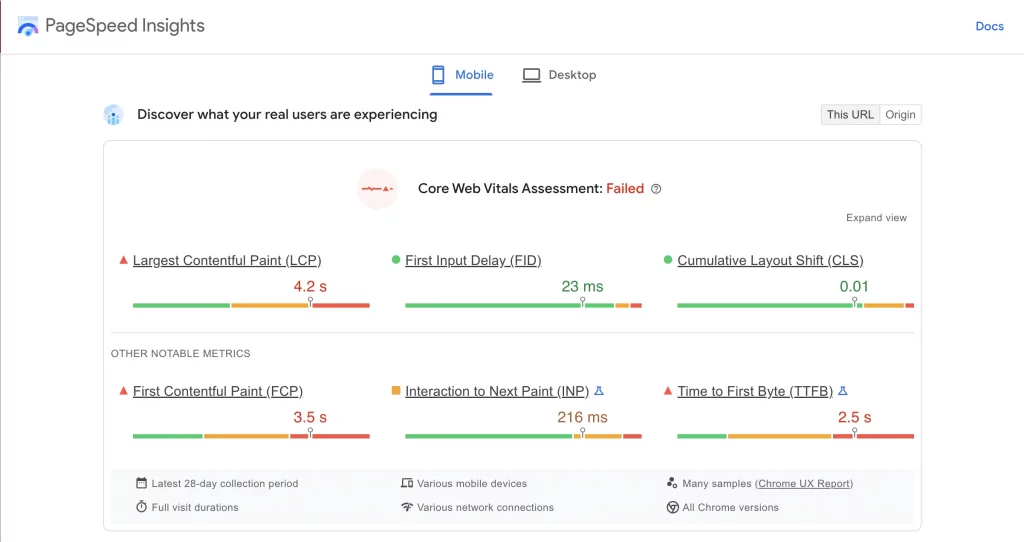

Site loading speed is one of the ranking factors, so mobile speed should be on every technical SEO checklist.

A website whose main content takes longer than 2.5 seconds to load on mobile (the Largest Contentful Paint threshold) risks losing rankings and tends to have a higher bounce rate.

A PageSpeed Insights test can evaluate your website’s loading performance.

A mobile performance score of 90 or above is considered good.

PageSpeed Insights also reports your Core Web Vitals, Speed Index, Total Blocking Time, and other metrics.

Our checklist covers the most common causes of slow loading:

- bulky images without proper dimensions,

- unused CSS files,

- unused JavaScript files,

- render-blocking resources,

- excessive DOM size,

- images that are not lazy-loaded,

- CSS files that are too large,

- JavaScript files that are too large.
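Two of the causes above, missing image dimensions and missing lazy loading, can be detected in a page's HTML. Here is a sketch using Python's `html.parser`; the sample markup is hypothetical. The lazy-loading flag is informational only, because lazy-loading the main above-the-fold image can delay LCP.

```python
from html.parser import HTMLParser

class ImageAudit(HTMLParser):
    """Lists <img> tags that lack explicit dimensions or native lazy loading."""
    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or "(no src)"
        if "width" not in a or "height" not in a:
            self.issues.append((src, "missing width/height"))  # browser can't reserve space
        if a.get("loading") != "lazy":
            self.issues.append((src, "no loading=lazy"))  # fine for above-the-fold images

audit = ImageAudit()
audit.feed('<img src="hero.jpg" width="1200" height="600">'
           '<img src="team.jpg" loading="lazy">')
for src, issue in audit.issues:
    print(src, "-", issue)
```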

Google Page Experience

Google rolled out page experience as a ranking signal for mobile in August 2021 and for desktop in February 2022.

It evaluates the real-world user experience of a web page using five signals:

- Core Web Vitals

- Mobile Usability

- HTTPS

- Safe browsing

- Intrusive Interstitials

Page experience acts as a tie-breaker when deciding rankings between pages with similar relevance. So, aim for every page to pass Google’s page experience signals if you want to compete for the first page of search results.

Core Web Vitals

Core Web Vitals measure whether a page delivers a good user experience in terms of loading, interactivity, and visual stability:

- Largest Contentful Paint (LCP): Measures loading performance. To give users a positive browsing experience, LCP should occur within 2.5 seconds of when the page starts loading.

- Interaction to Next Paint (INP): Measures responsiveness. INP replaced First Input Delay (FID) as a Core Web Vital in March 2024. Aim for an INP of 200 milliseconds or less (the old FID target was under 100 milliseconds).

- Cumulative Layout Shift (CLS): Measures visual stability. Aim for a CLS score of 0.1 or less to offer a positive user experience.

Together, these Core Web Vitals metrics show how well a web page responds to real users.
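Google assesses each Core Web Vital at the 75th percentile of real-user page loads. The sketch below classifies field samples against the published thresholds: LCP 2.5 s / 4 s, INP 200 ms / 500 ms, and CLS 0.1 / 0.25. It includes INP, which replaced FID in March 2024. The sample values are hypothetical, and the nearest-rank percentile is a simplification of how field tools aggregate data.

```python
import math

# (upper bound for "good", upper bound for "needs improvement") per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}

def p75(samples):
    """75th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]

def assess(metric, samples):
    """Classify a metric the way the Core Web Vitals thresholds do."""
    value = p75(samples)
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return value, "good"
    if value <= needs_improvement:
        return value, "needs improvement"
    return value, "poor"

print(assess("LCP", [1.2, 1.8, 2.0, 3.1]))   # (2.0, 'good')
print(assess("INP", [150, 180, 220, 240]))   # (220, 'needs improvement')
print(assess("CLS", [0.05, 0.3, 0.3, 0.3]))  # (0.3, 'poor')
```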

Mobile Usability:

- Mobile usability is not just about making a website smaller to fit different devices. It involves understanding how people use their mobile devices and realizing that each user’s mobile experience differs.

- For mobile devices, user experience is as much about how something feels as it is about how it looks and functions.

- Instead of the conventional desktop proxies of a mouse and keyboard, people interact with the screen with their fingertips, or more precisely and frequently, their thumbs.

- This means that mobile design is significantly more tactile compared to desktop design.

- Users experience it in addition to seeing it.

For better or worse, the barrier posed by desktop proxies is no longer there, and mobile designers must now consider a new set of usability-focused UX design principles.

HTTPS

HTTPS is the secure version of HTTP. It is a ranking signal and protects your users, which is part of a great user experience.

Make sure every web page is delivered via HTTPS and verify the security of the connection to your site. When HTTPS is misconfigured, browsers show security warnings or refuse to open the site’s pages.

If SSL/HTTPS is not functioning properly on your website, there may be several causes.

You can avoid SSL problems on your website by being aware of their root causes.

Check whether any of the following causes applies to your site:

- Is the hosting of your domain new?

- Has the SSL certificate expired?

- Did you configure the HTTP-to-HTTPS redirect?

- Does your website load mixed content (HTTP resources on HTTPS pages)?

- Is your domain using your hosting provider’s name servers?

- Have you set up a conflicting DNS record?
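Mixed content means HTTP resources loaded on an HTTPS page, and it is a common cause of HTTPS warnings. This sketch lists such resources from a page's HTML. The sample markup is hypothetical. It only checks the tags listed in `RESOURCE_ATTRS`, and for `<link>` it only checks stylesheets.

```python
from html.parser import HTMLParser

# Tag -> attribute through which the browser loads a sub-resource.
RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src",
                  "source": "src", "audio": "src", "video": "src", "link": "href"}

class MixedContentFinder(HTMLParser):
    """Collects sub-resources requested over plain http:// from an HTTPS page."""
    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = RESOURCE_ATTRS.get(tag)
        if attr is None:
            return
        a = dict(attrs)
        if tag == "link" and "stylesheet" not in (a.get("rel") or "").lower():
            return  # this sketch only checks <link> tags that load stylesheets
        value = a.get(attr) or ""
        if value.lower().startswith("http://"):
            self.insecure.append((tag, value))

finder = MixedContentFinder()
finder.feed('<link rel="stylesheet" href="http://cdn.example.com/site.css">'
            '<img src="https://yourdomain.com/logo.png">'
            '<script src="http://cdn.example.com/app.js"></script>')
print(finder.insecure)
```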

Safe Browsing:

Google looks at safe browsing as a ranking factor, and it is an important technical SEO checklist item.

Google Safe Browsing lets client applications check URLs against Google’s regularly updated lists of unsafe web resources.

Social engineering websites, phishing and deceptive websites, and websites that host malware or unwanted software are a few examples of unsafe web resources.

Safe browsing enables you to:

- Check pages against Google’s Safe Browsing lists by platform and threat type.

- Warn users before they click links on your website that could take them to compromised pages.

- Prevent users from posting links to known compromised pages on your website.

The Safe Browsing API is intended for non-commercial use only. If you need to detect unsafe URLs for commercial purposes, such as selling products or making money, use Google’s Web Risk API instead.
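For reference, a lookup against the Safe Browsing v4 API is a JSON POST to the `threatMatches:find` method. The sketch below follows Google's published request format. `your-company` and `YOUR_API_KEY` are placeholders, and the chosen threat types are an assumption. Check the current documentation and the non-commercial terms above before relying on it.

```python
import json
import urllib.request

LOOKUP_URL = "https://safebrowsing.googleapis.com/v4/threatMatches:find?key={key}"

def build_lookup_body(urls, client_id="your-company", client_version="1.0"):
    """Request body for the Safe Browsing v4 threatMatches.find method."""
    return {
        "client": {"clientId": client_id, "clientVersion": client_version},
        "threatInfo": {
            "threatTypes": ["MALWARE", "SOCIAL_ENGINEERING", "UNWANTED_SOFTWARE"],
            "platformTypes": ["ANY_PLATFORM"],
            "threatEntryTypes": ["URL"],
            "threatEntries": [{"url": url} for url in urls],
        },
    }

def find_threats(urls, api_key):
    """POST the lookup; the API answers with an empty object when nothing matches."""
    request = urllib.request.Request(
        LOOKUP_URL.format(key=api_key),
        data=json.dumps(build_lookup_body(urls)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response).get("matches", [])

# Usage (needs a real API key): find_threats(["https://yourdomain.com/"], "YOUR_API_KEY")
```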

Intrusive Interstitials:

- Intrusive interstitials and dialogs are page elements that block users’ view of the content, typically for promotional purposes.

- Interstitials are overlays that cover the entire page, whereas dialogs cover a portion of the page and can obscure the underlying text.

- Websites often need to display dialogs for various reasons; however, interrupting users with interstitials can annoy them and reduce their trust in your website.

- Intrusive interstitials and dialogs can make it difficult for Google and other search engines to understand your content, which could lead to poorer search performance.

- Users are less likely to return to your website through search engines if they find it difficult to use.

Fixing Broken Links

A link is broken when clicking it returns an error such as 404 (page not found).

Finding and fixing broken links is an important technical SEO checklist item, as it improves your crawl accessibility and user experience.

In other words, a broken link points to a web page that users cannot locate or access, for various reasons.

When a user opens a broken link, the server returns an error message. Dead links and link rot are other terms for broken links.

You can use a dead link checker to find all broken links on a website or web page.

Before creating new engaging content, fix broken link issues to allow in-depth crawling and better crawl budget management.

There are numerous causes for broken links, including:

- The URL entered by the website owner is wrong (misspelled, mistyped, etc.).

- Your website’s URL structure (permalinks) changed without a redirect, resulting in a 404 error.

- The external site has moved, become permanently unavailable, or both.

- Links point to content that was moved or removed (PDF, Google Doc, video, etc.).

- Page components are broken (HTML, CSS, JavaScript), or CMS plugins interfere.

- Access from the outside is prohibited by a firewall or geographical restriction.
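A simple broken-link check extracts every link from a page and looks up its status. In this sketch `fetch_status` is a hypothetical callable you supply. The demo uses a fixed dictionary. A real checker would send HTTP requests and should also handle timeouts and DNS failures.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href> tags, skipping anchors, mailto: and tel: links."""
    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and not href.startswith(("#", "mailto:", "tel:")):
            self.links.append(urljoin(self.base_url, href))

def find_broken_links(page_url, html, fetch_status):
    """Return (link, status) pairs for links that answer with a 4xx or 5xx code."""
    extractor = LinkExtractor(page_url)
    extractor.feed(html)
    broken = []
    for link in dict.fromkeys(extractor.links):  # de-duplicate while keeping order
        status = fetch_status(link)
        if status >= 400:
            broken.append((link, status))
    return broken

# Hypothetical status lookup standing in for real HTTP requests.
STATUSES = {"https://yourdomain.com/blog/": 200, "https://partner.example/": 200}
page_html = ('<a href="/blog/">Blog</a> <a href="/old-page/">Old</a> '
             '<a href="https://partner.example/">Partner</a> <a href="#top">Top</a>')
print(find_broken_links("https://yourdomain.com/", page_html,
                        lambda url: STATUSES.get(url, 404)))
# [('https://yourdomain.com/old-page/', 404)]
```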

Fixing Server Errors (5xx):

Never underestimate 5xx server errors on your web pages.

They are among the most damaging technical issues a website or web page can have.

- Any number of factors, such as uploading the wrong file or a fault in the code, can cause a server error. The 500 Internal Server Error message is a generic “catch-all” response.

- The web server is alerting you to a problem but is unsure of what exactly went wrong.

- If you have root access, you should check the error logs on your web server to learn more about this.

- If you are utilizing a shared hosting package, get in touch with your host to learn more.

- The best way to troubleshoot the error depends on the server and the software currently running on it.

- An internal server error typically indicates that some components of your web server are not configured properly or that the application is attempting to perform an action, but the server is unable to do so because of a conflict or restriction.

- The issue can only be fixed on the web server itself, for example by correcting its configuration or updating its software.

- Web server administrators are responsible for finding and analyzing the logs, which should provide more details about the error.
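If you do have log access, counting 5xx responses per URL shows where to start. This is a minimal sketch that assumes the common/combined access-log format Apache and Nginx typically use. The sample lines are hypothetical and use a documentation IP address.

```python
import re
from collections import Counter

# Request path and status fields of the common/combined access-log format.
LOG_LINE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')

def server_errors(lines):
    """Count 5xx responses per requested path."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match.group("status").startswith("5"):
            counts[match.group("path")] += 1
    return counts

# Hypothetical log lines.
SAMPLE = [
    '203.0.113.7 - - [15/Apr/2024:10:00:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Googlebot/2.1"',
    '203.0.113.7 - - [15/Apr/2024:10:00:05 +0000] "GET /checkout/ HTTP/1.1" 500 312 "-" "Googlebot/2.1"',
    '203.0.113.7 - - [15/Apr/2024:10:01:09 +0000] "GET /checkout/ HTTP/1.1" 503 298 "-" "Googlebot/2.1"',
]
print(server_errors(SAMPLE).most_common())  # [('/checkout/', 2)]
```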

Assign Proper Canonical Tag to Web Pages

- A canonical tag (rel="canonical") is a piece of HTML code that designates the primary version among duplicate, nearly identical, and similar pages.

- Auditing the canonical tag of every web page is a common technical SEO checklist item.

- In other words, if the same or comparable content is available under several URLs, you can use canonical tags to define which version is the original or master web page to be indexed.

- Duplicate content creates problems for Google: it can end up wasting time crawling numerous copies of the same page rather than finding other valuable content on your website.

The fundamentals of using canonical tags:

- Utilize absolute URLs.

- Use lowercase URLs.

- Use self-referential canonical tags.

- Use the appropriate domain version (HTTPS rather than HTTP).

- Use only one canonical tag per page.
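The fundamentals above can be turned into a quick automated check. Here is a sketch using Python's `html.parser`; `check_canonical` is a hypothetical helper. A canonical that points elsewhere is flagged only as a note, because that is correct for genuine duplicates.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on the page."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.hrefs.append(a.get("href") or "")

def check_canonical(page_url, html):
    """Check a page's canonical tag against the fundamentals listed above."""
    finder = CanonicalFinder()
    finder.feed(html)
    if len(finder.hrefs) != 1:
        return [f"expected one canonical tag, found {len(finder.hrefs)}"]
    href = finder.hrefs[0]
    parts = urlparse(href)
    problems = []
    if not (parts.scheme and parts.netloc):
        problems.append("not an absolute URL")
    elif parts.scheme != "https":
        problems.append("not HTTPS")
    if href != href.lower():
        problems.append("contains uppercase characters")
    if href != page_url:
        problems.append(f"not self-referential (points to {href}); fine only for duplicates")
    return problems

print(check_canonical("https://yourdomain.com/page/",
                      '<link rel="canonical" href="https://yourdomain.com/page/">'))  # []
print(check_canonical("https://yourdomain.com/page/",
                      '<link rel="canonical" href="http://yourdomain.com/Page/">'))
```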

There are five common ways to signal a canonical URL. These canonicalization signals are:

- The (rel=canonical) HTML tag

- Internal links

- HTTP header

- Sitemap

- 301 redirect

Google Search Console’s index coverage report uses the following statuses for canonical URL issues:

- Alternate Page with Proper Canonical Tag.

- Duplicate, Submitted URL not selected as canonical.

- Duplicate without user-selected canonical.

- Duplicate, Google chose different canonical than user.

Implementing Structured Data:

- Structured data is a standardized format for describing a page’s content and categorizing it.

- It can make pages eligible for rich results and other enhanced search features, which can attract more traffic. So, it’s a must-have item on the technical optimization checklist for web pages.

- For instance, on a recipe page, structured data might include information about the ingredients, the cooking time and temperature, the calories, and so forth.

- To comprehend the website’s content and learn more about the web and the wider world, Google employs structured data that it discovers on the internet.

- The majority of Search structured data uses the schema.org vocabulary, but for information about Google Search behavior, you should rely on the Google Search Central documentation rather than the schema.org definition.

- More properties and objects can be found on schema.org that are not required by Google Search but may still be beneficial for other platforms, tools, and services.

- To check on the health of your pages, which could break after deployment due to serving or templating problems, test your structured data with Google’s Rich Results Test.

- An object must have all the necessary attributes in order to be eligible for enhanced presentation in Google Search.

- Generally speaking, adding more recommended properties can increase the likelihood that your content appears in search results with enhanced features, which can improve CTR (click-through rate).
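Structured data like the recipe example above is usually added as a JSON-LD script. Here is a sketch that builds one with schema.org `Recipe` properties. The recipe values are hypothetical. Google's Recipe documentation defines which properties are required or recommended, so validate the output with the Rich Results Test.

```python
import json

def recipe_jsonld(name, image, ingredients, cook_time, calories):
    """Wrap a schema.org Recipe object in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image],
        "recipeIngredient": ingredients,
        "cookTime": cook_time,  # ISO 8601 duration, e.g. "PT10M" = 10 minutes
        "nutrition": {"@type": "NutritionInformation", "calories": calories},
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2) + "\n</script>")

snippet = recipe_jsonld(
    name="Masala Chai",
    image="https://yourdomain.com/images/masala-chai.jpg",
    ingredients=["2 cups water", "1 cup milk", "2 tsp black tea", "1 tsp sugar"],
    cook_time="PT10M",
    calories="120 calories",
)
print(snippet)
```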

Breadcrumbs:

A breadcrumb is a secondary navigation aid that shows the user’s location within a website or web application.

Types of breadcrumbs:

1. Location-based (hierarchy-based):

- Location-based breadcrumbs show users where they are in the website’s hierarchy.

- They are frequently used on sites with many navigation levels, usually more than two.

2. Attribute-based:

- The attributes of a certain page are shown in attribute-based breadcrumb trails.

3. Path-based:

- Users may see the steps they took to get to a specific page thanks to path-based breadcrumb trails.

- Because they show the pages the visitor has visited before arriving at the current page, path-based breadcrumbs are dynamic.
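When the URL path mirrors the site hierarchy, location-based breadcrumbs can be derived from it and marked up with schema.org `BreadcrumbList`. Here is a sketch under that assumption; the labels and URL are hypothetical.

```python
import json
from urllib.parse import urlparse

def breadcrumb_jsonld(url, labels):
    """Build a location-based BreadcrumbList from the URL's path segments.

    `labels` maps a path such as "/blog/" to its display name; unlisted
    segments fall back to a title-cased version of the slug."""
    parts = urlparse(url)
    origin = f"{parts.scheme}://{parts.netloc}"
    items = [{"@type": "ListItem", "position": 1,
              "name": labels.get("/", "Home"), "item": origin + "/"}]
    path = "/"
    for segment in [s for s in parts.path.split("/") if s]:
        path += segment + "/"
        items.append({"@type": "ListItem", "position": len(items) + 1,
                      "name": labels.get(path, segment.replace("-", " ").title()),
                      "item": origin + path})
    return {"@context": "https://schema.org", "@type": "BreadcrumbList",
            "itemListElement": items}

trail = breadcrumb_jsonld("https://yourdomain.com/blog/technical-seo/checklist/",
                          {"/blog/": "Blog", "/blog/technical-seo/": "Technical SEO"})
print(" > ".join(item["name"] for item in trail["itemListElement"]))
# Home > Blog > Technical SEO > Checklist
print(json.dumps(trail, indent=2))
```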

Hreflang Tags:

- Hreflang is an HTML attribute used to specify the language and, optionally, the region of a web page. Implementing hreflang is just one of the steps needed to create a multilingual website.

- The hreflang tag tells Google which version of a page is most appropriate for users searching in a particular language or region.

- It does not make these pages rank higher; it helps Google show each user the right version.

- The hreflang tag can also inform search engines like Google about translations of the same page that exist in several languages.
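Each language version should carry the full set of hreflang annotations, including a link to itself, plus an optional `x-default` fallback. Here is a sketch that generates those tags; the URLs and language-region codes are hypothetical.

```python
# Hypothetical language/region versions of one page.
VERSIONS = {
    "en-us": "https://yourdomain.com/en-us/technical-seo/",
    "en-gb": "https://yourdomain.com/en-gb/technical-seo/",
    "de-de": "https://yourdomain.com/de-de/technical-seo/",
}
FALLBACK = "https://yourdomain.com/technical-seo/"  # shown when no version matches

def hreflang_tags(versions, fallback):
    """<link> tags to place in the <head> of EVERY version, including itself."""
    tags = [f'<link rel="alternate" hreflang="{code}" href="{url}" />'
            for code, url in sorted(versions.items())]
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return "\n".join(tags)

print(hreflang_tags(VERSIONS, FALLBACK))
```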

Conclusion:

- A technical SEO checklist is a to-do list that improves your website’s crawl accessibility and user experience.

- The goal of technical SEO is complete crawling of web pages, good crawl budget management, and fast website loading speed.

- You can achieve this with our proven 30+ item technical SEO checklist.

- Your website, however old or new it is, likely has some technical SEO issues that could hurt its rankings.

- The good news is that most technical SEO issues can be resolved quickly, allowing you to reap the rewards as soon as possible.

- To find technical problems on your site, your best plan of action is to get a technical SEO audit from professionals like 7 Eagles.

- We hope you now have a better understanding of the checklist for technical optimization of your web pages.

- If you still need an expert team to rejuvenate your website’s performance, contact us anytime.

- We invite you to check out our complete technical SEO services process.

Frequently Asked Questions

What is technical SEO?

Technical SEO involves optimizing a website’s structure for better search engine visibility. It includes improving site speed, ensuring mobile compatibility, fixing crawl errors, and organizing content logically. Implementing HTTPS, structured data, and proper URL structures is also crucial. Together, these help websites rank higher.

How do you audit technical SEO?

To audit technical SEO, start by checking website speed, mobile-friendliness, and crawlability. Look for broken links, duplicate content, and XML sitemap issues. Assess site architecture, HTTPS implementation, and structured data markup. Utilize tools like Google Search Console and website crawlers to identify and fix any issues.

Is technical SEO difficult?

Technical SEO can feel difficult at first because it involves understanding how websites are built, including some code, and how search engines work. But with some learning and practice, it becomes easier.

Does technical SEO require coding skills?

Technical SEO does not always require coding skills, but having some basic understanding can be helpful for tasks like fixing website errors or implementing structured data. However, many aspects of technical SEO can be managed without coding knowledge using various SEO tools.

How long does a technical SEO audit take?

The time it takes to conduct a technical SEO audit depends on factors like the size and complexity of the website, the thoroughness of the audit, and the tools used. It can range from a few hours for smaller websites to several days or even weeks for larger, more complex ones.