Mastering the fundamentals of SEO helps you become an expert and grow your website with organic traffic. To get there, you need to understand technical SEO thoroughly.

Most experts say, “Don’t optimize for search engines, optimize for users.” But how can a web page rank if search engines never crawl it?

Start with that question, because you're going to learn the complete concept behind a website's technical robustness and its role in SEO.

What Is Technical SEO?

Technical SEO is the process of optimizing the technical components of a website, work that requires webmaster skills.

It ranges from working on code, XML sitemaps, and robots.txt for crawling, indexing, and rendering, to hosting and page experience.

The basics of SEO start with crawl accessibility; after that, keyword-focused, SEO-optimized, helpful content matters. In recent years, Google has also been measuring real-world user experience as one of its ranking factors.

As a result, since 2021 the SEO world has seen updates like Core Web Vitals and page experience for mobile and desktop.

Even the core updates released in May and September 2022 put a strong focus on the usability of web pages.

Technical SEO Importance

You can never be a pro SEO professional or agency without developing the core skills to work with a website's technical components.

Even as a business owner, you should be careful to choose an SEO agency, like us, that has deep knowledge and experience in the technical side of websites.

Before moving forward, split the technical side of SEO into two areas:

- crawl accessibility,

- user or page experience.

Here are a few reasons technical SEO matters, and why you should apply it to your website today.

- You can have great content and quality backlinks. But if your page can't be crawled or indexed by search engines, it's just a futile page on the internet.

- To optimize crawling, you should understand how to optimize robots.txt, the XML sitemap, and the robots meta tag.

- No CMS plugin on its own will help your pages recover from page indexing issues such as "Crawled - currently not indexed" and "Discovered - currently not indexed".

- Since the 2015 "Mobilegeddon" update, mobile-friendliness has been a Google ranking signal, and Google now uses mobile-first indexing, meaning it mainly crawls and indexes the mobile version of a page. So every web page must be mobile-friendly (usable on mobile).

- Many SEO professionals think Google Search Console is just for checking organic traffic from Google. But it's one of the best technical SEO tools, giving you visibility into:

  - page indexing issues,

  - mobile usability issues,

  - page experience and Core Web Vitals status,

  - rich results,

  - manual actions and security issues.

- Mobile usability, HTTPS, and Core Web Vitals are important Google ranking signals.

- Since 2021, Google has weighed the usability of web pages alongside good content and authoritative backlinks.

- The faster a page loads on mobile (Google rates a Largest Contentful Paint of 2.5 seconds or less as good), the better its chance of ranking higher in the SERP (search engine results page).

- Properly implemented structured data (schema markup) can make your pages eligible for rich results in the SERP.

- We have seen huge drops in Google organic traffic on web pages that failed Core Web Vitals.

Technical SEO Guide

To explain the best practices for optimizing the technical components, we have broken the topic into chapters for a better, more detailed understanding.

1. How do search engines work?

This first chapter explains how Google's search crawlers work. You may already know that crawling, indexing, and ranking are the three main jobs of search engines, but you should also understand other steps like URL discovery and rendering. This chapter explains what blocks crawling and indexing, and how to fix those barriers.

2. What are crawl errors?

This chapter covers the crawl errors that can exclude web pages from crawling or indexing. These errors can stem from URL, DNS, server, and robots.txt problems.

3. Google Coverage/Page Indexing Issues

Google Search Console reports both indexed and non-indexed pages in the Page Indexing report (formerly called Coverage). When a page is listed as Indexed, there are no issues.

But when an important page appears under Not indexed, you need to fix the issue.

To fix page indexing issues, you first need a complete understanding of what each issue means.

4. Role of XML Sitemap

Sitemap.xml is a file listing the URLs of all the pages on a website that are meant to be indexed. XML sitemaps are an important tool in crawl management.

Crawlers like Googlebot use the XML sitemap to discover new URLs. A page is more likely to be indexed when it is listed in sitemap.xml and its robots meta tag allows indexing ("index", or no "noindex").
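To make the sitemap format concrete, here is a minimal Python sketch that builds a sitemap.xml from a list of URLs using only the standard library. The function name `build_sitemap` and the example.com URLs are hypothetical; the `urlset`/`url`/`loc` structure and namespace follow the sitemaps.org protocol, and optional tags such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap.xml content listing the given indexable URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes & and <
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs; list only pages you actually want indexed.
pages = [
    "https://www.example.com/",
    "https://www.example.com/technical-seo/",
]
print(build_sitemap(pages))
```

Feed it only indexable URLs: listing pages that carry noindex or that redirect sends crawlers mixed signals.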

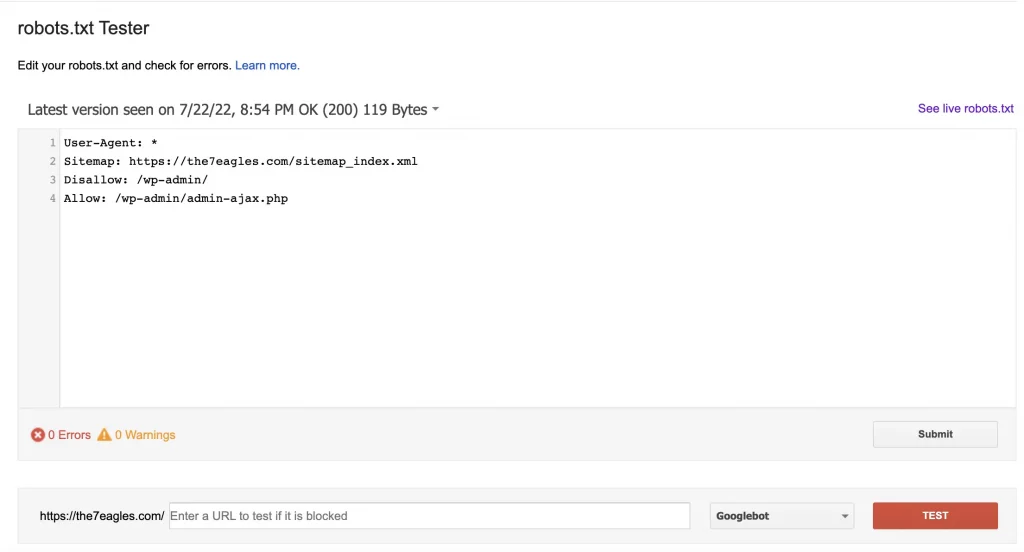

5. Importance of Robots.txt in Crawl Management

Another important technical component for crawl management is robots.txt. It is a plain text file stored at the root of the website (for example, /robots.txt). It tells crawlers which pages to crawl and which to exclude, using directives such as User-agent, Disallow, Allow, and Sitemap.

Most websites leave a big gap in how they optimize robots.txt. Any website with more than 10,000 pages should understand robots.txt thoroughly.
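Python's standard `urllib.robotparser` module is a handy way to see how User-agent, Disallow, and Sitemap directives are evaluated. The robots.txt content below is a hypothetical example parsed from a string rather than fetched. One caveat: Python's parser applies rules in file order, whereas Google uses the most specific (longest) matching rule, so results can differ when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; on a live site it is served at /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of fetching

for url in (
    "https://www.example.com/blog/technical-seo/",
    "https://www.example.com/cart/checkout/",
):
    verdict = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)

# site_maps() (Python 3.8+) returns the Sitemap URLs, or None if there are none.
print(parser.site_maps())
```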

6. Why you need to optimize the meta robots tag and X-Robots-Tag

The robots meta tag is an HTML element that tells crawlers whether to index a page and whether to follow the links on it. The X-Robots-Tag, by contrast, is sent as an HTTP response header, so it also works for non-HTML files such as PDFs, and when set in the server configuration it can stop an entire website from being indexed or its links from being followed.

It's important to understand the key concepts of using both the robots meta tag and the X-Robots-Tag in order to manage crawl budget and help your important pages rank for maximum organic visibility.
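As a rough sketch of how the two mechanisms combine, the Python below collects directives from `<meta name="robots">` (and `googlebot`) tags and from an `X-Robots-Tag` header, then reports whether the page may be indexed and its links followed. The helper names are hypothetical, `headers` is assumed to be a plain dict with the header name spelled exactly `X-Robots-Tag`, and user-agent-prefixed header values such as `googlebot: noindex` are not handled.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def indexing_directives(html, headers):
    """Merge robots meta directives with an X-Robots-Tag response header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    header = headers.get("X-Robots-Tag", "")
    directives.update(d.strip().lower() for d in header.split(",") if d.strip())
    # "none" is shorthand for "noindex, nofollow".
    return {
        "indexable": "noindex" not in directives and "none" not in directives,
        "follow_links": "nofollow" not in directives and "none" not in directives,
    }


page = '<head><meta name="robots" content="noindex, follow"></head>'
print(indexing_directives(page, {}))  # {'indexable': False, 'follow_links': True}
```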

7. Role of canonical URL in indexing a web page

The canonical tag (or canonical URL) is an important concept in SEO, as search engines generally index the canonical version of a web page.

If you have read chapter 1 (How do search engines work?), you will have learned how search engines use the canonical URL when indexing a page.

At the same time, there are 5 coverage issues related to canonical URLs that can exclude a web page from indexing.
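To see which canonical a page declares, you can read its `<link rel="canonical">` tag. This minimal Python sketch (hypothetical helper names, standard-library `html.parser` only) returns the first declared canonical URL from static HTML, or None when the tag is missing.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rels = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attrs.get("href")


def user_declared_canonical(html):
    """Return the canonical URL declared in the HTML, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


html = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(user_declared_canonical(html))
```

Compare the result with the Google-selected canonical shown in Search Console; a mismatch is the usual starting point for the canonical-related coverage issues.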

8. How to Choose SEO-Friendly Web Hosting

Hosting plays a huge role in web performance metrics such as TTFB, FCP, LCP, and TTI (of these, only LCP is a Core Web Vital). It's better to choose a host that helps you deliver a better user experience.

A high-performance website needs to be hosted on dedicated servers with ample storage and enough bandwidth to handle its traffic. We recommend switching to dedicated cloud servers with 99.9% uptime, 24/7 support, and complete security features.
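It helps to translate an uptime guarantee into actual downtime. This small Python calculation (the function name is ours, for illustration) shows that 99.9% uptime still allows about 8.76 hours of downtime per year, or about 43.2 minutes in a 30-day month.

```python
def allowed_downtime_hours(uptime_percent, period_hours):
    """Maximum downtime, in hours, that an uptime guarantee still permits."""
    return (1 - uptime_percent / 100) * period_hours


HOURS_PER_YEAR = 365 * 24          # 8760
HOURS_PER_30_DAY_MONTH = 30 * 24   # 720

print(f"{allowed_downtime_hours(99.9, HOURS_PER_YEAR):.2f} h per year")                  # 8.76 h per year
print(f"{allowed_downtime_hours(99.9, HOURS_PER_30_DAY_MONTH) * 60:.1f} min per month")  # 43.2 min per month
```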

9. Details of HTTP Status Code

Understanding HTTP status codes is vital to improving your technical SEO skills. An HTTP response status code is the code the server sends back whenever a browser (or crawler) sends it a request.

There are 5 broad classes of HTTP status codes (1xx, 2xx, 3xx, 4xx, and 5xx), which together include 69 individual status codes. You don't need to know all 69, but you should fully understand the roughly 12 status codes that Google supports.
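The class of a status code is simply its first digit. This Python sketch uses the standard library's `http.HTTPStatus` enum for reason phrases; `describe_status` is a hypothetical helper, and codes missing from that enum are reported as unregistered.

```python
from http import HTTPStatus

# Broad classes, keyed by the first digit of the status code.
STATUS_CLASSES = {
    1: "1xx informational",
    2: "2xx success",
    3: "3xx redirection",
    4: "4xx client error",
    5: "5xx server error",
}


def describe_status(code):
    """Return the code, its standard reason phrase, and its broad class."""
    status_class = STATUS_CLASSES.get(code // 100, "unknown class")
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:  # not defined in Python's http.HTTPStatus enum
        phrase = "unregistered code"
    return f"{code} {phrase} ({status_class})"


for code in (200, 301, 404, 503):
    print(describe_status(code))
# 200 OK (2xx success)
# 301 Moved Permanently (3xx redirection)
# 404 Not Found (4xx client error)
# 503 Service Unavailable (5xx server error)
```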

10. Understand the concept of Redirects

Redirects can either harm or help SEO; the impact depends heavily on how they are used. Multiple redirects in a chain can slow down page loading and sometimes lead to redirect loops.

Redirect every deleted or moved page to its new destination, and make sure no internal links point to redirected URLs.

Redirects are fine when they transfer the authority built by an old page to the new page. Again, be careful to avoid multiple redirects.
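Redirect chains and loops are easy to spot once you have a map of each redirecting URL and its target, for example from a site crawl export. The Python sketch below follows that map; the function name and sample paths are hypothetical.

```python
def follow_redirects(url, redirects):
    """Follow a redirect map starting at `url`.

    Returns (chain, is_loop): every URL visited in order, and whether the
    chain ends by revisiting a URL it already passed through.
    """
    chain = [url]
    while url in redirects:
        url = redirects[url]
        if url in chain:
            return chain + [url], True
        chain.append(url)
    return chain, False


# Hypothetical redirect map (source path -> target path).
redirects = {
    "/old-guide": "/seo-guide",
    "/seo-guide": "/technical-seo",
    "/page-a": "/page-b",
    "/page-b": "/page-a",
}

for start in ("/old-guide", "/page-a"):
    chain, is_loop = follow_redirects(start, redirects)
    print(" -> ".join(chain), "| hops:", len(chain) - 1, "| loop:", is_loop)
```

A chain longer than one hop (as for /old-guide) is a cue to point the first URL, and any internal links to it, straight at the final destination.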

11. Understand Broken Links and 404 Not Found

Broken links hurt user experience: they can make users leave the page and increase the bounce rate. A broken link usually points to a URL that returns a 404 Not Found status.

Fix broken links: if a web page was removed or moved, redirect the old address to the new destination, or remove the broken link from your internal pages to avoid more 404 hits.
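If a crawler export gives you each page's internal links and the HTTP status of every URL, finding broken links is a simple lookup. A Python sketch with hypothetical data structures and sample paths:

```python
def find_broken_links(internal_links, status_by_url):
    """Return (source page, target URL) pairs where the target returns 404."""
    broken = []
    for page, targets in internal_links.items():
        for target in targets:
            if status_by_url.get(target) == 404:
                broken.append((page, target))
    return broken


# Hypothetical crawl data: page -> internal links, and URL -> HTTP status.
internal_links = {
    "/blog/": ["/technical-seo", "/old-post"],
    "/about": ["/old-post"],
}
status_by_url = {"/technical-seo": 200, "/old-post": 404}

for page, target in find_broken_links(internal_links, status_by_url):
    print(f"{page} links to {target}, which returns 404")
```

Each reported pair tells you which page to edit, or which old address needs a redirect.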

12. Google Page Experience Update

Google's page experience update focused on real-world user experience by evaluating 5 factors on both mobile and desktop: Core Web Vitals, mobile usability, HTTPS, safe browsing, and the absence of intrusive interstitials.

As Google's ranking factors give more weight to the usability of web pages, improving page experience on mobile and desktop can improve the organic visibility of pages with helpful, relevant content.

13. Optimize to Pass Core Web Vitals

Since June 2021, Core Web Vitals have been a strong ranking factor that acts as more than a tie-breaker. Core Web Vitals are assessed with field data from real users, and lab tools can approximate them.

The most important web vitals are Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS); in March 2024, Interaction to Next Paint (INP) replaced FID as a Core Web Vital. Other metrics that contribute to web performance include First Contentful Paint (FCP), Time to First Byte (TTFB), Time to Interactive (TTI), Total Blocking Time (TBT), and Speed Index.

Learn the complete concept of Core Web Vitals, the causes of poor metrics, and ways to improve them to dominate the SERP.
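Google rates each Core Web Vital as good, needs improvement, or poor using thresholds published on web.dev, applied to the 75th percentile of page loads. The Python sketch below encodes those thresholds; the sample values are made up, and the nearest-rank percentile is a simplification of how the Chrome UX Report aggregates field data.

```python
import math

# (good_max, needs_improvement_max) per metric, as published on web.dev.
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds; replaced FID in March 2024
    "FID": (100, 300),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def p75(samples):
    """75th percentile by the nearest-rank method (a simplification)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, samples):
    """Return the p75 value and its rating for one metric."""
    value = p75(samples)
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return value, "good"
    if value <= needs_improvement_max:
        return value, "needs improvement"
    return value, "poor"


# Hypothetical field samples for one page.
print(rate("LCP", [1800, 2100, 2300, 2600, 3900, 2400, 2200, 5200]))  # (2600, 'needs improvement')
print(rate("CLS", [0.02, 0.05, 0.0, 0.12]))                            # (0.05, 'good')
```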

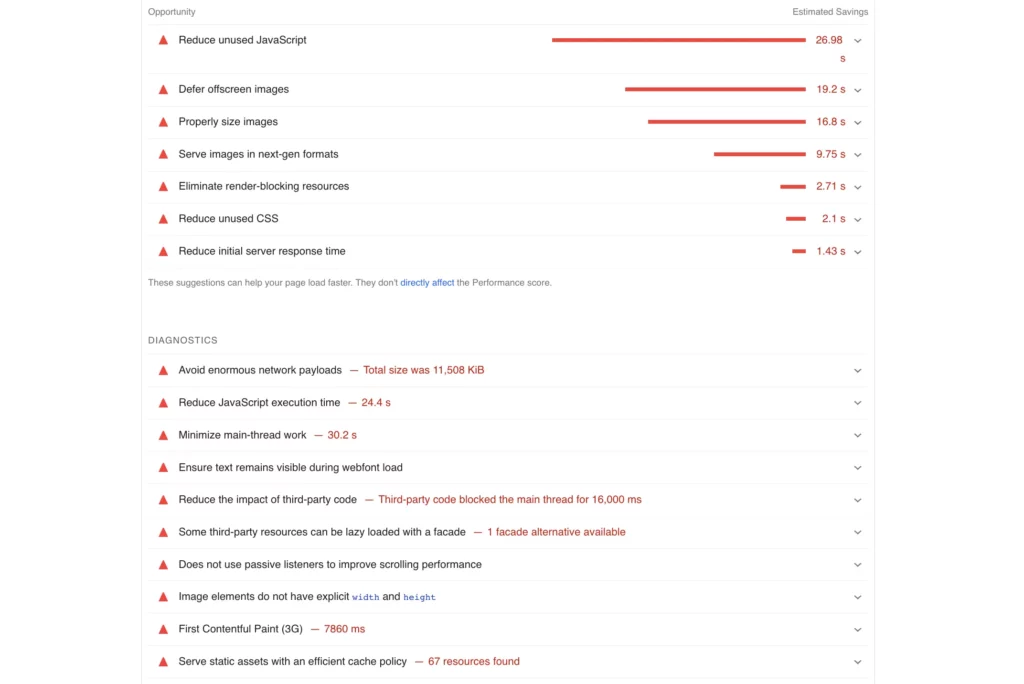

14. How to Optimize Website Speed

Website speed optimization is the process of optimizing critical and non-critical rendering resources, images, and other multimedia so that the page responds to the user within 2.5 seconds.

Using fewer plugins, a lightweight theme, high-speed servers, and a CDN, minimizing JavaScript, inlining critical CSS, and removing unused CSS can help your website load in less than 2.5 seconds.

15. Mobile Usability - A Ranking Factor

Mobile usability has been another important SEO factor since the Mobilegeddon update. Since more than 70% of worldwide searches happen on mobile devices, you should optimize your website's UI/UX for mobile screens.

Mobile users navigate your website by touch rather than with a mouse or keyboard, so user interface and user experience are crucial.

Mobile phones are designed to make browsing the web easier, so your website should offer features that support this philosophy.

16. How to Make a Website Mobile Friendly

A mobile-friendly website is easier for visitors to use, which helps the website owner attract more traffic.

More and more visitors use mobile devices, so your website must work well on all devices, regardless of their operating system.

17. HTTPS - Important Technical Component

Hypertext Transfer Protocol (HTTP) is the protocol that handles data transfer between a browser's request and the server's response.

When a page is served over unsecured HTTP, browsers flag it as "Not secure", which discourages users from interacting with its content and hurts the page's usability. Securing the connection with an SSL/TLS certificate (HTTPS) can help increase user interaction with the page.

HTTPS is part of page experience and a Google ranking signal, so a website served over HTTPS can rank better than one without an SSL certificate.

Technical SEO Tools You Should Know

To make your work easier, you should gain solid experience with the technical SEO tools available. Most tools discussed here are free, yet they will sharpen your skills considerably when you use them together.

1. Google Search Console

Google Search Console is the first choice among technical SEO tools. GSC gives you a 360-degree view of page indexing (formerly coverage), page experience, and enhancement (rich results) status and issues.

Apart from this, you can also check advanced details like crawl stats, manual actions, and security issues.

The Page Indexing section has two groups: Indexed and Not indexed. Indexed pages have no coverage issues. Not-indexed pages are affected by a coverage issue, so check each issue and the pages it affects.

The Page Experience section gives you visibility into issues with Core Web Vitals, HTTPS, and mobile usability.

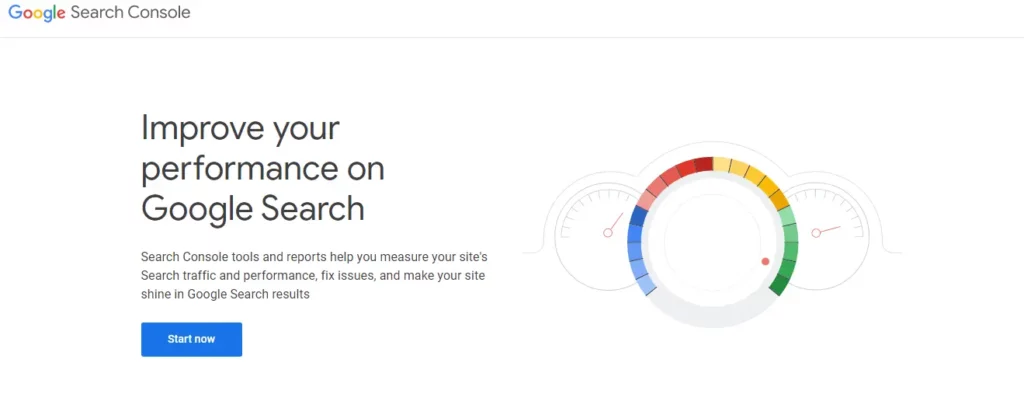

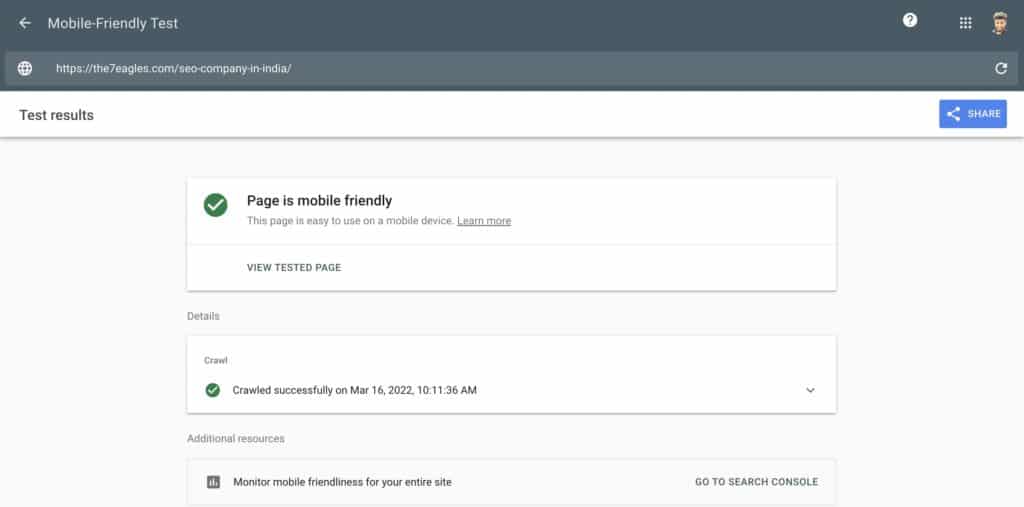

2. Mobile Usability Test

It is another free tool from Google to check whether your web page is mobile-friendly. It’s mandate to validate each and every web page whether they pass mobile usability.

As Google prefers mobile-first indexing, your web pages should be well optimizied for mobile screens.

3. Page Speed Insight

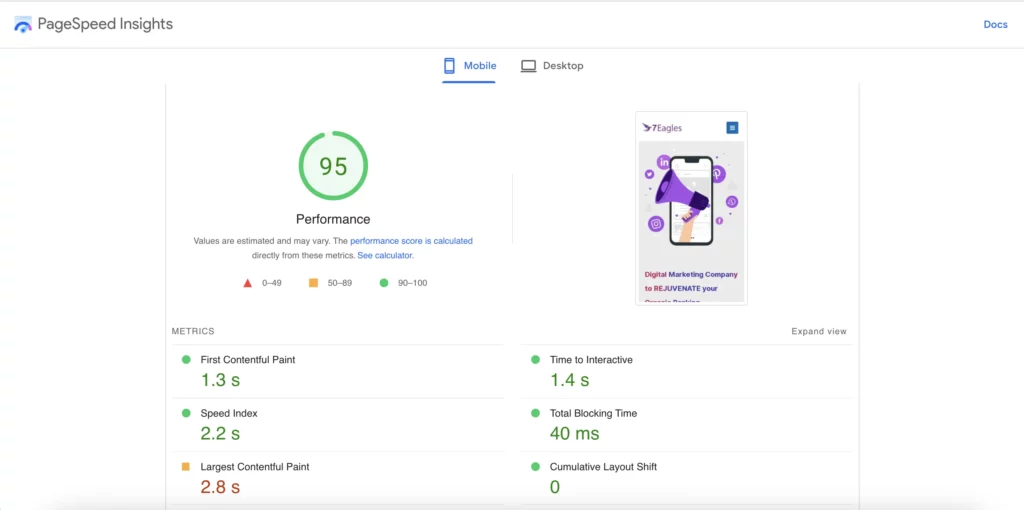

Page speed insight tool is used to analyze the lighthouse components— Core web vitals and other web vitals. When your website has ample of organic traffic, you get the details of both lab (chrome) and field (real-world) data.

Page Speed insight highlights the details like,

- Largest Contentful Paint (LCP)

- First Input Delay (FID)

- Cumulative Layout Shift (CLS)

- First Contentful Paint (FCP)

- Total Blocking Time (TBT)

- Time to First Byte (TTFB)

- Speed Index

- Interaction to Next Paint (INP)

- Time to Interactive.

It also lists issues, opportunities, and diagnostics for any poor web vitals.

These details give developers and SEO experts a clear picture of what to fix.

Technical SEO Best Practices

Here are proven strategies to apply in your daily work to get all important pages indexed and pass Core Web Vitals.

Technical SEO basically comes in two types: crawl accessibility and page experience.

So don't overcomplicate it by overthinking or assuming technical SEO is never easy. Once you understand the concepts, you can become a true SEO expert.

Start your practice with a technical SEO audit.

Technical SEO Audit:

An audit is the first job an SEO expert, specialist, or consultant should do. It helps you understand exactly why your website is losing organic traffic.

To do this, you need a proper SOP or checklist. A checklist keeps the process simple, helps you diagnose issues quickly, and lets you fix them right away.

Your audit process should include the following:

- A list of all URLs (web pages) that should be indexed.

- Index status.

- Coverage issue status (if the page is excluded from indexing).

- Whether robots.txt blocks the URL from crawling.

- Whether the URL is present in the XML sitemap.

- Whether a robots tag excludes the URL from indexing.

- User-selected canonical.

- Google-selected canonical.

- Are the Google-selected and user-selected canonicals the same?

- Internal duplicate content.

- Does the URL pass page experience?

- Does the web page pass Core Web Vitals?

- Is the web page mobile-friendly?

- Does the hosting have ample storage and 99.9% uptime?

- Does your website have a caching system?

and many more.
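One way to keep this checklist consistent is to record each URL as a structured row and derive the problems from it. The Python sketch below covers a subset of the checks; the `UrlAudit` fields and messages are hypothetical names, and the values would come from Search Console, your crawler, and PageSpeed Insights.

```python
from dataclasses import dataclass


@dataclass
class UrlAudit:
    """One row of a technical SEO audit sheet (hypothetical field names)."""
    url: str
    indexed: bool
    blocked_by_robots_txt: bool
    in_sitemap: bool
    has_noindex: bool
    user_canonical: str
    google_canonical: str
    passes_core_web_vitals: bool
    mobile_friendly: bool


def audit_findings(row):
    """Return human-readable problems for a single audited URL."""
    checks = [
        (not row.indexed, "not indexed"),
        (row.blocked_by_robots_txt, "blocked by robots.txt"),
        (not row.in_sitemap, "missing from XML sitemap"),
        (row.has_noindex, "robots tag excludes it from indexing"),
        (row.user_canonical != row.google_canonical, "Google chose a different canonical"),
        (not row.passes_core_web_vitals, "fails Core Web Vitals"),
        (not row.mobile_friendly, "not mobile-friendly"),
    ]
    return [message for failed, message in checks if failed]


row = UrlAudit(
    url="https://www.example.com/guide/",
    indexed=False,
    blocked_by_robots_txt=False,
    in_sitemap=True,
    has_noindex=False,
    user_canonical="https://www.example.com/guide/",
    google_canonical="https://www.example.com/guide",
    passes_core_web_vitals=True,
    mobile_friendly=True,
)
print(row.url, audit_findings(row))
```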

If you need an expert team to assist you in auditing the technical components of your website, you can reach us for a comprehensive site audit with solutions.

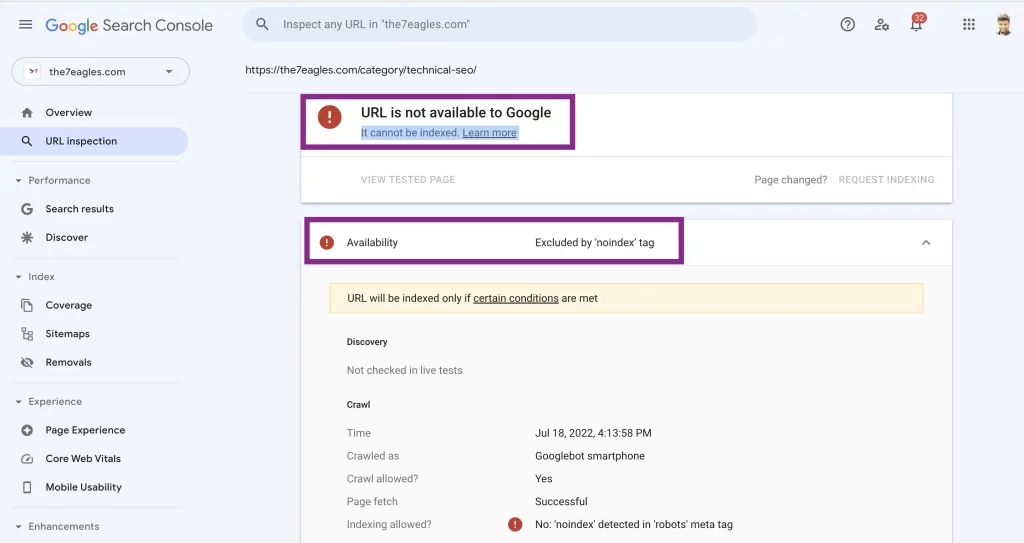

Try to Address Coverage Issues

The most important goal of any SEO effort is getting your web pages indexed, because without indexing, they can't rank.

To check this, use the URL Inspection tool in Google Search Console.

It gives you a detailed report containing:

- Last crawl date.

- Which bot crawled the page.

- Crawl allowed (whether robots.txt allows crawling).

- Page fetch (whether Google could fetch the page).

- Indexing allowed: this shows why the page is not indexed.

- User-declared canonical.

- Google-selected canonical.

Once you find the issue, resolve it using the techniques we shared in our Google index coverage issues article.
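The same fields are available programmatically through the Search Console URL Inspection API. The Python sketch below only flattens an already-fetched response; authentication and the HTTP call are omitted. The field names and values follow the API's `indexStatusResult` object as we understand it and should be checked against the official reference; the sample response is invented.

```python
def summarize_inspection(response):
    """Flatten the indexStatusResult part of a URL Inspection API response."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    user_canonical = status.get("userCanonical")
    google_canonical = status.get("googleCanonical")
    return {
        "verdict": status.get("verdict"),
        "coverage": status.get("coverageState"),
        "last_crawl": status.get("lastCrawlTime"),
        "crawled_as": status.get("crawledAs"),
        "crawl_allowed": status.get("robotsTxtState") == "ALLOWED",
        "page_fetch_ok": status.get("pageFetchState") == "SUCCESSFUL",
        "indexing_allowed": status.get("indexingState") == "INDEXING_ALLOWED",
        "canonical_mismatch": bool(
            user_canonical and google_canonical and user_canonical != google_canonical
        ),
    }


# Invented sample response, for illustration only.
sample = {"inspectionResult": {"indexStatusResult": {
    "verdict": "NEUTRAL",
    "coverageState": "Crawled - currently not indexed",
    "robotsTxtState": "ALLOWED",
    "indexingState": "INDEXING_ALLOWED",
    "pageFetchState": "SUCCESSFUL",
    "lastCrawlTime": "2024-01-15T08:30:00Z",
    "crawledAs": "MOBILE",
    "userCanonical": "https://www.example.com/guide/",
    "googleCanonical": "https://www.example.com/guide/",
}}}

for key, value in summarize_inspection(sample).items():
    print(f"{key}: {value}")
```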

Check Whether Robots.txt Blocks Crawlers

You can use tools like the robots.txt Tester to check whether Google's bots can crawl a web page.

If an important page is blocked by robots.txt, fix the Disallow directive in the robots.txt file.

Is the Web Page Listed in the XML Sitemap?

An XML sitemap helps crawlers find new web pages even when no internal or external pages link to them. So it's important for every indexable page to be in sitemap.xml.

At the same time, an indexable page (robots tag “index”) that is listed in sitemap.xml tends to be indexed sooner than one that isn't.
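To check whether a URL is listed, you can parse the sitemap and compare its `<loc>` values. Here is a standard-library Python sketch with a hypothetical sample sitemap. It handles a plain `urlset` only (not sitemap index files) and compares URLs exactly, so a missing trailing slash counts as a different URL.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


# Hypothetical sitemap content; in practice, download /sitemap.xml first.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/technical-seo/</loc></url>
</urlset>"""

listed = sitemap_urls(SAMPLE_SITEMAP)
for url in ("https://www.example.com/technical-seo/", "https://www.example.com/contact/"):
    print(url, "in sitemap" if url in listed else "NOT in sitemap")
```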

Are the User-Declared and Google-Selected Canonicals the Same?

This check helps you find duplicate content on the website. If Google doesn't choose the user-declared canonical, that's a signal of duplicate content.

If you have duplicate content on your web pages, it's high time to fix it.

Validate Whether a Web Page is Mobile Friendly

As discussed in the technical SEO tools section, use the mobile-friendly test to check whether a web page is mobile-friendly.

If it is not mobile-friendly, here are a few common reasons:

- Clickable elements too close together.

- Uses incompatible plugins.

- Viewport not set.

- Viewport not set to “device-width”.

- Content wider than screen.

- Text too small to read.

Once you know which issue causes your page to fail the mobile-friendly test, fix it according to the guidelines.
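Two of the issues above, "Viewport not set" and "Viewport not set to device-width", can be detected straight from the HTML. This minimal Python sketch uses hypothetical helper names and reads the viewport meta tag from static HTML only.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Stores the content attribute of <meta name="viewport">."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.content = attrs.get("content") or ""


def viewport_issue(html):
    """Return a viewport problem message, or None if the viewport looks fine."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return "Viewport not set"
    settings = {}
    for part in parser.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return 'Viewport not set to "device-width"'
    return None


good = '<meta name="viewport" content="width=device-width, initial-scale=1">'
print(viewport_issue(good))
print(viewport_issue('<meta name="viewport" content="width=1024">'))
```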

Fix Core Web Vitals

Core Web Vitals are an important SEO ranking factor, and Google has paid close attention to the usability of web pages in recent core updates.

Core Web Vitals are now the essential metrics for validating a website's loading speed, interactivity, and visual stability.

There are numerous components you need to fix to pass Core Web Vitals and page experience:

- Use reliable cloud servers with maximum storage and 99.9% uptime.

- Install cache layers.

- Eliminate render-blocking resources.

- Remove unused CSS files.

- Minify JavaScript and CSS.

- Compress images to under 100 KB, and serve them in next-generation formats like WebP.

- Use lazy loading for images.

- Keep videos under 2 MB, and serve them in WebM format.

- Reduce JavaScript execution time.

- Reduce unused JavaScript.

- Use proper dimensions for images and other multimedia.

- Keep the DOM size under 800 nodes.

- Keep request counts low and transfer sizes small.

- Defer offscreen images

- Avoid enormous network payloads

- Reduce initial server response time.

- Minimize main-thread work

- Ensure text remains visible during webfont load

- Reduce the impact of third-party code
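For a quick check of the DOM size item above, you can count the elements in a page's HTML. This Python sketch counts start tags with the standard `html.parser`; the helper names and the `DOM_BUDGET` constant are ours. It is only an approximation, since it sees the static HTML rather than the DOM after JavaScript runs or after the browser inserts implied elements.

```python
from html.parser import HTMLParser

DOM_BUDGET = 800  # element budget suggested in the checklist above


class ElementCounter(HTMLParser):
    """Counts element start tags as a rough proxy for DOM size."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # Self-closing tags such as <br/> also reach here via handle_startendtag.
        self.count += 1


def dom_element_count(html):
    counter = ElementCounter()
    counter.feed(html)
    counter.close()
    return counter.count


sample_html = "<html><body><ul>" + "<li>item</li>" * 900 + "</ul></body></html>"
count = dom_element_count(sample_html)
print(count, "elements:", "over budget" if count > DOM_BUDGET else "within budget")
```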

Conclusion:

- Technical SEO is never optional; it's always a necessity. Most people avoid it because they lack the knowledge.

- This article has covered the A to Z of understanding and working on the technical components of a website.

- If you still can't find a solution, book our technical SEO services.