Search engines and SEO evolve every day to deliver the best content on the Internet to users. A sitemap is not a new concept in SEO practice, yet there are a few checklists and best practices that help make sure it serves its goal.

An XML sitemap is one of the primary tools for monitoring a site's technical health; once submitted to Google Search Console or Bing Webmaster Tools, it helps surface many technical issues and the indexing status of your pages.

There is a lot of misinformation in the industry, and we are here to clear up the myths. This article explains the role of the sitemap and its importance in SEO practice.

What Is a Sitemap?

The sitemap contains a list of the website's URLs that should be indexed.

It acts as a blueprint of the website, helping search engine crawlers such as Googlebot and Bingbot discover and understand its web pages.

Websites sometimes have both a sitemap.xml and a sitemap.html. Crawlers read the XML sitemap, while the HTML sitemap is meant for humans.

So, the HTML sitemap plays little direct role in optimizing the website for search engines.

Back to sitemap.xml: it helps crawlers discover, crawl, and index web pages (the stages of how a search engine works).

Here are a few advantages of an XML sitemap that is optimized according to Google's webmaster guidelines:

- it helps crawlers reach deep pages and index them faster on large websites (1,000+ pages).

- it helps crawlers discover newly published web pages.

- it helps crawlers identify changes to the content of existing pages and re-index them.

- it helps crawlers find new web pages even if your website has weak internal linking, few backlinks, or poor site architecture.

- you can also temporarily include “noindex” web pages in an XML sitemap so crawlers revisit them and drop them from the index; the Google Search Console Removals tool, by contrast, only hides URLs temporarily.

What Is an XML Sitemap? (With Example)

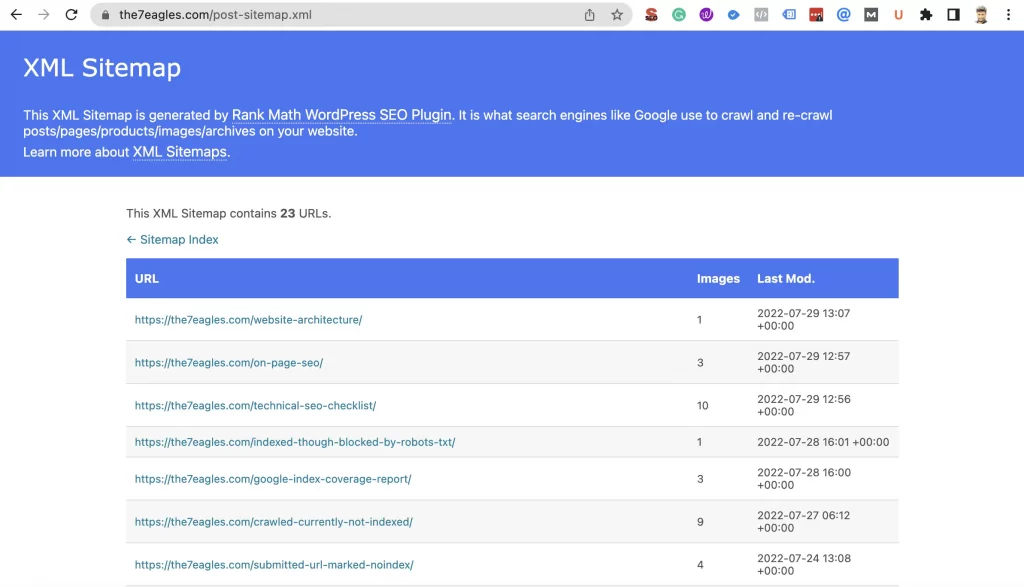

As mentioned in the previous section, the XML sitemap lists all the URLs to be indexed, and it is readable by search engine crawlers. The image above shows the structure of an XML sitemap.

Here are the XML sitemap tags you should know about:

- loc tag

- lastmod tag

- priority tag


Loc Tag:

The loc tag specifies the location of the web page on the website, i.e., its URL (uniform resource locator).

It is a required tag, and it should contain the canonical version of the page's URL.

The loc (location) tag should use the URL's correct protocol and subdomain.

For example:

- if your website's preferred domain excludes the www subdomain, then none of the URLs in the sitemap should include www;

- similarly, if your website serves its pages over HTTPS, then all the URLs should use HTTPS, and no HTTP URLs should be present.
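A minimal Python sketch of that rule, assuming the site's preferred form is HTTPS without www (the function name normalize_loc and the two constants are illustrative, not part of any tool). It rewrites each URL before it goes into a loc tag; it does not check that the rewritten URL actually resolves.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption for this sketch: the preferred version of every URL is
# https:// without the "www." prefix. Change these to match your site.
PREFERRED_SCHEME = "https"
STRIP_WWW = True


def normalize_loc(url: str) -> str:
    """Rewrite a URL so its protocol and host match the preferred version."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if STRIP_WWW and host.startswith("www."):
        host = host[len("www."):]
    path = parts.path or "/"
    # Fragments (#...) never identify a separate page, so they are dropped.
    return urlunsplit((PREFERRED_SCHEME, host, path, parts.query, ""))


if __name__ == "__main__":
    for raw in ["http://www.yourdomain.com/page1", "https://yourdomain.com"]:
        print(normalize_loc(raw))  # both come out as https://yourdomain.com/...
```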

For web pages that target international audiences, you can declare hreflang alternates in the XML sitemap using xhtml:link elements.

This helps crawlers identify the language and region of each web page.
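To show what the hreflang markup looks like, here is a sketch that builds the xhtml:link entries with Python's standard xml.etree.ElementTree. The URLs and the build_hreflang_sitemap name are hypothetical; following Google's documented pattern, each language version gets its own url entry that lists every alternate, including itself.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"

# Serialize with a default namespace and the "xhtml:" prefix.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("xhtml", XHTML_NS)


def build_hreflang_sitemap(alternates: dict[str, str]) -> str:
    """alternates maps hreflang codes (e.g. "en-us") to URLs of the same page.

    Each language version gets its own <url> entry, and every entry lists
    all alternates (including itself) as <xhtml:link rel="alternate">.
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in alternates.values():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for lang, alt_url in alternates.items():
            ET.SubElement(
                url,
                f"{{{XHTML_NS}}}link",
                {"rel": "alternate", "hreflang": lang, "href": alt_url},
            )
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_hreflang_sitemap({
        "en-us": "https://yourdomain.com/en/",
        "de-de": "https://yourdomain.com/de/",
    }))
```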

Lastmod Tag:

This tag is also important for crawlers, as it provides the date (and optionally the time) when the web page was last modified.

A Google Search Advocate has confirmed in a tweet that the loc and lastmod tags are the important ones for crawlers, as they help with crawl accessibility.


Content site owners should know that the last modified time is an important factor, as it helps Google determine who the original publisher is.

Google values fresh content, and the lastmod tag tells it how fresh a page is.

Using black-hat tactics, such as updating the lastmod tag without changing the content to pass a page off as fresh, can get your website penalized; Google may also start ignoring your lastmod values.
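One way to keep lastmod honest is to move the date only when the page content actually changes. This is a minimal sketch that assumes you store the previous content hash and lastmod for each URL between sitemap builds; the function names and that storage are hypothetical.

```python
from __future__ import annotations

import hashlib
from datetime import date


def content_hash(body: str) -> str:
    """Fingerprint of the page content, used to detect real changes."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def next_lastmod(body: str, previous_hash: str | None,
                 previous_lastmod: str | None, today: date) -> tuple[str, str]:
    """Return (lastmod, content_hash) for the next sitemap build.

    The date only moves forward when the content hash differs from the
    stored one; an unchanged page keeps its previous lastmod.
    """
    new_hash = content_hash(body)
    if new_hash == previous_hash and previous_lastmod is not None:
        return previous_lastmod, new_hash
    # W3C Datetime date form, e.g. "2022-08-04"
    return today.isoformat(), new_hash
```

Hashing the whole HTML also flags template-only changes (a new footer, for instance); hashing just the main article body is a closer match to a real content update.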

Priority Tag

Google has confirmed that it ignores the priority tag in sitemap.xml.

The tag indicates how important a page is relative to other URLs on the same site, on a scale from 0.0 to 1.0 (the default is 0.5).

What Are the Types of Sitemaps?

XML Sitemap Index:

A sitemap index is a file that lists your other sitemaps. This is an important sitemap every website should generate.

Each XML sitemap can contain up to 50,000 URLs and must be no larger than 50 MB uncompressed; a sitemap index can in turn list up to 50,000 sitemaps.

You can compress a sitemap with gzip (for example, sitemap.xml.gz) to save server bandwidth, but the 50 MB limit still applies to the uncompressed file.

When you exceed 50,000 URLs, it's time to split your URLs into several sitemaps by category and list them in the sitemap index. The categories can be pages, posts, categories, products, etc.
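The splitting step can be sketched in Python as below. It assumes a flat list of URLs and hypothetical child sitemap names (product-sitemap-1.xml and so on); it only enforces the 50,000-URL count, so you would still need to check the 50 MB uncompressed size of each file.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit; files must also stay under 50 MB uncompressed

ET.register_namespace("", SITEMAP_NS)


def chunk_urls(urls: list[str], size: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split a URL list into pieces that each fit in one sitemap file."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_locs: list[str]) -> str:
    """Build a <sitemapindex> document that points to each child sitemap."""
    index = ET.Element(f"{{{SITEMAP_NS}}}sitemapindex")
    for loc in sitemap_locs:
        entry = ET.SubElement(index, f"{{{SITEMAP_NS}}}sitemap")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = loc
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(index, encoding="unicode")


if __name__ == "__main__":
    urls = [f"https://yourdomain.com/product/{n}" for n in range(120_000)]
    parts = chunk_urls(urls)
    print([len(p) for p in parts])  # [50000, 50000, 20000]
    # Hypothetical child sitemap names, one per chunk.
    names = [f"https://yourdomain.com/product-sitemap-{i}.xml" for i in range(1, len(parts) + 1)]
    print(build_sitemap_index(names))
```

To serve a child sitemap compressed, gzip.compress(xml_text.encode("utf-8")) from Python's standard gzip module produces the bytes for a .xml.gz file.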

Here is what the sitemap URLs look like:

- https://yourdomain.com/sitemap.xml

- https://yourdomain.com/post-sitemap.xml

- https://yourdomain.com/page-sitemap.xml

- https://yourdomain.com/category-sitemap.xml

- https://yourdomain.com/product-sitemap.xml

You should submit all the XML sitemaps in Google Search Console and Bing Webmaster Tools. This helps crawlers discover and crawl all the web pages on the website.

Next, list all the XML sitemaps in robots.txt so that every crawler can find them. Only list sitemaps you have verified.

XML Image Sitemap:

This type of XML sitemap is used for better indexing of image files. Image SEO holds an important role in overall organic traffic.

In addition to an XML image sitemap, many webmasters optimize web pages with image schema markup. This can help images stand out in the SERP, which may attract a higher CTR (click-through rate).

An image XML sitemap looks as follows:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
        xmlns:image="http://www.google.com/schemas/sitemap-image/1.1">
  <url>
    <loc>http://yourdomain.com/</loc>
    <image:image>
      <image:loc>http://yourdomain.com/home.webp</image:loc>
    </image:image>
    <image:image>
      <image:loc>http://yourdomain.com/services.webp</image:loc>
    </image:image>
  </url>
  <url>
    <loc>http://yourdomain.com/page1</loc>
    <image:image>
      <image:loc>http://yourdomain.com/picture.webp</image:loc>
    </image:image>
  </url>
</urlset>
```
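If you generate the image sitemap yourself rather than with a plugin, a sketch like the following reproduces the structure above using Python's xml.etree.ElementTree. The build_image_sitemap name and the input dictionary shape are assumptions for illustration.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages: dict[str, list[str]]) -> str:
    """pages maps each page URL to the image URLs that appear on it."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_image_sitemap({
        "http://yourdomain.com/": ["http://yourdomain.com/home.webp",
                                   "http://yourdomain.com/services.webp"],
        "http://yourdomain.com/page1": ["http://yourdomain.com/picture.webp"],
    }))
```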

XML Video Sitemap:

This type of XML sitemap is used for better indexing of video files. Video SEO holds an important role in overall organic traffic.

In addition to an XML video sitemap, many webmasters optimize web pages with video schema markup. This can help videos appear as rich results in the SERP, which may attract a higher CTR.

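A video XML sitemap looks as follows:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
        xmlns:video="http://www.google.com/schemas/sitemap-video/1.1">
  <url>
    <loc>https://yourdomain.com/</loc>
    <video:video>
      <!-- placeholder thumbnail path -->
      <video:thumbnail_loc>https://yourdomain.com/seo-video-thumbnail.jpg</video:thumbnail_loc>
      <video:title>SEO services and package</video:title>
      <video:description>SEO services and package</video:description>
      <video:content_loc>http://streamserver.yourdomain.com/seo-video.webm</video:content_loc>
    </video:video>
  </url>
</urlset>
```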

Google News Sitemap:

These XML sitemaps should be generated and submitted by news publishers whose content appears in Google News.

A news sitemap can hold up to 1,000 URLs. So, update it frequently with new content.

Google only considers articles published in the last two days, so web pages older than two days should be removed from this sitemap.

So, to keep your Google News sitemap populated, publish articles at least every two days; otherwise, Google Search Console may report that the news sitemap is empty.
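The two-day window and the 1,000-URL cap can be applied before the news sitemap is written. This sketch covers only that selection step (a complete news sitemap entry also needs the news:news tags for publication name and language, publication date, and title); the article list and the select_news_articles name are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone

MAX_NEWS_URLS = 1000         # per news sitemap
MAX_AGE = timedelta(days=2)  # only articles from the last two days


def select_news_articles(articles: list[tuple[str, datetime]], now: datetime) -> list[str]:
    """Keep articles published within the last two days, newest first, max 1,000.

    `articles` holds (url, published_at) pairs with timezone-aware datetimes.
    """
    recent = [(url, published) for url, published in articles
              if timedelta(0) <= now - published <= MAX_AGE]
    recent.sort(key=lambda item: item[1], reverse=True)
    return [url for url, _ in recent[:MAX_NEWS_URLS]]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = [
        ("https://yourdomain.com/news/today", now - timedelta(hours=5)),
        ("https://yourdomain.com/news/last-week", now - timedelta(days=7)),
    ]
    print(select_news_articles(sample, now))  # only the article from today
```

An empty result means the news sitemap would have nothing to list.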

HTML Sitemap:

The HTML sitemap helps humans access the contents of the website. Search engines don't process an HTML sitemap as a sitemap file; to crawlers, it is just another web page.

When you have a website with 10,000+ URLs or a big e-commerce website, an HTML sitemap helps your visitors navigate the web pages easily.

Apart from this, it doesn't offer many SEO benefits, and it is no substitute for contextual internal linking.

How to Optimize Sitemap.xml for Better SEO Practice?

Sitemap.xml is one of the key items on a technical SEO checklist. When optimized, it helps crawlers identify new web pages.

Here are a few methods to optimize the XML sitemap for better crawl accessibility of your web pages and to avoid wasting crawl budget.

Create Sitemap.xml:

The first step in optimizing sitemap.xml is to create one. You can search Google for “Generate Sitemap.xml”, enter your domain name in one of the generator tools, and generate the file.

Then, download the XML file and upload it to the root directory of the website, so it is available at https://yourdomain.com/sitemap.xml.
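If you would rather not rely on an online generator, a basic sitemap.xml can be produced with Python's standard library. This is a sketch assuming you already have the list of indexable URLs and their last-modified dates; build_sitemap is an illustrative name. ElementTree escapes characters such as & in URLs, which hand-written XML often gets wrong.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." instead of an ns0: prefix


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """entries: (absolute URL, lastmod as YYYY-MM-DD) for each page to be indexed."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc  # & and < are escaped on output
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://yourdomain.com/", "2022-08-04"),
        ("https://yourdomain.com/page1", "2022-08-01"),
    ]))
```

Save the output as a UTF-8 file named sitemap.xml before uploading it.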

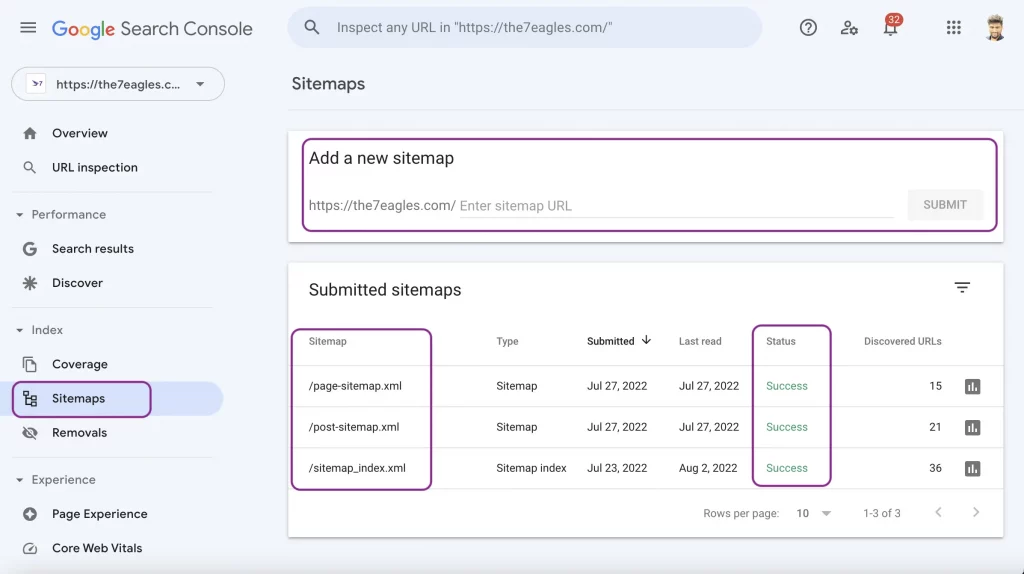

Submit XML sitemap in Google Search Console

The next step is to submit the sitemap in Google Search Console. This helps Googlebot discover all your web pages.

Once you log in to GSC, select your website's property. Then go to Index, followed by Sitemaps.

In that section, enter the sitemap URL and submit it.

Once submitted, check that the status is “Success” and that the number of discovered URLs matches the number of web pages you want indexed.

You can also see other details, such as when the crawler last read the sitemap.

Submit XML sitemap in Robots.txt

The next step is to reference the sitemap in robots.txt. This tells all crawlers, not just Google's, where to find sitemap.xml so they can discover new pages.

Make sure robots.txt lists every sitemap.xml that contains pages to be indexed.
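The robots.txt entry is a Sitemap: line with the sitemap's absolute URL, and it can appear anywhere in the file. Python's urllib.robotparser (3.8+) exposes these lines through site_maps(), which makes a quick check easy; the robots.txt content below is a hypothetical example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one "Sitemap:" line per sitemap (or just the index).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/

Sitemap: https://yourdomain.com/sitemap.xml
Sitemap: https://yourdomain.com/product-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
print(parser.site_maps())
```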

Only Include Web Pages to Be Indexed in Sitemap.xml

The main work in optimizing sitemap.xml is to include only the web pages you intend to rank.

Every website has a crawl budget, i.e., the number of web pages crawlers will visit within a given period.

Crawlers like Googlebot give more weight to the web pages included in sitemap.xml.

So, if sitemap.xml includes low-value web pages, crawl budget is wasted on them, and new pages may not get crawled.

So, submit only the web pages you want indexed in your XML sitemap.

So, exclude pages such as the following:

- Duplicate pages

- Paginated pages

- Category pages

- Web pages with confidential details

- Noindex pages

- Web pages blocked by robots.txt

- Pages that redirect

- 4xx and 5xx pages

- Archive pages

- Pages created for conversions (e.g., lead form submission)

- Comment URLs

- UTM parameter URLs
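Several of these checks can be automated when building the sitemap. The sketch below assumes you already have crawl data for each URL in a record like the hypothetical PageInfo class; it covers status codes (redirects, 4xx, 5xx), noindex, robots.txt blocking, canonicalized duplicates, and UTM parameters. Paginated, category, archive, and conversion pages depend on your site's URL patterns, so they are left out.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import parse_qs, urlsplit


@dataclass
class PageInfo:
    """Hypothetical per-URL crawl record; field names are assumptions."""
    url: str
    status: int                       # HTTP status code the URL returns
    noindex: bool = False             # page has a noindex robots directive
    blocked_by_robots: bool = False   # URL is disallowed in robots.txt
    canonical: str | None = None      # rel="canonical" target, if declared


def belongs_in_sitemap(page: PageInfo) -> bool:
    """Apply the automatable parts of the exclusion checklist."""
    if page.status != 200:  # redirects (3xx) and 4xx/5xx pages
        return False
    if page.noindex or page.blocked_by_robots:
        return False
    if page.canonical and page.canonical != page.url:  # duplicate of another URL
        return False
    params = parse_qs(urlsplit(page.url).query, keep_blank_values=True)
    if any(name.lower().startswith("utm_") for name in params):  # tracking URLs
        return False
    return True
```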

For example, if your website has a crawl budget of 500 pages per period and 1,000 URLs in sitemap.xml, crawlers can only cover 50% of those pages each period.

In this case, if 600 of those URLs are unwanted web pages, the 400 pages you actually want ranked become much less likely to be crawled.
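The arithmetic behind this example can be written out as a rough model. It assumes crawls are spread evenly over every URL in the sitemap; real crawlers prioritize, so treat the numbers as an illustration only. The function name is hypothetical.

```python
def expected_useful_crawls(crawl_budget: int, wanted: int, unwanted: int) -> float:
    """Expected crawls per period that land on wanted pages.

    Simplifying assumption: the crawl budget is spread evenly over all
    URLs in the sitemap. Real crawlers prioritize, so this is only a model.
    """
    total = wanted + unwanted
    if total == 0:
        return 0.0
    crawled = min(crawl_budget, total)
    return crawled * wanted / total


print(expected_useful_crawls(500, wanted=400, unwanted=600))  # 200.0
print(expected_useful_crawls(500, wanted=400, unwanted=0))    # 400.0
```

Under this model, removing the 600 unwanted URLs doubles the expected crawls of wanted pages from 200 to 400 per period.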

So, remove all the unwanted web pages from the sitemap.xml to optimize the crawl budget.

For example, the coverage issue “Submitted URL marked ‘noindex’” appears in Google Search Console when a web page with a noindex robots directive is submitted in sitemap.xml.

Note that sitemap.xml is not the only way Google finds your web pages; it also crawls them through the website's internal links.

Use Your Sitemap to Find Problems with Indexing:

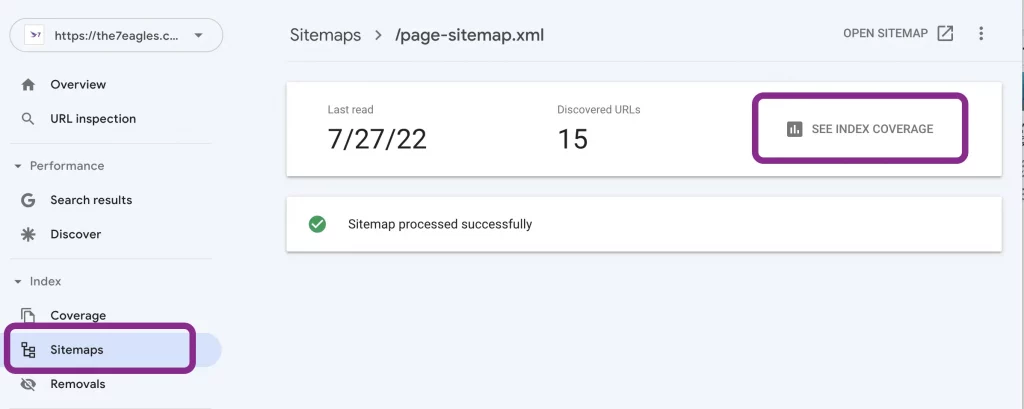

To check the coverage issues of a specific sitemap.xml, click on it, and you will see a view like the image above.

Click “See index coverage” to open the dashboard shown below.

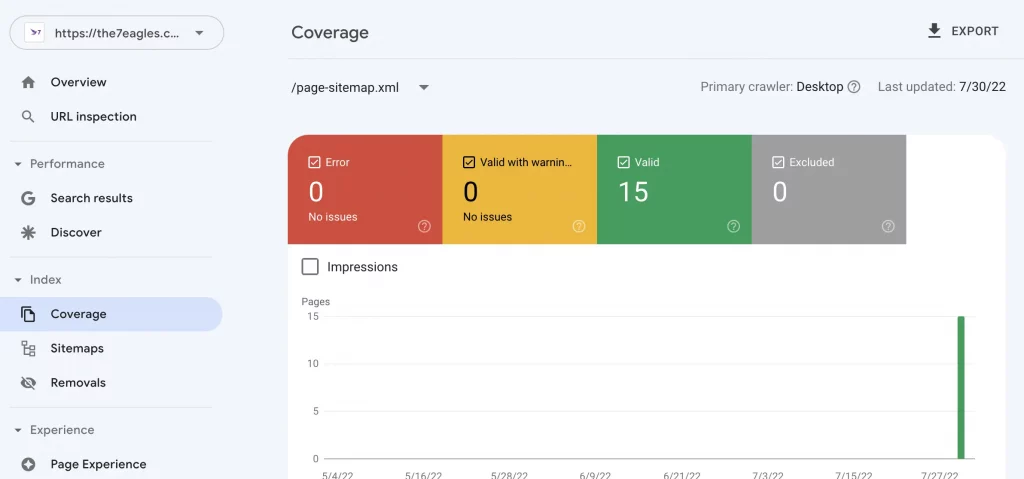

The dashboard groups web pages into Error, Valid with warning, Valid, and Excluded.

If any web pages appear under any Index coverage status other than Valid, fix those coverage issues.

Also check the Valid web pages: some may be reported as “Indexed, not submitted in sitemap”.

If you see web pages under this status that should be indexed, regenerate the sitemap to include them and submit it again.
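To find these pages, you can compare the reported URLs against your current sitemap. This sketch assumes you have the indexed URLs as a plain list (for example, exported from the report); the function names are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

NS = {"s": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(sitemap_xml: str) -> set[str]:
    """All <loc> values listed in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("s:url/s:loc", NS) if loc.text}


def indexed_not_submitted(indexed_urls: list[str], sitemap_xml: str) -> list[str]:
    """URLs reported as indexed that the current sitemap does not list."""
    return sorted(set(indexed_urls) - sitemap_locs(sitemap_xml))
```

Review the result before adding anything: a page that is indexed but shouldn't be belongs on the exclusion list, not in the sitemap.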

Sitemap Formats Supported by Google:

Google supports a few sitemap formats; other formats are not accepted.

The supported formats are the following:

- XML

- RSS, mRSS, and Atom

- Text

XML:

The XML sitemap is the most common format in this category, and its entire content is written in XML.

The sitemap.xml syntax looks as follows. Your XML sitemap is typically available at https://yourdomain.com/sitemap.xml.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://yourdomain.com</loc>
    <lastmod>2022-08-04</lastmod>
  </url>
</urlset>
```
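Before submitting, a quick sanity check of the file can catch common problems. This hypothetical check_sitemap helper verifies the root element and namespace, the 50,000-URL limit, absolute loc URLs, and parseable lastmod values. It accepts only full dates and date-times, a stricter subset of the W3C Datetime formats the protocol allows, and it is not a full schema validation.

```python
import xml.etree.ElementTree as ET
from datetime import datetime
from urllib.parse import urlsplit

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def check_sitemap(xml_bytes: bytes) -> list:
    """Return a list of problems found; an empty list means these basic checks passed."""
    root = ET.fromstring(xml_bytes)
    if root.tag != f"{{{NS}}}urlset":
        return [f"unexpected root element {root.tag}"]
    problems = []
    urls = root.findall(f"{{{NS}}}url")
    if len(urls) > 50_000:
        problems.append(f"{len(urls)} URLs exceeds the 50,000 limit")
    for i, url in enumerate(urls, start=1):
        loc = url.findtext(f"{{{NS}}}loc")
        if not loc or urlsplit(loc.strip()).scheme not in ("http", "https"):
            problems.append(f"url #{i}: missing or non-absolute loc")
        lastmod = url.findtext(f"{{{NS}}}lastmod")
        if lastmod is not None:
            try:
                # "Z" is rewritten because older Pythons don't accept it here.
                datetime.fromisoformat(lastmod.strip().replace("Z", "+00:00"))
            except ValueError:
                problems.append(f"url #{i}: lastmod {lastmod!r} is not a valid date")
    return problems
```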

RSS, mRSS, and Atom 1.0

If you have a blog with a feed (RSS or Atom), you can submit the feed URL as a sitemap. Most blog software can create a feed, but a feed only provides information on recent URLs.

CMSs like WordPress create feed URLs automatically, and these feed URLs are commonly reported under the “Crawled - currently not indexed” coverage status.

- Google accepts only RSS 2.0 and Atom 1.0 feeds.

- To provide Google with details about your video content, you can use an mRSS (media RSS) feed.

Text

If your sitemap includes only web page URLs, you can provide Google with a simple text file that contains one URL per line. For example,

http://www.example.com/file1.html

http://www.example.com/file2.html

Guidelines for text file sitemaps

- Encode your file using UTF-8 encoding.

- Don’t put anything other than URLs in the sitemap file.

- If the file has a .txt extension, you can name it anything you like (for example, sitemap.txt).
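A sketch of writing a text sitemap that follows these guidelines; build_text_sitemap and save_text_sitemap are illustrative names. It rejects anything that isn't an absolute http(s) URL so that only URLs end up in the file, and it writes the file as UTF-8.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def build_text_sitemap(urls: list[str]) -> str:
    """One absolute URL per line and nothing else (no headers, no comments)."""
    lines = []
    for url in urls:
        url = url.strip()
        if not url:
            continue  # skip blank entries rather than writing empty lines
        if urlsplit(url).scheme not in ("http", "https"):
            raise ValueError(f"not an absolute URL: {url!r}")
        lines.append(url)
    return "".join(f"{url}\n" for url in lines)


def save_text_sitemap(urls: list[str], filename: str = "sitemap.txt") -> None:
    """Write the sitemap as UTF-8 to a .txt file."""
    if not filename.endswith(".txt"):
        raise ValueError("text sitemaps need a .txt extension")
    with open(filename, "w", encoding="utf-8") as f:
        f.write(build_text_sitemap(urls))
```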