Crawling is the process by which search engines discover and understand the content of new and existing web pages on the internet, using bots known as crawlers. It is the first step for a search engine.

Once a page is crawled, the search engine assesses its relevance against its index database before indexing it. When it comes to SEO practice, the first step to focus on is making web pages crawlable.

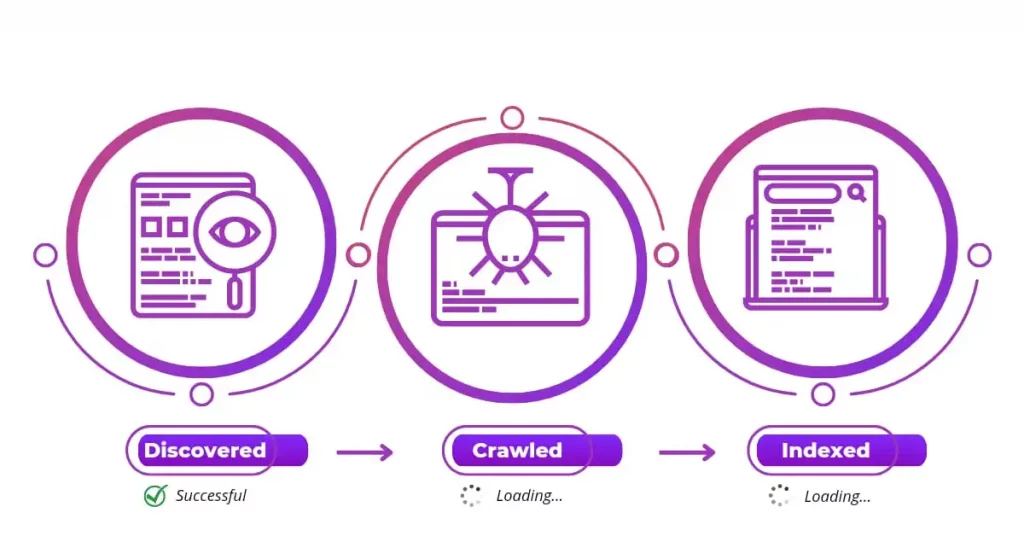

Discovering a URL does not mean that a search engine will go on to crawl it. You may have come across the "Discovered – currently not indexed" status: this coverage (page indexing) issue occurs when Google has found a URL but has not crawled it yet.

Recently, Google announced that Googlebot crawls only the first 15 MB of an HTML file. In other words, it follows a set of rules when crawling a web page, and it also analyzes the quality of that page. After all, who wants to spend time on lousy or scraped content?
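A size cap like this simply means that bytes past the limit are never read. Here is a minimal sketch of that behavior; it assumes "15 MB" means 15 × 1024 × 1024 bytes, and the constant and function names are hypothetical, not part of any Google API.

```python
# Assumption: "15 MB" is read as 15 * 1024 * 1024 bytes. Google's wording
# does not pin down the exact byte count, so treat this value as illustrative.
MAX_HTML_BYTES = 15 * 1024 * 1024


def bytes_considered(html: bytes, limit: int = MAX_HTML_BYTES) -> bytes:
    """Return only the prefix of the HTML that a size-capped crawler would use."""
    return html[:limit]


def exceeds_limit(html: bytes, limit: int = MAX_HTML_BYTES) -> bool:
    """True if part of the page's HTML would fall past the cap and be ignored."""
    return len(html) > limit


page = b"<html>" + b"x" * (16 * 1024 * 1024) + b"</html>"
print(exceeds_limit(page))           # True
print(len(bytes_considered(page)))   # 15728640
```

In practice this means anything important (content, links, structured data) should appear well within the first part of the HTML rather than at the very end of an oversized document.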

Search engine crawlers look at several signals about a web page before attempting to crawl it. They include:

- the web page is listed in the XML sitemap,

- internal links from referring web pages,

- high-quality backlinks pointing to the web page,

- compelling content on the web page,

- a robots meta tag with an index directive,

- a robots.txt file that allows crawling through Allow directives.
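The last two signals can be checked programmatically. Below is a minimal sketch using Python's standard `urllib.robotparser` and `html.parser` modules; the function names and the sample rules are hypothetical. Real crawlers apply their own matching logic: Google, for example, uses the most specific matching robots.txt rule, whereas `RobotFileParser` applies the first rule that matches, so treat this as an approximation.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def robots_allows(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class _RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def meta_allows_indexing(html: str) -> bool:
    """Return True unless the page's robots meta tag says 'noindex' (or 'none')."""
    meta = _RobotsMetaParser()
    meta.feed(html)
    return not ({"noindex", "none"} & meta.directives)


robots = "User-agent: *\nDisallow: /private/\n"
print(robots_allows(robots, "Googlebot", "https://example.com/blog/post"))     # True
print(robots_allows(robots, "Googlebot", "https://example.com/private/page"))  # False
print(meta_allows_indexing('<meta name="robots" content="index, follow">'))   # True
```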

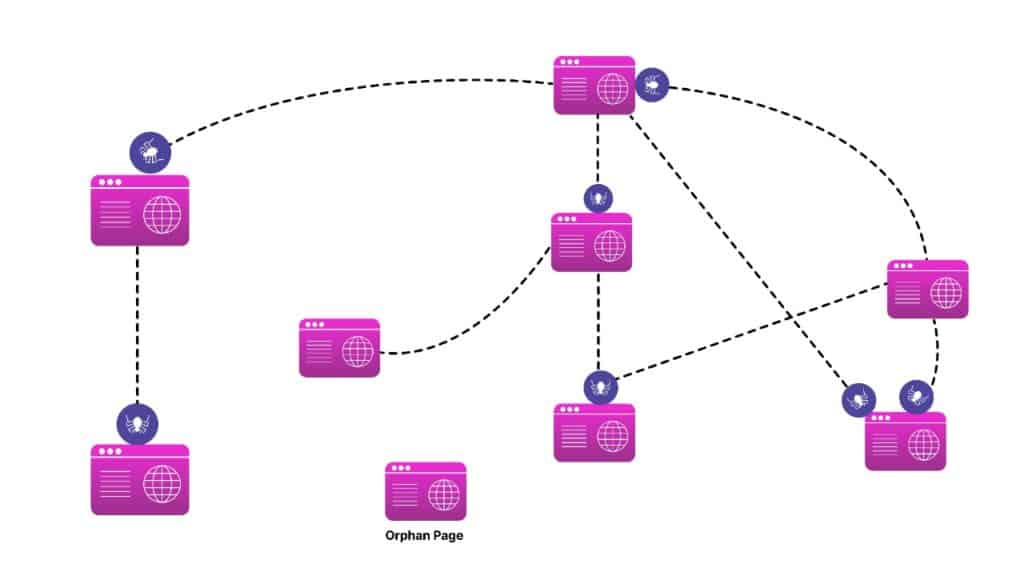

How Do Crawlers Find New Web Pages?

As far as how search engines work is concerned, discovering new web pages is the role of crawling.

The image above shows how crawlers find every page on a website: they discover a web page either through an internal link or through the XML sitemap.
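To illustrate the sitemap route, here is a minimal sketch that reads the `<loc>` entries from a standard `<urlset>` sitemap using Python's built-in `xml.etree.ElementTree`. The function name is hypothetical, and sitemap index files (`<sitemapindex>`) are deliberately not handled here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for <urlset> files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(sitemap_xml: str) -> list[str]:
    """Return every <loc> URL listed in a <urlset> XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/blog/crawling</loc></url>"
    "</urlset>"
)
print(urls_from_sitemap(sitemap))
```

Every URL returned this way counts as discovered, even if no internal link points to it.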

A web page that links to another web page is called a referring page, and the page it links to is called a linked page.

A web page that doesn't have any referring pages is known as an orphan page. Crawlers find orphan pages difficult to discover and crawl. To rank in search engines, a web page must first be discovered and then crawled.
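The referring-page and orphan-page definitions translate directly into a small graph check. In this sketch, `link_graph` (each crawled page mapped to the pages it links to) and `known_urls` (for example, the URLs in your sitemap) are hypothetical inputs you would collect from your own site audit.

```python
def referring_pages(link_graph: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert 'page -> linked pages' into 'linked page -> referring pages'."""
    inbound: dict[str, set[str]] = {}
    for referring, linked_pages in link_graph.items():
        for linked in linked_pages:
            if linked != referring:  # a page linking to itself is not a referring page
                inbound.setdefault(linked, set()).add(referring)
    return inbound


def orphan_pages(known_urls: set[str], link_graph: dict[str, set[str]]) -> set[str]:
    """Known URLs that no other crawled page links to."""
    inbound = referring_pages(link_graph)
    return {url for url in known_urls if not inbound.get(url)}


graph = {
    "/": {"/blog", "/about"},
    "/blog": {"/", "/blog/post-1"},
    "/about": {"/"},
}
sitemap_urls = {"/", "/blog", "/about", "/blog/post-1", "/landing-old"}
print(orphan_pages(sitemap_urls, graph))  # {'/landing-old'}
```

Here `/landing-old` is in the sitemap, so it can still be discovered, but no page refers to it, which is exactly the orphan situation described above.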

Crawlers do not necessarily crawl a web page as soon as it is discovered. Crawling the web consumes a lot of resources, so search engines like Google have to decide which discovered pages to crawl and when.
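One way to picture this trade-off is a crawl budget: the crawler ranks discovered URLs and fetches only as many as it can afford in a given round. The sketch below is a simplified model, not how Google actually schedules crawls; the priority scores and the `budget` value are hypothetical.

```python
def pick_urls_to_crawl(
    discovered: dict[str, float], budget: int
) -> tuple[list[str], list[str]]:
    """Split discovered URLs into those crawled this round and those left waiting.

    `discovered` maps each URL to a hypothetical priority score (higher first);
    `budget` is the number of fetches allowed this round.
    """
    ranked = sorted(discovered, key=discovered.get, reverse=True)
    return ranked[:budget], ranked[budget:]


crawl_now, waiting = pick_urls_to_crawl(
    {"/": 1.0, "/blog/new-post": 0.6, "/tag/misc?page=9": 0.1}, budget=2
)
print(crawl_now)  # ['/', '/blog/new-post']
print(waiting)    # ['/tag/misc?page=9']
```

URLs left in `waiting` are discovered but not yet crawled, which is roughly the situation Google Search Console reports as "Discovered – currently not indexed".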

What Technology Do Search Engines Use to Crawl Websites?

Bots are the technology search engines use to crawl websites. They are also known as web spiders, robots, web crawlers, etc.

These bots are automated programs that crawl, or scan, web pages across the internet.

Web crawlers reach new web pages through links from pages that are already indexed, and they follow the directives in robots tags.
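Link-following of this kind is essentially a breadth-first traversal. The sketch below crawls from a seed URL, queues every new link it finds, and skips a page's links when its robots meta tag says `nofollow`. The `fetch` callable is a hypothetical stand-in for an HTTP client (it returns the page HTML, or `None` on an error), and the sketch does not restrict itself to one domain or consult robots.txt.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin


class _LinkParser(HTMLParser):
    """Collects <a href> targets and any robots meta directives on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.robots: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "a" and attributes.get("href"):
            self.links.append(attributes["href"])
        elif tag == "meta" and attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            self.robots.update(d.strip().lower() for d in content.split(","))


def crawl(seed: str, fetch: Callable[[str], Optional[str]], max_pages: int = 100) -> list[str]:
    """Breadth-first crawl from `seed`; returns URLs in the order they were crawled."""
    queue = deque([seed])
    seen = {seed}
    crawled: list[str] = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # e.g. a 4xx/5xx response: nothing to read
            continue
        crawled.append(url)
        parser = _LinkParser()
        parser.feed(html)
        if "nofollow" in parser.robots or "none" in parser.robots:
            continue  # the page asks crawlers not to follow its links
        for href in parser.links:
            link, _fragment = urldefrag(urljoin(url, href))  # absolute URL, no #fragment
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled


pages = {
    "https://example.com/": '<a href="/blog">Blog</a> <a href="/about#team">About</a>',
    "https://example.com/blog": '<meta name="robots" content="nofollow"><a href="/secret">x</a>',
    "https://example.com/about": '<a href="https://example.com/">Home</a>',
}
print(crawl("https://example.com/", pages.get))
```

Because `/blog` carries `nofollow`, the crawler never queues `/secret`; that page would need another referring page or a sitemap entry to be discovered.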

Search engines depend on bots to update and maintain their databases and keep them accurate; without web crawlers, running a search engine would be extremely difficult. Bots come in a variety of types, each serving a different purpose.

Here are a few bots and their roles:

- Googlebot – Google crawler for desktop

- Googlebot-News – Google News crawler

- Googlebot-Image – Google Images crawler

- Googlebot Smartphone – Google crawler for mobile

- AdsBot-Google – Google Ads bot for desktop

- AdsBot-Google-Mobile – Google Ads bot for mobile

- Mediapartners-Google – AdSense bot

- Googlebot-Video – Google crawler for videos

- Bingbot – Bing crawler for both desktop and mobile

- MSNBot-Media – Bing crawler for images and videos

- Baiduspider – Baidu crawler for desktop and mobile

- Slurp – Yahoo crawler

- DuckDuckBot – DuckDuckGo crawler
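When reviewing server logs, you can tell these bots apart by the tokens in their User-Agent strings. The sketch below is a simple substring lookup; the token table is a hypothetical selection based on the list above, and the order matters because generic tokens such as "Googlebot" also appear inside more specific ones such as "Googlebot-Image". Keep in mind that User-Agent strings can be spoofed, and Google recommends confirming a genuine Googlebot with a reverse DNS lookup.

```python
from typing import Optional

# Checked top to bottom: specific tokens must come before the generic
# tokens they contain (e.g. "Googlebot-Image" before "Googlebot").
BOT_TOKENS = [
    ("Googlebot-News", "Google News crawler"),
    ("Googlebot-Image", "Google Images crawler"),
    ("Googlebot-Video", "Google Video crawler"),
    ("AdsBot-Google-Mobile", "Google Ads bot (mobile)"),
    ("AdsBot-Google", "Google Ads bot (desktop)"),
    ("Mediapartners-Google", "AdSense bot"),
    ("Googlebot", "Googlebot (desktop or smartphone)"),
    ("MSNBot-Media", "Bing images/videos crawler"),
    ("bingbot", "Bing crawler"),
    ("Baiduspider", "Baidu crawler"),
    ("Slurp", "Yahoo crawler"),
    ("DuckDuckBot", "DuckDuckGo crawler"),
]


def identify_bot(user_agent: str) -> Optional[str]:
    """Return a label for the first known bot token found in `user_agent`, else None."""
    ua = user_agent.lower()
    for token, label in BOT_TOKENS:
        if token.lower() in ua:
            return label
    return None


print(identify_bot("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
print(identify_bot("Googlebot-Image/1.0"))
```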

Besides these, many other bots analyze how websites behave. SEO tools like SEMrush and Ahrefs use their own web crawlers to diagnose website errors and track other KPIs.

Malware-detection software uses its own crawlers to scan websites for signs of malicious attacks.

What Are the Coverage Issues Caused by Failed Crawling?

Index coverage (now called Page indexing) issues are diagnosed in Google Search Console, a free SEO tool by Google. One coverage issue associated with crawling is "Discovered – currently not indexed".

Before a web page can rank, a search engine must discover, crawl, and index it. The discovery stage is complete once the crawler finds the page through a referring page or a sitemap.
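The three stages can be modeled as a simple status lookup. In this simplified sketch, the two "currently not indexed" labels are statuses that Google Search Console reports, while the `Stage` enum, the function name, and the boolean inputs are hypothetical.

```python
from enum import Enum


class Stage(Enum):
    UNKNOWN = "Not discovered yet"
    DISCOVERED = "Discovered – currently not indexed"
    CRAWLED = "Crawled – currently not indexed"
    INDEXED = "Indexed"


def indexing_stage(discovered: bool, crawled: bool, indexed: bool) -> Stage:
    """Map a URL's progress through discover -> crawl -> index to a status label."""
    if indexed:
        return Stage.INDEXED
    if crawled:
        return Stage.CRAWLED
    if discovered:
        return Stage.DISCOVERED
    return Stage.UNKNOWN


print(indexing_stage(discovered=True, crawled=False, indexed=False).value)
```

A URL stuck at `DISCOVERED` has passed the discovery stage but failed to get crawled, which is the case the rest of this section examines.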

However, search engines may fail to crawl a web page for various reasons, including any of the following:

- Heavy server load

- Blocked by Robots.txt

- Duplicate content without proper canonical tag

- Irrelevant Anchor text from the referring web pages

- HTTP error status codes (4xx, 5xx)

- Crawl Errors

- Brand new website (could be under Google sandbox period)

- Backlinks from spammy websites

- Low word count

- Auto-Generated Content

- Cloaking Content

- Penalized WHOIS history

- Involvement in link schemes

- Orphan Page
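Several items in this list can be checked automatically during a site audit. This sketch flags a few of them for one page; the `PageReport` fields and the 300-word threshold are hypothetical choices for illustration, not Google rules, and issues such as spammy backlinks or cloaking need other tooling.

```python
from dataclasses import dataclass


@dataclass
class PageReport:
    """Hypothetical per-URL facts gathered by a site audit."""
    url: str
    status_code: int
    blocked_by_robots: bool
    word_count: int
    referring_pages: int
    is_duplicate: bool = False
    has_canonical: bool = False


# Assumption: an arbitrary illustrative threshold, not a documented limit.
LOW_WORD_COUNT = 300


def crawl_risks(page: PageReport) -> list[str]:
    """Return the checkable crawl-failure causes that apply to this page."""
    risks = []
    if 400 <= page.status_code < 600:
        risks.append(f"HTTP error {page.status_code}")
    if page.blocked_by_robots:
        risks.append("Blocked by robots.txt")
    if page.is_duplicate and not page.has_canonical:
        risks.append("Duplicate content without canonical tag")
    if page.word_count < LOW_WORD_COUNT:
        risks.append("Low word count")
    if page.referring_pages == 0:
        risks.append("Orphan page")
    return risks


print(crawl_risks(PageReport("https://example.com/old", 404, True, 50, 0, is_duplicate=True)))
```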

In many cases, even web pages that were already indexed can end up excluded as "Discovered – currently not indexed" for the reasons above.

Key Takeaways:

- Once a page is discovered, crawling is the process of reading its content (HTML tags, text, images, links, etc.) to understand the page's relevance.

- Bots are the technology search engines use to crawl websites. They are also known as web spiders, web crawlers, robots, etc.

- Web spiders discover a new web page through referring pages or the XML sitemap.

- Crawling is mandatory in SEO practice: without it, you can't rank your web pages.

- The first step in SEO as per Mozlow’s hierarchy is crawl accessibility.

- If search engines run into errors crawling your important web pages, book our technical SEO services for an expert solution.